The StepFun AI team recently released Step-Audio-R1, a new audio large language model that makes effective use of additional computation during generation and reasoning, addressing the accuracy decline that current audio AI models show when handling long reasoning chains. The research team pointed out that this decline is not an inherent limitation of audio models, but a result of training them to reason over text rather than sound.

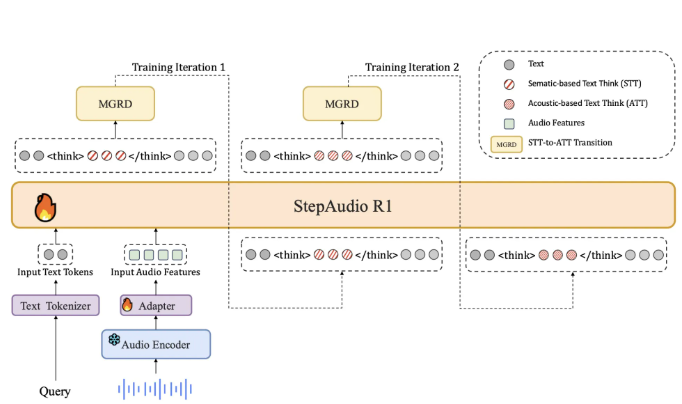

Most current audio models rely mainly on text data during training, so their reasoning process resembles reading a transcript rather than actually listening to sound. The StepFun team calls this phenomenon "text-based reasoning." To address it, Step-Audio-R1 is trained to ground its reasoning in audio evidence when generating answers. This is achieved through a training method called "modal reasoning distillation," which selects and refines the reasoning paths that relate to audio features.
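As a rough illustration of what "selecting reasoning paths related to audio features" could look like, the sketch below scores candidate reasoning traces by how often they mention acoustic cues versus transcript-style cues. The cue lists, the scoring rule, and the function names are all hypothetical; the article does not describe how StepFun actually performs the selection, so this is only a toy heuristic.

```python
import re

# Hypothetical cue lists, invented for this illustration only.
ACOUSTIC_CUES = ("pitch", "tempo", "timbre", "rhythm", "volume", "pause")
TEXTUAL_CUES = ("transcript", "the text says", "caption")


def count_cues(text: str, cues) -> int:
    """Count whole-phrase, case-insensitive occurrences of each cue."""
    lowered = text.lower()
    return sum(
        len(re.findall(r"\b" + re.escape(cue) + r"\b", lowered)) for cue in cues
    )


def acoustic_grounding_score(reasoning: str) -> int:
    """Acoustic mentions minus textual mentions (a toy proxy, not a real metric)."""
    return count_cues(reasoning, ACOUSTIC_CUES) - count_cues(reasoning, TEXTUAL_CUES)


def select_grounded(candidates, min_score: int = 1):
    """Keep only the reasoning traces whose score reaches min_score."""
    return [c for c in candidates if acoustic_grounding_score(c) >= min_score]


if __name__ == "__main__":
    traces = [
        "The speaker's pitch rises and the tempo slows, so she sounds hesitant.",
        "According to the transcript, the text says she is unsure.",
    ]
    for kept in select_grounded(traces):
        print(kept)
```

A real pipeline would presumably rely on a learned judge or on the model's own outputs rather than keyword counts; the point here is only the filter-then-keep shape of the step.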

In terms of architecture, Step-Audio-R1 is built on the Qwen2 audio encoder, which processes the raw waveform; an audio adapter then down-samples the encoder output to 12.5 Hz. The Qwen2.5 32B decoder consumes these audio features and generates text. When generating answers, the model always produces an explicit reasoning block within specific tags before the final answer, so that the structure and content of the reasoning can be optimized without affecting task accuracy.
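To make the two concrete details above easier to picture, here is a minimal sketch that (a) estimates how many audio feature frames the decoder would see at 12.5 Hz for a clip of a given length, and (b) splits a generated string into its reasoning block and final answer. The tag name `<think>` is an assumption, since the article only says "specific tags"; rounding the frame count up is also an assumption, and the helper names are hypothetical.

```python
import math
import re

FRAME_RATE_HZ = 12.5  # adapter output rate stated in the article

# Assumed tag name; the article does not say which tags the model uses.
THINK_RE = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def num_audio_frames(duration_seconds: float) -> int:
    """Estimate frames fed to the decoder, rounding up (assumed padding)."""
    return math.ceil(duration_seconds * FRAME_RATE_HZ)


def split_reasoning(output: str):
    """Return (reasoning, answer); reasoning is empty if no tagged block exists."""
    match = THINK_RE.search(output)
    if match is None:
        return "", output.strip()
    reasoning = match.group(1).strip()
    answer = output[match.end():].strip()
    return reasoning, answer


if __name__ == "__main__":
    print(num_audio_frames(10))  # a 10-second clip
    sample = "<think>Rising pitch suggests a question.</think>\nIt is a question."
    print(split_reasoning(sample))
```

At 12.5 Hz, each frame covers 80 ms of audio, so a 10-second clip maps to 125 frames under these assumptions.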

Training consisted of a supervised cold start phase followed by a reinforcement learning phase, both involving a mix of text and audio tasks. In the cold start phase, the team used 5 million samples, covering 100 million text tokens and 4 billion tokens of audio-paired data. In this phase, the model learned to generate reasoning that is useful for both audio and text, establishing its basic reasoning capabilities.

Through multiple rounds of "modal reasoning distillation," the research team extracted reasoning grounded in real acoustic features from audio questions and further optimized the model's reasoning ability using reinforcement learning. Step-Audio-R1 performed well on multiple audio understanding and reasoning benchmarks, with an overall score close to that of the industry-leading Gemini 3 Pro model.

Paper: https://arxiv.org/pdf/2511.15848

Key Points:

🔊 Step-Audio-R1, developed by StepFun AI, addresses the accuracy decline in audio reasoning by using a modal reasoning distillation method.

📈 The model builds on the Qwen2 audio encoder and the Qwen2.5 32B decoder, and it clearly separates the thinking process from the final answer during reasoning, improving audio processing capabilities.

🏆 Step-Audio-R1 has outperformed Gemini 2.5 Pro in multiple benchmarks and is comparable to Gemini 3 Pro.