At a recent technology launch event, the vLLM team officially introduced vLLM-Omni, an inference framework designed for omni-modality models. The framework aims to simplify multimodal inference and to support next-generation models that can understand and generate many kinds of content. Unlike traditional serving systems built for text-in, text-out models, vLLM-Omni can handle multiple input and output types, including text, images, audio, and video.

Since the project began, the vLLM team has focused on efficient inference for large language models (LLMs), particularly in terms of throughput and memory usage. Modern generative models, however, have moved beyond text-only interaction, and demand for inference across more modalities has grown steadily. vLLM-Omni was created in this context and is one of the first open-source frameworks to support omni-modal inference.

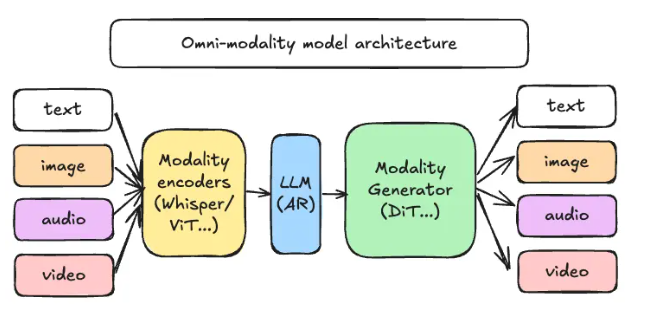

vLLM-Omni adopts a new decoupled pipeline architecture that redesigns the data flow so that inference work is distributed and coordinated efficiently across stages. In this architecture, an inference request passes through three key components: the modality encoder, the LLM core, and the modality generator. The modality encoder converts multimodal inputs into vector representations, the LLM core handles text generation and multi-turn conversation, and the modality generator produces image, audio, or video output.
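The three-stage flow described above can be illustrated with a small, generic sketch. This is not vLLM-Omni's API: the function names (`encode`, `llm_core`, `generate`), the request fields, and the per-stage worker counts are all hypothetical, and each stage body is a trivial placeholder for a real model. The sketch only shows the general idea of a decoupled pipeline, where stages are connected by queues and each stage gets its own pool of workers.

```python
import queue
import threading

STOP = object()  # sentinel telling a worker to exit


def encode(request):
    # Placeholder "modality encoder": turn the input into a toy vector.
    embedding = [len(word) for word in request["text"].split()]
    return {"id": request["id"], "embedding": embedding, "target": request["target"]}


def llm_core(encoded):
    # Placeholder "LLM core": reduce the vector to a single hidden value.
    return {"id": encoded["id"], "hidden": sum(encoded["embedding"]), "target": encoded["target"]}


def generate(state):
    # Placeholder "modality generator": emit a tagged output string.
    return {"id": state["id"], "output": f"{state['target']}:{state['hidden']}"}


def start_stage(fn, inbox, outbox, workers):
    """Start `workers` threads that apply `fn` to items from inbox."""
    def loop():
        while (item := inbox.get()) is not STOP:
            outbox.put(fn(item))

    threads = [threading.Thread(target=loop) for _ in range(workers)]
    for t in threads:
        t.start()
    return threads


def stop_stage(threads, inbox):
    """Send one sentinel per worker, then wait for the stage to drain."""
    for _ in threads:
        inbox.put(STOP)
    for t in threads:
        t.join()


def run_pipeline(requests, workers):
    # One queue between each pair of adjacent stages, plus input and output.
    q_in, q_enc, q_llm, q_out = (queue.Queue() for _ in range(4))
    stages = [
        (start_stage(encode, q_in, q_enc, workers["encoder"]), q_in),
        (start_stage(llm_core, q_enc, q_llm, workers["llm_core"]), q_enc),
        (start_stage(generate, q_llm, q_out, workers["generator"]), q_llm),
    ]
    for request in requests:
        q_in.put(request)
    # Shut stages down in order so each one finishes before the next stops.
    for threads, inbox in stages:
        stop_stage(threads, inbox)
    results = []
    while not q_out.empty():
        results.append(q_out.get())
    return sorted(results, key=lambda r: r["id"])


if __name__ == "__main__":
    demo = [
        {"id": 0, "text": "draw a cat", "target": "image"},
        {"id": 1, "text": "say hello", "target": "audio"},
    ]
    # Each stage is sized independently (hypothetical numbers).
    print(run_pipeline(demo, {"encoder": 2, "llm_core": 1, "generator": 3}))
```

Because each stage only talks to its neighbors through a queue, changing the worker count for one stage does not require touching the others, which is the property that makes independent scaling possible in a design like this.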

Because the stages are decoupled, engineering teams can scale each one independently and plan its resource deployment separately. They can also adjust resource allocation to match actual business needs, which improves overall efficiency.

GitHub: https://github.com/vllm-project/vllm-omni

Key points:

🌟 vLLM-Omni is a new inference framework for multimodal models that handle many types of content, including text, images, audio, and video.

⚙️ The framework uses a decoupled pipeline architecture, which improves inference efficiency and lets resources be tuned separately for each stage.

📚 The source code and documentation are now available, and developers are welcome to explore and apply the technology.