NVIDIA has recently introduced ToolOrchestra, a method that teaches AI systems to choose the right models and tools for a task instead of relying on a single large general-purpose model. It trains a small language model, Orchestrator-8B, to act as the "brain" of an agent that coordinates multiple tools, with the goal of handling tasks more efficiently.

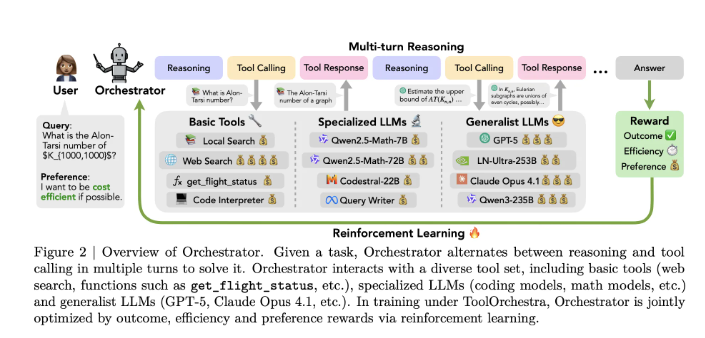

Most current AI agents rely on a single large model, such as GPT-5, to pick tools and complete tasks from a prompt. However, the researchers report that a model used this way tends to favor its own capabilities when deciding what to call, which wastes resources. To address this, ToolOrchestra trains a dedicated control model, Orchestrator-8B, and uses reinforcement learning to optimize its tool selection.

Orchestrator-8B is an 8-billion-parameter decoder-only Transformer fine-tuned from Qwen3-8B. Its workflow has three steps: first, it parses the user's instruction along with optional natural-language preferences, such as prioritizing low latency or avoiding web searches; next, it produces a reasoning trace and plans its next action; finally, it chooses from the available tools and issues a tool call in a unified JSON format. This loop repeats until the task is complete or a 50-step limit is reached.
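The loop described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not NVIDIA's implementation: `toy_policy` is a hypothetical stand-in for Orchestrator-8B, and the `sum` tool, the JSON keys (`reasoning`, `tool`, `arguments`), and the `final_answer` pseudo-tool are invented for the example. Preferences are passed through to the policy but ignored by the stub.

```python
import json
from typing import Callable, Optional

MAX_STEPS = 50  # step cap described in the article


# Hypothetical tool; the real system routes among search, code execution,
# and other models, none of which are modeled here.
def sum_tool(args: dict) -> str:
    return str(sum(args["numbers"]))


TOOLS: dict[str, Callable[[dict], str]] = {"sum": sum_tool}


def toy_policy(instruction: str, preferences: str, history: list) -> str:
    """Hypothetical stand-in for Orchestrator-8B: emits one JSON tool call per step.

    A real orchestrator would read the instruction and preferences; this
    stub ignores them and follows a fixed two-step script.
    """
    if not history:
        return json.dumps({"reasoning": "Add the numbers with a tool.",
                           "tool": "sum", "arguments": {"numbers": [2, 3, 5]}})
    return json.dumps({"reasoning": "The tool result answers the task.",
                       "tool": "final_answer",
                       "arguments": {"answer": history[-1]["result"]}})


def run_episode(instruction: str, preferences: str = "",
                policy: Callable[[str, str, list], str] = toy_policy,
                max_steps: int = MAX_STEPS) -> tuple[Optional[str], list]:
    history: list = []
    for step in range(max_steps):
        # 1) The policy reasons and returns a tool call as JSON.
        call = json.loads(policy(instruction, preferences, history))
        # 2) A final_answer call ends the episode.
        if call["tool"] == "final_answer":
            return call["arguments"]["answer"], history
        # 3) Otherwise execute the chosen tool and record the observation.
        result = TOOLS[call["tool"]](call["arguments"])
        history.append({"step": step, "tool": call["tool"], "result": result})
    return None, history  # step limit reached without a final answer
```

Calling `run_episode("Add 2, 3 and 5", preferences="avoid web search")` returns `"10"` after a single tool call. Replacing `policy` with a real model client is where learned routing would come in; the step cap keeps a policy that never finishes from running forever.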

ToolOrchestra's reinforcement learning setup combines several reward signals so that the model learns to finish tasks efficiently. The reward has three components: a binary outcome reward for whether the task was solved, an efficiency reward that accounts for cost and latency, and a preference reward for following the user's stated preferences. Together, these shape the policy so that Orchestrator-8B chooses and uses tools more flexibly.
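One way to picture how the three reward components might be combined is a single weighted score. The sketch below is an assumption for illustration only: the `Trajectory` fields, the linear budget normalization, the weights `w_eff` and `w_pref`, and the choice to gate the efficiency and preference terms on success are all hypothetical and are not taken from the paper.

```python
from dataclasses import dataclass, field


@dataclass
class Trajectory:
    solved: bool                                       # did the final answer pass the task check?
    cost_usd: float                                    # total spend on model and tool calls
    latency_s: float                                   # wall-clock time for the episode
    used_tools: set[str] = field(default_factory=set)  # names of tools that were called


def composite_reward(traj: Trajectory, *, cost_budget: float = 1.0,
                     latency_budget_s: float = 600.0,
                     disallowed: frozenset[str] = frozenset(),
                     w_eff: float = 0.5, w_pref: float = 0.5) -> float:
    """Hypothetical combination of outcome, efficiency, and preference rewards."""
    # Binary outcome reward: 1 if the task was solved, else 0.
    outcome = 1.0 if traj.solved else 0.0
    # Efficiency: 1.0 for a free, instant run, falling linearly to 0 at the budget.
    cost_term = max(0.0, 1.0 - traj.cost_usd / cost_budget)
    time_term = max(0.0, 1.0 - traj.latency_s / latency_budget_s)
    efficiency = 0.5 * (cost_term + time_term)
    # Preference: 0 if any tool the user asked to avoid was called, else 1.
    preference = 0.0 if traj.used_tools & disallowed else 1.0
    # Gate the shaping terms on success so failed runs always score 0.
    return outcome * (1.0 + w_eff * efficiency + w_pref * preference)
```

Gating on success means a cheap but failed run scores 0, so the policy cannot earn reward just by doing little. With the defaults, a solved run costing $0.10 and 60 seconds that avoids every disallowed tool scores 1.95.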

Orchestrator-8B performed strongly across a series of benchmarks. On Humanity's Last Exam (HLE), for example, it reached 37.1% accuracy versus 35.1% for GPT-5. It was also cheaper and faster: its average cost was $0.092 and its average time 8.2 minutes, compared with $0.302 and 19.8 minutes for GPT-5, or roughly a third of the cost and well under half the time. These results suggest that Orchestrator-8B uses resources more efficiently, making it attractive for teams that prioritize efficiency and cost.
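The size of the efficiency gap can be checked with a few lines of arithmetic on the figures quoted above (the accuracy numbers are the HLE results; the cost and time numbers are the article's reported averages).

```python
# Figures as quoted in the article.
orchestrator = {"accuracy_pct": 37.1, "cost_usd": 0.092, "minutes": 8.2}
gpt5 = {"accuracy_pct": 35.1, "cost_usd": 0.302, "minutes": 19.8}

cost_ratio = orchestrator["cost_usd"] / gpt5["cost_usd"]             # fraction of GPT-5's cost
time_ratio = orchestrator["minutes"] / gpt5["minutes"]               # fraction of GPT-5's time
accuracy_gain = orchestrator["accuracy_pct"] - gpt5["accuracy_pct"]  # percentage points

print(f"cost: {cost_ratio:.1%} of GPT-5, time: {time_ratio:.1%} of GPT-5, "
      f"accuracy: +{accuracy_gain:.1f} pts")
# prints: cost: 30.5% of GPT-5, time: 41.4% of GPT-5, accuracy: +2.0 pts
```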

By training a dedicated routing policy rather than relying on one monolithic model, NVIDIA's ToolOrchestra marks an important step toward building complex AI systems, improving both the efficiency and the accuracy of task processing.

Paper: https://arxiv.org/pdf/2511.21689

Key Points:

🧠 Orchestrator-8B is a small 8-billion-parameter control model developed by NVIDIA to make multi-tool use more efficient.

💡 Through reinforcement learning, Orchestrator-8B learns to select and call tools more flexibly, reducing wasted resources.

📊 In multiple benchmark tests, Orchestrator-8B outperformed large models such as GPT-5 in both accuracy and efficiency.