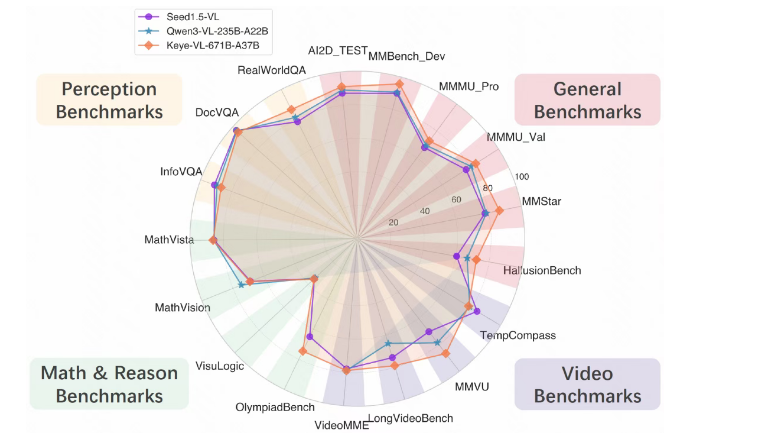

Kuaishou has officially launched Keye-VL-671B-A37B, its next-generation flagship multimodal model, and open-sourced the code at the same time. Billed as "good at seeing and thinking," the model performs strongly on multiple core benchmarks covering general visual understanding, video analysis, and mathematical reasoning, further strengthening Kuaishou's position in artificial intelligence.

Keye-VL-671B-A37B is designed for stronger multimodal understanding and complex reasoning. Building on the strong general capabilities of its base model, it systematically upgrades visual perception, cross-modal alignment, and the complex reasoning pipeline, improving the accuracy and stability of its responses across a range of scenarios. The goal is more accurate results on both everyday applications and difficult tasks.

Architecturally, Keye-VL-671B-A37B uses DeepSeek-V3-Terminus as its large language model backbone and connects it through MLP layers to the vision encoder KeyeViT, which is initialized from Keye-VL-1.5. The "671B-A37B" suffix matches the DeepSeek-V3 family's mixture-of-experts configuration of 671B total parameters, of which about 37B are activated per token. Pre-training proceeds in three stages that systematically build the model's multimodal understanding and reasoning. Training on 300B of strictly filtered, high-quality pre-training data gives the model solid visual understanding while keeping computational cost under control.
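The vision-encoder → MLP → LLM connection described above follows a common VLM pattern. The article does not specify how the connector is built. What follows is therefore a minimal pure-Python sketch of that general pattern, not Keye-VL's implementation. Several details are assumptions: the two-layer GELU MLP, the toy dimensions, the random initialization, and the choice to prepend visual tokens to the text sequence. The names `MLPProjector` and `build_llm_input` are hypothetical.

```python
import math
import random


def gelu(x: float) -> float:
    # tanh approximation of the GELU activation
    return 0.5 * x * (1.0 + math.tanh(math.sqrt(2.0 / math.pi) * (x + 0.044715 * x ** 3)))


def linear(x, weight, bias):
    # weight has out_dim rows of in_dim floats; returns weight @ x + bias
    return [sum(w * xi for w, xi in zip(row, x)) + b for row, b in zip(weight, bias)]


def init_linear(in_dim, out_dim, rng):
    # Uniform init scaled by 1/sqrt(in_dim); zero bias
    scale = 1.0 / math.sqrt(in_dim)
    weight = [[rng.uniform(-scale, scale) for _ in range(in_dim)] for _ in range(out_dim)]
    return weight, [0.0] * out_dim


class MLPProjector:
    """Hypothetical two-layer MLP mapping vision-token features into the LLM embedding space."""

    def __init__(self, vis_dim: int, llm_dim: int, seed: int = 0):
        rng = random.Random(seed)
        self.w1, self.b1 = init_linear(vis_dim, llm_dim, rng)
        self.w2, self.b2 = init_linear(llm_dim, llm_dim, rng)

    def __call__(self, vision_tokens):
        projected = []
        for tok in vision_tokens:
            hidden = [gelu(h) for h in linear(tok, self.w1, self.b1)]
            projected.append(linear(hidden, self.w2, self.b2))
        return projected


def build_llm_input(vision_tokens, text_embeddings, projector):
    """Prepend projected visual tokens to the text embeddings (one common layout, assumed here)."""
    return projector(vision_tokens) + text_embeddings


if __name__ == "__main__":
    proj = MLPProjector(vis_dim=8, llm_dim=16)
    rng = random.Random(1)
    vision_tokens = [[rng.gauss(0.0, 1.0) for _ in range(8)] for _ in range(4)]
    text_embeddings = [[0.0] * 16 for _ in range(3)]
    seq = build_llm_input(vision_tokens, text_embeddings, proj)
    print(len(seq), len(seq[0]))  # 7 16
```

The projector's only job is to change each visual token's width from the vision encoder's feature size to the LLM's hidden size. After that, the LLM can treat image patches like ordinary token embeddings. In a real system the widths would be the actual KeyeViT and DeepSeek-V3-Terminus hidden sizes rather than 8 and 16.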

Concretely, the first stage freezes the parameters of the vision and language models for initial alignment training. The second stage unfreezes all parameters for full pre-training. The third stage performs annealing training on higher-quality data, which significantly improves the model's fine-grained perception. Post-training then consists of supervised fine-tuning, a cold-start stage, and reinforcement learning, with training tasks covering visual question answering, chart understanding, and rich-text OCR.
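The three pre-training stages can be expressed as a per-stage freeze mask plus a learning-rate schedule. This is a hedged sketch under stated assumptions, not Kuaishou's training code. The module names are hypothetical, and so are `Stage`, `freeze_mask`, and `annealed_lr`. Two further details are assumptions because the article does not state them: that all modules remain trainable during annealing, and that "annealing" means a linear learning-rate decay.

```python
from dataclasses import dataclass
from typing import Dict, FrozenSet

# Hypothetical module names for illustration; not taken from the Keye-VL codebase.
MODULES = ("vision_encoder", "projector", "language_model")


@dataclass(frozen=True)
class Stage:
    name: str
    trainable: FrozenSet[str]  # modules whose parameters receive gradient updates
    data: str                  # description of the data mix (illustrative only)


STAGES = [
    # Stage 1: ViT and LLM frozen, so only the MLP connector is updated for alignment.
    Stage("alignment", frozenset({"projector"}), "image-text alignment data"),
    # Stage 2: all parameters unfrozen for full multimodal pre-training.
    Stage("full_pretrain", frozenset(MODULES), "bulk of the filtered pre-training data"),
    # Stage 3: annealing on higher-quality data (assumed: all modules stay trainable).
    Stage("anneal", frozenset(MODULES), "higher-quality subset"),
]


def freeze_mask(stage: Stage) -> Dict[str, bool]:
    """Map each module name to True if its parameters are trainable in this stage."""
    return {m: (m in stage.trainable) for m in MODULES}


def annealed_lr(step: int, total_steps: int, peak_lr: float, min_lr: float = 0.0) -> float:
    """Linearly decay from peak_lr to min_lr over the anneal stage (an assumed schedule)."""
    if total_steps <= 0:
        raise ValueError("total_steps must be positive")
    frac = min(max(step / total_steps, 0.0), 1.0)  # clamp progress to [0, 1]
    return peak_lr + (min_lr - peak_lr) * frac


if __name__ == "__main__":
    for stage in STAGES:
        print(stage.name, freeze_mask(stage))
```

The design mirrors the staged recipe in the text. Freezing both large pretrained networks in stage 1 lets a small connector learn the alignment cheaply. Full unfreezing and a final low-learning-rate pass over cleaner data then refine the whole model.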

Kuaishou said that Keye-VL will continue to strengthen its base model capabilities and further integrate multimodal agent capabilities, evolving toward a more intelligent form that "can use tools and solve complex problems." The model's multi-turn tool-calling abilities will be strengthened so that it can autonomously invoke external tools during real tasks to carry out complex search, reasoning, and information integration. Keye-VL will also explore key directions such as "think with image" and "think with video." The aim is for the model to go beyond understanding images and videos and reason deeply about their content through chains of reasoning.

Driven by both its base capabilities and its agent capabilities, Kuaishou's Keye-VL aims to keep pushing the limits of multimodal intelligence. Its target is a next generation of multimodal systems that are more general, more reliable, and stronger at reasoning. This work is expected to bring both new opportunities and new challenges to the development of multimodal AI.