AMD, in collaboration with IBM and AI startup Zyphra, has launched ZAYA1, the world's first mixture-of-experts (MoE) foundation model trained entirely on AMD hardware. It was pre-trained on 14T tokens, and its overall performance is comparable to the Qwen3 series. Even without instruction fine-tuning, its mathematics and STEM reasoning approaches that of Qwen3 Professional.

Training Scale

- Cluster: 128 IBM Cloud nodes × 8 AMD Instinct MI300X GPUs each, 1,024 GPUs in total, using AMD Infinity Fabric and the ROCm software stack; peak performance of 750 PFLOPS
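The cluster figures can be sanity-checked with simple arithmetic. This is a minimal sketch, assuming the 750 PFLOPS figure is an aggregate peak for all 1,024 GPUs (the article does not say which numeric precision it refers to); the function names are hypothetical.

```python
# Back-of-envelope check of the cluster figures quoted in the article.
NODES = 128
GPUS_PER_NODE = 8
PEAK_PFLOPS = 750  # assumed: aggregate peak for the whole cluster

def total_gpus(nodes: int, gpus_per_node: int) -> int:
    """Total accelerator count across all nodes."""
    return nodes * gpus_per_node

def per_gpu_pflops(cluster_pflops: float, gpus: int) -> float:
    """Evenly split the aggregate peak across GPUs."""
    return cluster_pflops / gpus

gpus = total_gpus(NODES, GPUS_PER_NODE)
print(gpus)  # 1024
print(round(per_gpu_pflops(PEAK_PFLOPS, gpus), 3))  # 0.732
```

Under that assumption, each MI300X contributes roughly 0.73 PFLOPS of the quoted peak.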

- Data: 14T tokens, with a curriculum that shifts from general web pages toward mathematics, code, and reasoning data; later-stage training versions will be released separately
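The article gives only the direction of the curriculum. One simple way to express such a shift is to interpolate data-mixture weights over the token budget. The sketch below assumes a linear schedule and uses made-up mixture weights (`START_MIX`, `END_MIX` are hypothetical), since ZAYA1's actual data proportions are not published here.

```python
# Hypothetical curriculum schedule: the mixture weights are illustrative
# placeholders, NOT ZAYA1's actual data proportions.
START_MIX = {"web": 0.85, "math": 0.05, "code": 0.05, "reasoning": 0.05}
END_MIX = {"web": 0.25, "math": 0.25, "code": 0.25, "reasoning": 0.25}
TOTAL_TOKENS = 14e12  # 14T-token budget from the article

def mixture_at(tokens_seen: float) -> dict[str, float]:
    """Linearly interpolate sampling weights as training progresses."""
    frac = min(max(tokens_seen / TOTAL_TOKENS, 0.0), 1.0)  # clamp to [0, 1]
    return {k: (1 - frac) * START_MIX[k] + frac * END_MIX[k] for k in START_MIX}

for t in (0, 7e12, 14e12):
    mix = mixture_at(t)
    print(f"{t / 1e12:>4.0f}T tokens:", {k: round(v, 3) for k, v in mix.items()})
```

Because both endpoint mixtures sum to 1, every interpolated mixture does too; a real curriculum might instead use discrete phases or a non-linear ramp.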

Architecture Innovations

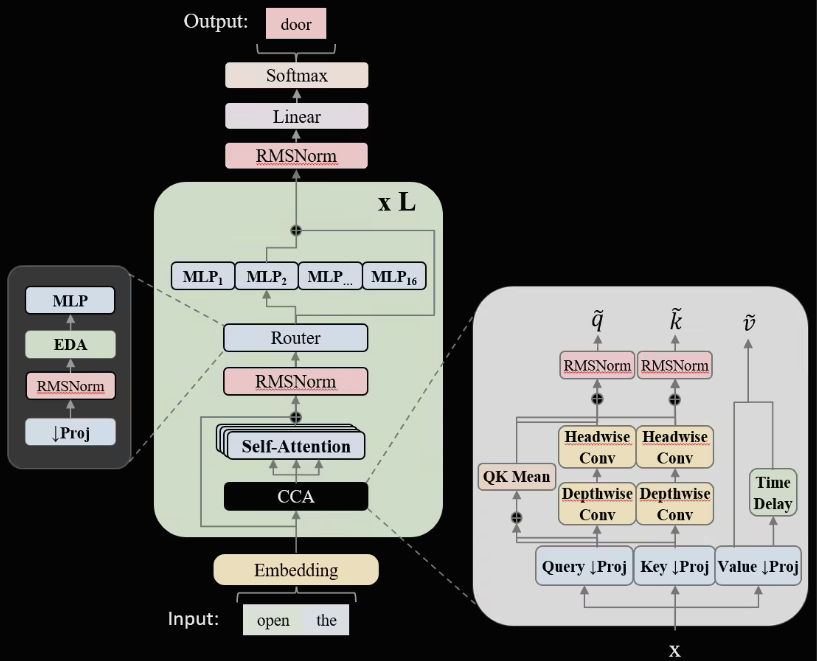

1. CCA (Compressed Convolutional Attention): attention heads that combine convolution with compressed embeddings, reducing memory usage by 32% and increasing long-context throughput by 18%

2. Linear Routing MoE: finer-grained experts plus load-balancing regularization, improving top-2 routing accuracy by 2.3 percentage points and maintaining high expert utilization even at 70% sparsity
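The toy sketch below illustrates the general idea behind the CCA description: project hidden states down to a smaller width `c`, apply a short causal convolution along the sequence to queries and keys, run causal attention in that compressed space, and project back up to the model width `d`. It is a simplified single-head illustration written under those assumptions, not Zyphra's CCA implementation; all function names are hypothetical. Because keys and values live at width `c` rather than `d`, a KV cache built this way would be roughly `c/d` the size of a full-width one.

```python
import math
import random

def matvec(v: list[float], w: list[list[float]]) -> list[float]:
    """Multiply row vector v (length n) by matrix w (n x m)."""
    return [sum(v[i] * w[i][j] for i in range(len(v))) for j in range(len(w[0]))]

def causal_conv(seq: list[list[float]], kernel: list[list[float]]) -> list[list[float]]:
    """Depthwise causal conv over time: out[t][j] = sum_i kernel[i][j] * seq[t - i][j]."""
    dim = len(seq[0])
    out = []
    for t in range(len(seq)):
        row = [0.0] * dim
        for i, w in enumerate(kernel):
            if t - i >= 0:  # only past and current positions contribute
                for j in range(dim):
                    row[j] += w[j] * seq[t - i][j]
        out.append(row)
    return out

def softmax(xs: list[float]) -> list[float]:
    m = max(xs)
    es = [math.exp(x - m) for x in xs]
    s = sum(es)
    return [e / s for e in es]

def toy_compressed_conv_attention(x, w_q, w_k, w_v, w_o, kernel):
    """Hypothetical single-head attention computed at compressed width c < d."""
    c = len(w_q[0])
    q = causal_conv([matvec(t, w_q) for t in x], kernel)  # d -> c, then conv
    k = causal_conv([matvec(t, w_k) for t in x], kernel)
    v = [matvec(t, w_v) for t in x]  # k and v are c-dim: smaller cache
    out = []
    for t in range(len(x)):
        scores = [sum(a * b for a, b in zip(q[t], k[s])) / math.sqrt(c) for s in range(t + 1)]
        probs = softmax(scores)  # causal: attend to positions 0..t only
        ctx = [sum(p * v[s][j] for s, p in enumerate(probs)) for j in range(c)]
        out.append(matvec(ctx, w_o))  # c -> d
    return out

def rand_matrix(rows: int, cols: int, rng: random.Random) -> list[list[float]]:
    return [[rng.gauss(0, 0.1) for _ in range(cols)] for _ in range(rows)]

if __name__ == "__main__":
    rng = random.Random(0)
    d, c, seq_len, k_size = 16, 4, 6, 3
    x = rand_matrix(seq_len, d, rng)
    y = toy_compressed_conv_attention(
        x, rand_matrix(d, c, rng), rand_matrix(d, c, rng), rand_matrix(d, c, rng),
        rand_matrix(c, d, rng), rand_matrix(k_size, c, rng))
    print(len(y), len(y[0]))  # 6 16
```

The convolution mixes a few neighbouring tokens into each query and key cheaply, which is one way to recover some expressiveness lost by compressing the attention width.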
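The article does not describe ZAYA1's router in detail. For orientation, the sketch below shows a generic top-2 MoE router (a single linear layer followed by softmax) together with a top-k variant of the Switch-Transformer-style load-balancing auxiliary loss. This is a standard technique swapped in for illustration, not Zyphra's "linear routing" design; all names and weights are hypothetical.

```python
import math

def softmax(xs: list[float]) -> list[float]:
    m = max(xs)
    es = [math.exp(x - m) for x in xs]
    s = sum(es)
    return [e / s for e in es]

def route_top2(tokens, w_router):
    """Linear router: per token, return (top-2 expert ids, renormalized gates)."""
    n_experts = len(w_router[0])
    assignments, all_probs = [], []
    for x in tokens:
        logits = [sum(x[i] * w_router[i][e] for i in range(len(x))) for e in range(n_experts)]
        probs = softmax(logits)
        top2 = sorted(range(n_experts), key=lambda e: probs[e], reverse=True)[:2]
        norm = probs[top2[0]] + probs[top2[1]]
        assignments.append((top2, [probs[e] / norm for e in top2]))
        all_probs.append(probs)
    return assignments, all_probs

def load_balancing_loss(assignments, all_probs, n_experts):
    """Auxiliary loss n_experts * sum_e f_e * P_e, where f_e is the share of
    routing slots sent to expert e and P_e its mean router probability.
    Equals 1.0 when both are uniform and tends to grow as routing collapses."""
    slots = [0] * n_experts
    for experts, _ in assignments:
        for e in experts:
            slots[e] += 1
    total = sum(slots)
    f = [s / total for s in slots]
    p = [sum(pr[e] for pr in all_probs) / len(all_probs) for e in range(n_experts)]
    return n_experts * sum(fe * pe for fe, pe in zip(f, p))

if __name__ == "__main__":
    w = [[0.3, -0.2, 0.1, 0.0], [-0.1, 0.4, 0.0, 0.2]]  # d=2, 4 experts (toy values)
    toks = [[1.0, 0.5], [0.2, -1.0], [-0.5, 0.3]]
    assign, probs = route_top2(toks, w)
    print(assign[0][0], round(load_balancing_loss(assign, probs, 4), 3))
```

In training, this loss is added to the language-modeling loss with a small coefficient so that gradients push the router away from overloading a few experts.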

Benchmark Results

ZAYA1-Base (the non-instruction-tuned version) matches Qwen3-Base on benchmarks such as MMLU-Redux, GSM8K, MATH, and ScienceQA, and significantly outperforms it on CMATH and OCW-Math, demonstrating its STEM potential. Zyphra said that instruction-tuned and RLHF versions will launch in Q1 2026, with both API access and weight downloads.

AMD stated that this collaboration validates the feasibility of MI300X + ROCm for large-scale MoE training. It plans to replicate the "pure AMD" cluster setup with more cloud providers, aiming to reach cost parity with NVIDIA-based solutions for training MoE models larger than 100B parameters by 2026.