Xiaomi today officially released MiMo-Embodied, its embodied foundation model, and announced that the model will be fully open-sourced. The release marks an important step in Xiaomi's research on general embodied intelligence.

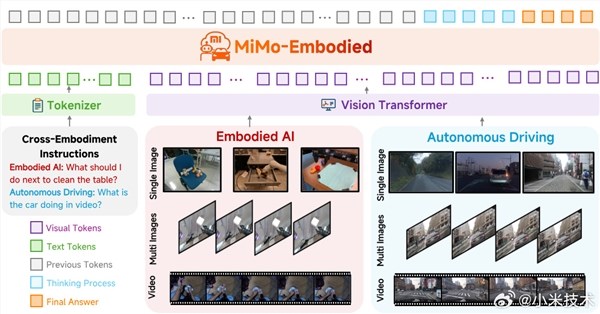

As embodied intelligence moves into home scenarios and autonomous driving is deployed at scale, two questions have become pressing for the industry: how robots and vehicles can share cognition and capabilities, and whether indoor manipulation intelligence and outdoor driving intelligence can reinforce each other. MiMo-Embodied was developed to address these questions. It bridges autonomous driving and embodied intelligence by modeling tasks from both fields in a unified way, moving from "vertical domain-specific" models to "cross-domain capability collaboration."

The MiMo-Embodied model has three core technical highlights. First, it covers capabilities across domains: it supports three core embodied-intelligence tasks (affordance reasoning, task planning, and spatial understanding) and three key autonomous-driving tasks (environmental perception, state prediction, and driving planning), providing a foundation for full-scenario intelligence. Second, the model verifies that knowledge transfers between indoor interaction capabilities and on-road decision-making capabilities and that the two reinforce each other, offering a new approach to cross-scenario integration. Finally, MiMo-Embodied adopts a multi-stage training strategy, "embodied/driving capability learning → CoT reasoning enhancement → RL fine-tuning," which improves the model's deployment reliability in real environments.

In terms of performance, MiMo-Embodied sets a new bar for open-source base models across 29 core benchmarks covering perception, decision-making, and planning, surpassing existing open-source, closed-source, and specialized models. In embodied intelligence, the model achieved SOTA results on 17 benchmarks spanning task planning, affordance prediction, and spatial understanding. In autonomous driving, it performed strongly on 12 benchmarks, with gains across the full chain of environmental perception, state prediction, and driving planning. In the general vision-language domain, MiMo-Embodied also generalizes well: it strengthens general perception and understanding capabilities while achieving significant improvements on multiple key benchmarks.

Open-source repository:

https://huggingface.co/XiaomiMiMo/MiMo-Embodied-7B
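
For readers who want to fetch the released files, the following is a minimal sketch, assuming the third-party `huggingface_hub` package is installed and that the repository ID matches the address above. The helper names `repo_url` and `download_weights` are hypothetical and used only for illustration; the announcement itself does not describe a download or loading procedure.

```python
from typing import Optional

# Repository ID taken from the address above.
REPO_ID = "XiaomiMiMo/MiMo-Embodied-7B"


def repo_url(repo_id: str = REPO_ID) -> str:
    """Return the Hugging Face page URL for a repository ID."""
    return f"https://huggingface.co/{repo_id}"


def download_weights(repo_id: str = REPO_ID, local_dir: Optional[str] = None) -> str:
    """Download all files in the repository and return the local path.

    Assumption: `huggingface_hub` is installed (pip install huggingface_hub).
    The import is kept inside the function so the rest of this sketch
    runs without the package or network access.
    """
    from huggingface_hub import snapshot_download

    return snapshot_download(repo_id=repo_id, local_dir=local_dir)


if __name__ == "__main__":
    print(repo_url())
    # Uncomment to fetch the files (a large download):
    # print(download_weights())
```

This sketch only retrieves the files. How to load and run the model depends on the instructions published in the repository itself.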