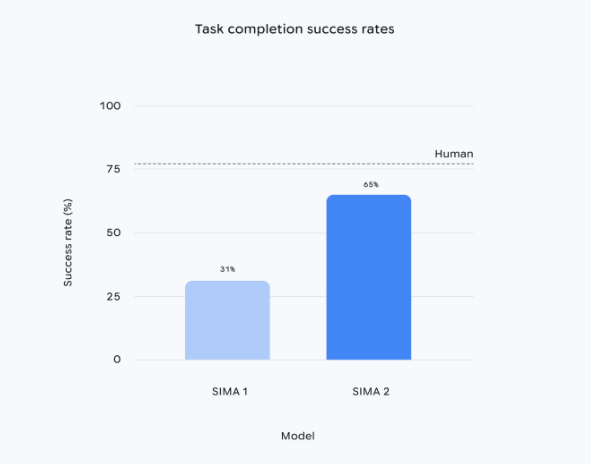

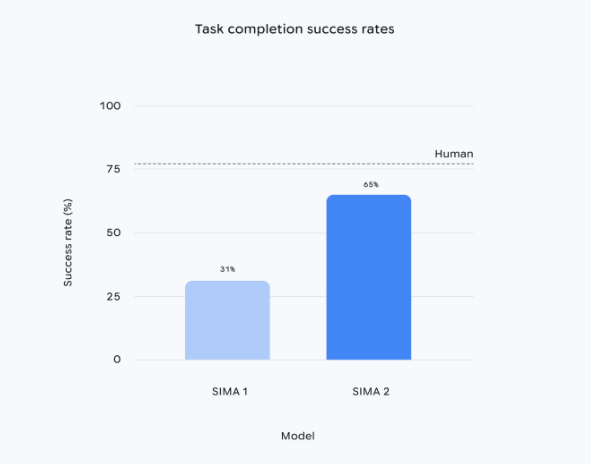

Google DeepMind has launched SIMA 2, a multimodal agent built on the Gemini 2.5 Flash-Lite model. Its task success rate is roughly double that of SIMA 1, it can carry out complex instructions in environments it has never encountered before, and it is capable of self-improvement. The version is currently available as a research preview, intended to validate the high-level world understanding and reasoning abilities required for general-purpose robotics and AGI.

SIMA 2 is still pre-trained on hundreds of hours of gameplay video, but for the first time it introduces a self-generated data loop: after the agent enters a new scene, the system calls a separate Gemini model to generate tasks in bulk, and the agent's attempts at them are scored by an internal reward model. High-scoring trajectories are selected for continued fine-tuning, improving performance without additional human annotation. The research team said this mechanism lets the agent carry out commands such as "go to the red house" or "cut down trees" in test environments like "No Man’s Sky" by reading in-game text, recognizing colors and symbols, and even interpreting emoji combinations.
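
The data loop described above (generate tasks, attempt them, score the attempts, keep the best for fine-tuning) can be illustrated with a small sketch. This is not DeepMind's implementation: every name here (`propose_tasks`, `rollout`, `reward_model`, `self_improvement_round`, the `0.7` threshold) is hypothetical, and the task generator, agent, and reward model are replaced by trivial stand-ins so that the control flow is runnable.

```python
import random
from dataclasses import dataclass
from typing import List


@dataclass
class Trajectory:
    task: str
    actions: List[str]
    score: float = 0.0


def propose_tasks(scene: str, n: int) -> List[str]:
    """Hypothetical stand-in for the task-generating model."""
    templates = ["go to the red house", "cut down trees", "read the sign"]
    return [f"{templates[i % len(templates)]} in {scene}" for i in range(n)]


def rollout(task: str, rng: random.Random) -> Trajectory:
    """Hypothetical stand-in for the agent attempting a task."""
    steps = rng.randint(1, 5)
    return Trajectory(task=task, actions=[f"step_{i}" for i in range(steps)])


def reward_model(traj: Trajectory, rng: random.Random) -> float:
    """Hypothetical stand-in for the internal reward model (random score in [0, 1))."""
    return rng.random()


def self_improvement_round(
    scene: str, n_tasks: int, threshold: float, rng: random.Random
) -> List[Trajectory]:
    """Run one round: propose tasks, attempt each, keep high-scoring attempts."""
    kept = []
    for task in propose_tasks(scene, n_tasks):
        traj = rollout(task, rng)
        traj.score = reward_model(traj, rng)
        if traj.score >= threshold:  # filter: only high-quality trajectories survive
            kept.append(traj)
    return kept


if __name__ == "__main__":
    rng = random.Random(0)
    finetune_set: List[Trajectory] = []
    # Assumed threshold of 0.7; a real system would tune this.
    finetune_set.extend(self_improvement_round("new_scene", 20, 0.7, rng))
    print(f"kept {len(finetune_set)} of 20 trajectories for fine-tuning")
```

The point of the sketch is the filtering step: no human labels enter the loop, so the quality of the fine-tuning set depends entirely on how well the reward model separates good attempts from bad ones.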

In demonstrations, DeepMind paired SIMA 2 with the generative world model Genie, which produced realistic outdoor scenes for the agent. The agent can accurately identify objects such as benches, trees, and butterflies and interact with them. Jane Wang, a senior research scientist, said that this loop of "understanding the scene → inferring the goal → planning actions" is the essential high-level behavioral module needed to transfer capabilities from virtual environments to real robots.

However, SIMA 2 currently focuses on high-level decision-making and does not handle low-level control of hardware such as mechanical joints or wheels. In parallel, DeepMind has trained a robotics foundation model using a different technical approach, and how the two will be integrated remains undecided. The team declined to give an official release date, saying only that it hopes the preview will attract external collaborators to explore feasible paths for transferring virtual agents to physical robots.