The artificial intelligence department of Chinese social media company Weibo has released VibeThinker-1.5B, an open-source large language model (LLM) with 1.5 billion parameters. The model is fine-tuned from Alibaba's Qwen2.5-Math-1.5B and is freely available on Hugging Face, GitHub, and ModelScope under the MIT license, which lets researchers and enterprise developers use it, including for commercial purposes.

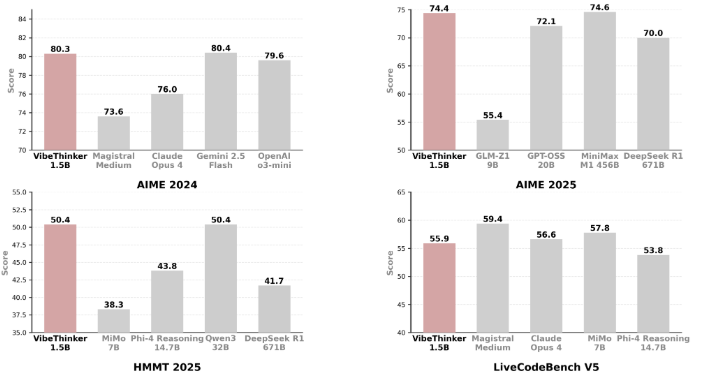

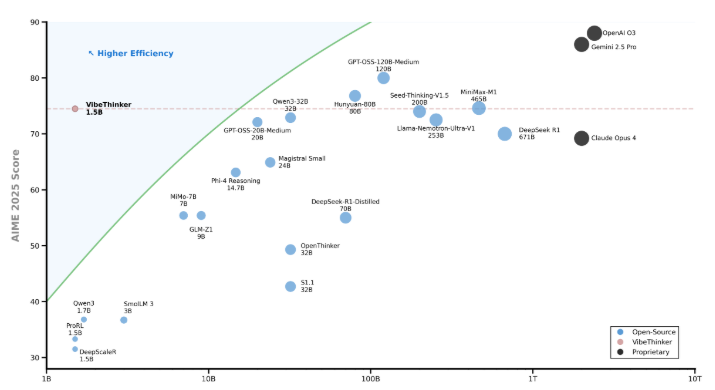

Despite its small size, VibeThinker-1.5B performs exceptionally well on math and coding tasks, achieving industry-leading reasoning performance and even surpassing R1, the 671-billion-parameter model from competitor DeepSeek. It also competes with several larger models, such as Mistral AI's Magistral Medium, Anthropic's Claude Opus 4, and OpenAI's gpt-oss-20B Medium, while requiring significantly less infrastructure and investment.

Notably, post-training VibeThinker-1.5B cost only $7,800 in computing resources, far below the hundreds of thousands or even millions of dollars required for similar or larger-scale models. LLM training is divided into two stages. In the first, pre-training, the model learns the structure of language and general knowledge from a large amount of text data. In the second, post-training, a smaller, high-quality dataset helps the model learn to assist, reason, and align with human expectations.
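To put the figures quoted above in proportion, the short Python sketch below computes the parameter and cost ratios. The parameter counts and the $7,800 figure come from the article; the two comparison budgets ($200,000 and $1,000,000) are illustrative placeholders for "hundreds of thousands or even millions of dollars", not reported numbers, and the function names are hypothetical.

```python
def param_ratio(large_params: float, small_params: float) -> float:
    """How many times more parameters the larger model has."""
    return large_params / small_params


def cost_ratio(other_cost_usd: float, this_cost_usd: float) -> float:
    """How many times more expensive the other training budget is."""
    return other_cost_usd / this_cost_usd


# Figures taken from the article.
R1_PARAMS = 671e9            # DeepSeek R1
VIBETHINKER_PARAMS = 1.5e9   # VibeThinker-1.5B
VIBETHINKER_COST = 7_800     # USD, post-training compute

# ASSUMPTION: placeholder budgets standing in for "hundreds of
# thousands or even millions of dollars"; not reported figures.
ILLUSTRATIVE_BUDGETS = [200_000, 1_000_000]

if __name__ == "__main__":
    ratio = param_ratio(R1_PARAMS, VIBETHINKER_PARAMS)
    print(f"R1 has about {ratio:.0f}x the parameters")
    for budget in ILLUSTRATIVE_BUDGETS:
        times = cost_ratio(budget, VIBETHINKER_COST)
        print(f"${budget:,} is about {times:.0f}x the $7,800 budget")
```

Under these assumptions, R1 is roughly 447 times larger by parameter count, and the placeholder budgets are roughly 26 and 128 times the reported post-training cost.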

VibeThinker-1.5B uses a training framework called the "Spectrum-to-Signal Principle" (SSP), which separates supervised fine-tuning and reinforcement learning into two distinct stages. The first stage, supervised fine-tuning, focuses on diversity, while the second uses reinforcement learning to home in on the best reasoning path. This lets small models explore the reasoning space effectively and amplify the correct signal.
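The intuition behind "diversity first, then amplification" can be illustrated with a toy sketch. This is not Weibo's training code or the actual SSP algorithm: it models a "policy" as a plain probability distribution over four hypothetical solution strategies and uses a simple reward-weighted (exponentiated) update as a stand-in for reinforcement learning. All names, rewards, and hyperparameters are assumptions made for illustration.

```python
import math


def normalize(weights):
    """Scale non-negative weights so they sum to 1."""
    total = sum(weights)
    return [w / total for w in weights]


def entropy(probs):
    """Shannon entropy in nats; higher means a more diverse policy."""
    return -sum(p * math.log(p) for p in probs if p > 0)


def amplify(probs, rewards, lr=1.0, steps=10):
    """Toy stand-in for the RL stage: p_i <- p_i * exp(lr * r_i), renormalized."""
    for _ in range(steps):
        probs = normalize([p * math.exp(lr * r) for p, r in zip(probs, rewards)])
    return probs


# ASSUMPTION: only strategy 2 is rewarded by a hypothetical verifier.
rewards = [0.0, 0.0, 1.0, 0.0]

# "Spectrum": stage 1 leaves probability mass on every strategy.
spectrum = normalize([1.0, 1.0, 1.0, 1.0])
signal = amplify(spectrum, rewards)

# Counter-example: a policy that collapsed early and never tries strategy 2.
collapsed = [0.5, 0.5, 0.0, 0.0]
stuck = amplify(collapsed, rewards)

if __name__ == "__main__":
    print("diverse start   ->", [round(p, 4) for p in signal])
    print("collapsed start ->", [round(p, 4) for p in stuck])
    print("entropy before/after:", entropy(spectrum), entropy(signal))
```

In this toy setting, the diverse starting policy concentrates almost all of its probability on the rewarded strategy, while the collapsed policy cannot recover it, because an update that only reweights existing mass cannot amplify a strategy the policy never samples.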

In benchmark tests across multiple domains, VibeThinker-1.5B outperformed many large open-source and commercial models. Its open-source release challenges conventional assumptions about parameter scale and computing power, showing that small models can achieve excellent performance on specific tasks.

Hugging Face: https://huggingface.co/WeiboAI/VibeThinker-1.5B

Key points:

📊 VibeThinker-1.5B is a 1.5-billion-parameter open-source AI model released by Weibo that performs well and even surpasses some much larger models.

💰 Post-training the model cost only $7,800, far less than the hundreds of thousands or even millions of dollars required by similar models.

🔍 It uses the "Spectrum-to-Signal Principle" training framework, which enables small models to reason efficiently and makes them more competitive.