The Qwen3-4B series has been announced. With its compact size and strong performance, the new model opens up new possibilities for AI deployment on edge devices.

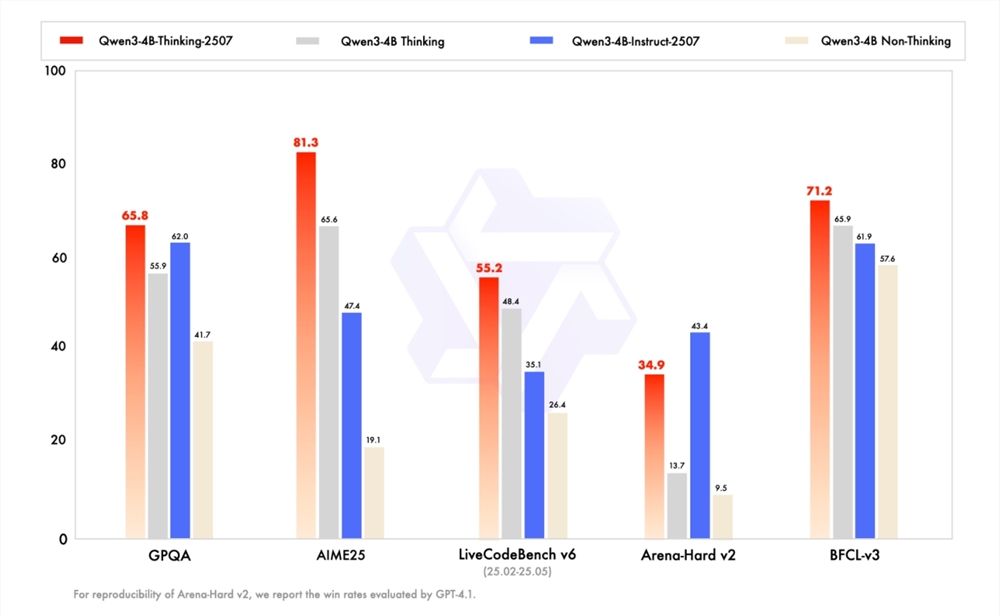

Over the previous two weeks, the development team updated Qwen3-235B-A22B and Qwen3-30B-A3B to "2507" Instruct and Thinking versions. It has now followed up with two smaller models: Qwen3-4B-Instruct-2507 and Qwen3-4B-Thinking-2507. Both show significant performance gains. On non-reasoning tasks, Qwen3-4B-Instruct-2507 outperforms the closed-source GPT-4.1-nano across the board. On reasoning tasks, Qwen3-4B-Thinking-2507 stands out, with reasoning ability comparable to the medium-sized Qwen3-30B-A3B (thinking).

The development team is convinced that small language models (SLMs) are highly valuable for the development of agentic AI. The new "2507" versions of Qwen3-4B combine a small footprint with strong performance, making them well suited for deployment on edge hardware such as mobile phones. The new models are now officially open-sourced on ModelScope and Hugging Face, and many developers have already started trying them out.

Qwen3-4B-Instruct-2507 brings significant gains in general capabilities, surpassing closed-source commercial small models such as GPT-4.1-nano and approaching the performance of the medium-sized Qwen3-30B-A3B (non-thinking). The model also covers more long-tail knowledge across multiple languages and is better aligned with human preferences on subjective and open-ended tasks, so its responses more closely match what users need. In addition, its context length has been extended to 256K tokens, allowing even a small model to handle long texts with ease.

Qwen3-4B-Thinking-2507 also shows major gains in reasoning. On AIME25, a mathematics benchmark, it scores 81.3 with only 4B parameters, a result that rivals the medium-sized Qwen3-30B-A3B (thinking). Its general capabilities have improved significantly as well, and its agent score even exceeds that of the larger Qwen3-30B-A3B (thinking). Like the Instruct model, it supports a 256K-token context, enabling more complex scenarios such as document analysis, long-form content generation, and reasoning across paragraphs.

The release of the Qwen3-4B series broadens the prospects for AI applications on edge devices. We expect to see more innovative applications built on these models in the near future, making people's lives and work more convenient.