Today, the Meta AI Lab released a new large model on the Hugging Face platform, designed specifically to verify and optimize chain-of-thought (CoT) reasoning. The model, named "CoT-Verifier" (provisional name), is built on the Llama 3.1 8B Instruct architecture and uses a TopK transcoder mechanism. It offers a white-box approach that helps developers analyze in depth, and correct, the points where an AI reasoning process goes wrong.

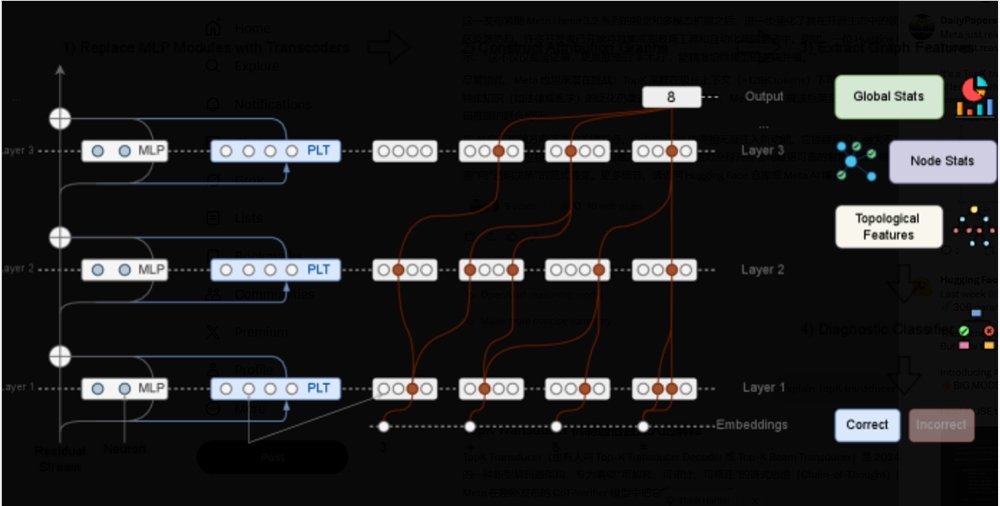

In current artificial intelligence research, CoT verification methods mainly rely either on black-box approaches that examine model outputs or on gray-box analysis of activation signals to predict whether reasoning is correct. Although these methods have some practical value, they offer little insight into the root causes of reasoning failures. To address this issue, the research team introduced the CRV (Circuit-based Reasoning Verification) method, which posits that the attribution graphs of correct and incorrect reasoning steps exhibit significant structural differences. These attribution graphs can be viewed as execution traces of the model's underlying reasoning circuits.

The study shows that the attribution graphs of correct reasoning steps differ significantly in structure from those of incorrect steps, which provides new evidence for predicting reasoning errors. By training a classifier on these structural features, the researchers demonstrated that the structural signatures of errors are highly predictive, supporting the feasibility of evaluating the correctness of reasoning directly from the computational graph.
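To make the idea concrete, here is a minimal sketch of classifying reasoning steps from the structure of their attribution graphs. It is an illustration only, not the research team's pipeline: the three features (edge density, mean absolute edge weight, in-degree concentration), the toy graphs, their labels, and every function name are assumptions invented for this example, and a small hand-written logistic regression stands in for whatever classifier the study actually used.

```python
import math

def graph_features(num_nodes, edges):
    """Summarize one attribution graph; edges are (src, dst, weight) tuples.

    The three features are hypothetical choices made for this sketch.
    """
    n_edges = len(edges)
    density = n_edges / (num_nodes * (num_nodes - 1)) if num_nodes > 1 else 0.0
    mean_abs_w = sum(abs(w) for _, _, w in edges) / n_edges if n_edges else 0.0
    in_deg = [0] * num_nodes
    for _, dst, _ in edges:
        in_deg[dst] += 1
    # Share of all edges that end at the single most-targeted node.
    in_concentration = max(in_deg) / n_edges if n_edges else 0.0
    return [density, mean_abs_w, in_concentration]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def predict_error(w, b, x):
    """Estimated probability that the step behind feature vector x is wrong."""
    return sigmoid(b + sum(wj * xj for wj, xj in zip(w, x)))

def train_logreg(X, y, lr=0.5, epochs=2000):
    """Fit logistic regression with plain batch gradient descent."""
    w, b, n = [0.0] * len(X[0]), 0.0, len(X)
    for _ in range(epochs):
        grad_w, grad_b = [0.0] * len(w), 0.0
        for xi, yi in zip(X, y):
            err = predict_error(w, b, xi) - yi
            for j, xj in enumerate(xi):
                grad_w[j] += err * xj
            grad_b += err
        w = [wj - lr * g / n for wj, g in zip(w, grad_w)]
        b -= lr * grad_b / n
    return w, b

# Made-up toy data: (num_nodes, edges, label), label 1 = incorrect step.
TOY_STEPS = [
    (4, [(0, 1, 0.9), (0, 2, 0.8), (1, 3, 0.7), (2, 3, 0.9), (0, 3, 0.6)], 0),
    (4, [(0, 1, 0.8), (1, 2, 0.9), (2, 3, 0.8), (0, 2, 0.7)], 0),
    (4, [(0, 3, 0.2), (1, 3, 0.1)], 1),
    (4, [(0, 1, 0.3), (2, 3, 0.2)], 1),
]

X = [graph_features(n, e) for n, e, _ in TOY_STEPS]
y = [label for _, _, label in TOY_STEPS]
w, b = train_logreg(X, y)
for x, label in zip(X, y):
    print(label, round(predict_error(w, b, x), 3))
```

In this toy setup the incorrect steps were deliberately given sparse, weakly weighted graphs so that the classes are separable. Real attribution graphs are far larger, and which structural features actually carry the signal is an empirical question the article does not detail.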

Moreover, the study found that these structural features are highly domain-specific: different types of reasoning failures reflect distinct computational patterns, which opens new directions for future research. Notably, by analyzing the attribution graphs in depth, the research team was able to apply targeted interventions to individual model features and thereby correct some reasoning errors.
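The article does not say how these interventions were carried out. As a generic illustration of what "intervening on a feature" can mean in a TopK sparse feature layer, the sketch below keeps the k strongest feature activations, decodes them back into an output vector, and then repeats the decoding with one feature clamped to zero. The activations, decoder matrix, and function names are all hypothetical.

```python
def top_k(acts, k):
    """Keep the k largest activations and zero out the rest."""
    keep = set(sorted(range(len(acts)), key=lambda i: acts[i], reverse=True)[:k])
    return [a if i in keep else 0.0 for i, a in enumerate(acts)]

def decode(feature_acts, decoder):
    """Reconstruct the output as a weighted sum of per-feature decoder rows."""
    dim = len(decoder[0])
    return [
        sum(f * decoder[i][d] for i, f in enumerate(feature_acts))
        for d in range(dim)
    ]

def intervene(feature_acts, feature_idx, value=0.0):
    """Return a copy of the activations with one feature clamped to `value`."""
    patched = list(feature_acts)
    patched[feature_idx] = value
    return patched

# Hypothetical pre-activations for four features and a 4x2 decoder matrix.
pre_acts = [0.2, 1.5, 0.9, 0.1]
decoder = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.0, 0.0]]

sparse = top_k(pre_acts, k=2)                  # only features 1 and 2 survive
original_out = decode(sparse, decoder)
patched_out = decode(intervene(sparse, 1), decoder)  # suppress feature 1

print(sparse, original_out, patched_out)
```

Comparing `original_out` with `patched_out` shows how much a single feature contributes to the layer's output. In a real model, the patched output would be fed forward to check whether the downstream reasoning step changes.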

This research provides a deeper causal understanding of the reasoning process in large language models, marking an important step from simple error detection toward a more comprehensive understanding of the model. The researchers hope that careful examination of the model's computational process will help improve the reasoning capabilities of LLMs and lay a theoretical foundation for more complex artificial intelligence systems.