Amazon Web Services (AWS) recently launched a new feature that lets users deploy the open-source GPT-OSS models through Amazon Bedrock Custom Model Import.

This new feature supports the GPT-OSS variants with 20 billion and 120 billion parameters, helping enterprises migrate existing applications to AWS while maintaining API compatibility. Users only need to upload the model files to Amazon S3 and then start the import from the Amazon Bedrock console. AWS automatically handles GPU provisioning, inference server setup, and on-demand auto-scaling, so users can focus on application development.
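The same upload-then-import flow can also be scripted. The sketch below is a minimal illustration using boto3, not an official AWS procedure: the helper names (`plan_uploads`, `build_import_request`, `upload_and_import`) are hypothetical, and the bucket, prefix, job name, and IAM role ARN are placeholders the caller must supply.

```python
from pathlib import Path


def plan_uploads(model_dir, prefix):
    """Map each local model file to the S3 key it would be uploaded under."""
    root = Path(model_dir)
    return {
        str(path): f"{prefix.rstrip('/')}/{path.relative_to(root).as_posix()}"
        for path in sorted(root.rglob("*"))
        if path.is_file()
    }


def build_import_request(job_name, model_name, role_arn, bucket, prefix):
    """Build the keyword arguments for Bedrock's CreateModelImportJob call."""
    return {
        "jobName": job_name,
        "importedModelName": model_name,
        "roleArn": role_arn,  # role that Bedrock assumes to read the bucket
        "modelDataSource": {
            "s3DataSource": {"s3Uri": f"s3://{bucket}/{prefix.rstrip('/')}/"}
        },
    }


def upload_and_import(model_dir, bucket, prefix, job_name, model_name,
                      role_arn, region):
    """Upload the files, then start the import job (needs AWS credentials)."""
    import boto3  # imported lazily so the helpers above work without it

    s3 = boto3.client("s3", region_name=region)
    for local_path, key in plan_uploads(model_dir, prefix).items():
        s3.upload_file(local_path, bucket, key)

    bedrock = boto3.client("bedrock", region_name=region)
    request = build_import_request(job_name, model_name, role_arn, bucket, prefix)
    return bedrock.create_model_import_job(**request)["jobArn"]
```

Keeping the request construction separate from the network calls makes it possible to inspect what would be sent before any files are uploaded.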

The GPT-OSS models are among the first open-source language models introduced by OpenAI and are suited to tasks such as reasoning and tool use. Users can choose the variant that fits their needs: GPT-OSS-20B is ideal where speed and efficiency are critical, while GPT-OSS-120B is better suited to complex reasoning tasks. Both models use a mixture-of-experts (MoE) architecture, which activates only the most relevant subset of model components (the experts) for each input, so inference costs less than running the full model.
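To make the mixture-of-experts idea concrete, here is a toy sketch of top-k routing in plain Python. It illustrates the general technique only and is not the GPT-OSS implementation: the function names, the scalar "experts", and the choice of `k=2` are all assumptions made for the example.

```python
import math


def route_top_k(router_logits, k):
    """Return (expert_index, weight) pairs for the k highest-scoring experts."""
    # Pick the k experts with the largest router logits.
    top = sorted(range(len(router_logits)),
                 key=lambda i: router_logits[i], reverse=True)[:k]
    # Softmax over the selected logits only, so the weights sum to 1.
    peak = max(router_logits[i] for i in top)
    exps = [math.exp(router_logits[i] - peak) for i in top]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(top, exps)]


def moe_forward(x, experts, router_logits, k=2):
    """Run only the selected experts and blend their outputs by weight."""
    return sum(w * experts[i](x) for i, w in route_top_k(router_logits, k))


# Four toy experts; only two of them run for this input.
experts = [lambda x: x, lambda x: 2 * x, lambda x: 3 * x, lambda x: 4 * x]
output = moe_forward(1.0, experts, router_logits=[0.0, 5.0, 0.0, 5.0])
```

In a real model the router logits come from a learned layer and each expert is a feed-forward network, but the principle is the same: the unselected experts are never evaluated.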

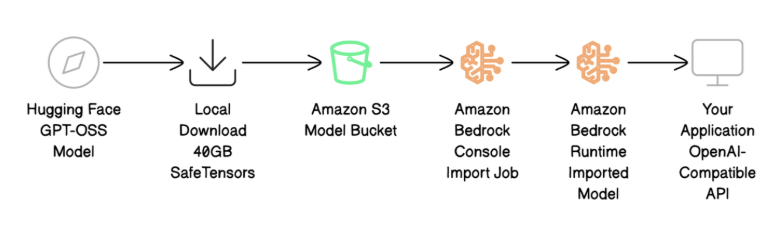

The deployment process has four main steps: downloading and preparing the model files, uploading them to Amazon S3, importing the model with Amazon Bedrock, and testing it through an OpenAI-compatible API. Before starting, users need an AWS account with appropriate permissions and an S3 bucket in the target region. Once the import completes, users can test the model with the familiar OpenAI chat completion request format to confirm it runs correctly. Migrating an existing application requires minimal code changes: only the call that invokes the model changes, while the message structure stays the same.
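The testing step might look like the following sketch, which sends an OpenAI-style chat payload through the Bedrock runtime's `invoke_model` call. The helper names are hypothetical, the model ARN is a placeholder returned by the import job, and the exact payload fields accepted (for example `max_completion_tokens`) are an assumption based on the OpenAI chat completion format rather than something the article specifies.

```python
import json


def build_chat_body(messages, max_tokens=256, temperature=0.7):
    """Serialize an OpenAI-style chat completion request body."""
    return json.dumps({
        "messages": messages,  # same structure an OpenAI client would send
        "max_completion_tokens": max_tokens,  # assumed field name
        "temperature": temperature,
    })


def invoke_imported_model(model_arn, messages, region):
    """Send the chat request to an imported model (needs AWS credentials)."""
    import boto3  # imported lazily so build_chat_body works without it

    runtime = boto3.client("bedrock-runtime", region_name=region)
    response = runtime.invoke_model(
        modelId=model_arn,
        body=build_chat_body(messages),
        contentType="application/json",
        accept="application/json",
    )
    # Return the parsed JSON as-is; its exact shape is not assumed here.
    return json.loads(response["body"].read())


messages = [
    {"role": "system", "content": "You are a helpful assistant."},
    {"role": "user", "content": "Summarize mixture-of-experts in one sentence."},
]
body = build_chat_body(messages)
```

The `messages` list is what an existing OpenAI-based application already builds; in this sketch only the function that sends it differs.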

AWS also recommends following best practices when using the feature, such as validating model files and configuring security settings, to ensure a smooth deployment. AWS will continue to expand regional support for Amazon Bedrock to meet the needs of more users.
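As one illustration of file validation, the sketch below checks a local model directory before upload. The list of required files is an assumption based on common Hugging Face-style model layouts, not a list published in the article, and `validate_model_dir` is a hypothetical helper.

```python
from pathlib import Path

# Assumed file names; adjust to whatever the model distribution actually ships.
REQUIRED_FILES = ("config.json", "tokenizer.json")


def validate_model_dir(model_dir):
    """Return a list of problems found; an empty list means the checks passed."""
    root = Path(model_dir)
    problems = [
        f"missing {name}" for name in REQUIRED_FILES
        if not (root / name).is_file()
    ]
    weights = sorted(root.glob("*.safetensors"))
    if not weights:
        problems.append("no .safetensors weight files found")
    # A zero-byte weight file usually indicates an interrupted download.
    problems += [f"empty file: {p.name}" for p in weights if p.stat().st_size == 0]
    return problems
```

Running a check like this before uploading catches incomplete downloads early, before an import job is started.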

Key Points:

🌟 AWS introduced the Amazon Bedrock Custom Model Import feature, enabling easy deployment of GPT-OSS models.

💡 Users just need to upload model files, and AWS will automatically handle infrastructure configuration and scaling.

🔄 Migrating to the AWS platform is simple, with API compatibility ensuring a seamless transition for existing applications.