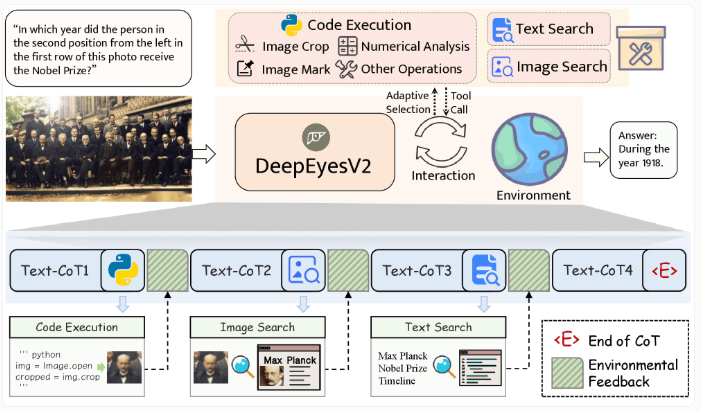

Researchers in China have released DeepEyesV2, a multimodal artificial intelligence model that can analyze images, execute code, and run web searches. Rather than relying only on knowledge acquired during training, DeepEyesV2 calls external tools as needed, and in many cases this lets it outperform larger models.

In early experiments, the research team found that reinforcement learning alone was not enough to make the model use tools reliably on multimodal tasks. The model initially tried to write Python code for image analysis but often produced faulty snippets, and as training progressed it began skipping tools altogether.
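One common way to keep faulty model-written code from derailing an episode is to run it, catch any exception, and return the error to the model as an observation it can react to. The sketch below illustrates that idea only; the helper `run_generated_code` and its return format are hypothetical and are not taken from the DeepEyesV2 code. Note that `exec()` is not a sandbox.

```python
import contextlib
import io
import traceback


def run_generated_code(source: str) -> dict:
    """Execute a model-written snippet and return an observation dict.

    Hypothetical helper. On success, "output" holds whatever the snippet
    printed; on failure, it holds the final "ExceptionType: message" line
    so the error can be fed back to the model. exec() is NOT a sandbox:
    real systems run untrusted code in an isolated process or container.
    """
    buffer = io.StringIO()
    try:
        with contextlib.redirect_stdout(buffer):
            exec(source, {})  # fresh globals; exec inserts __builtins__ itself
        return {"ok": True, "output": buffer.getvalue()}
    except Exception:
        # Keep only the last traceback line, e.g. "NameError: ...".
        last_line = traceback.format_exc().strip().splitlines()[-1]
        return {"ok": False, "output": last_line}


if __name__ == "__main__":
    print(run_generated_code("print(sum([3, 4]))"))  # {'ok': True, 'output': '7\n'}
    print(run_generated_code("print(undefined_name)"))  # ok is False, output starts with "NameError"
```

Returning the error text instead of raising lets a training or inference loop keep going and gives the model a chance to correct its code on the next turn.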

To address this, the team developed a two-stage training process. In the first stage, the model learns to combine image understanding with tool use; in the second, reinforcement learning refines these behaviors. By having leading models generate high-quality examples, the researchers ensured that the tool-use trajectories in the training data were accurate and clear.
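The article does not say how the teacher-generated examples were checked. As a minimal sketch under an assumed criterion, a curation filter might keep a trajectory only when its final answer matches the reference and every tool call in it ran without error. The `Trajectory` record, the `keep_for_cold_start` function, and the `require_tool` flag are all hypothetical illustrations, not the authors' pipeline.

```python
from dataclasses import dataclass, field


@dataclass
class Trajectory:
    """Hypothetical record of one teacher-generated training example."""
    question: str
    steps: list[str]                                   # reasoning / tool-use steps, in order
    tool_calls_succeeded: list[bool] = field(default_factory=list)
    final_answer: str = ""


def keep_for_cold_start(traj: Trajectory, reference_answer: str,
                        require_tool: bool = False) -> bool:
    """Assumed filter: keep a trajectory only if its answer matches the
    reference (case- and whitespace-insensitive) and every tool call in it
    executed cleanly."""
    if require_tool and not traj.tool_calls_succeeded:
        return False  # optionally reject examples that never call a tool
    answer_ok = traj.final_answer.strip().lower() == reference_answer.strip().lower()
    return answer_ok and all(traj.tool_calls_succeeded)


if __name__ == "__main__":
    candidates = [
        Trajectory("How many cars?", ["crop region", "count"], [True, True], "3"),
        Trajectory("How many cars?", ["crop region"], [False], "3"),
        Trajectory("How many cars?", ["guess"], [], "4"),
    ]
    kept = [t for t in candidates if keep_for_cold_start(t, "3")]
    print(len(kept))  # 1
```

Because `all([])` is `True`, a trajectory with no tool calls passes the tool check by default; setting `require_tool=True` is one way to insist that training examples actually demonstrate tool use.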

DeepEyesV2 handles multimodal tasks with three categories of tools: code execution for image processing and numerical analysis, image search for retrieving similar content, and text search for context that is not visible in the image. Depending on the query, the model combines image manipulation, Python execution, and image or text search.
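To make the three tool categories concrete, the sketch below shows a minimal dispatcher that routes a model's tool call to a handler and returns the result as an observation. The JSON call format, the tool names (`code`, `image_search`, `text_search`), and all handlers are assumptions for illustration; the two search tools are stubs rather than real search APIs, and DeepEyesV2's actual interface may differ.

```python
import json
from typing import Callable


def run_code(args: dict) -> str:
    # Stand-in for the code-execution tool: evaluates one arithmetic
    # expression with builtins disabled. Not a real sandbox.
    return str(eval(args["expr"], {"__builtins__": {}}, {}))


def image_search(args: dict) -> str:
    # Hypothetical stub; a real tool would query a reverse-image search service.
    return f"[similar images for {args['image_id']}]"


def text_search(args: dict) -> str:
    # Hypothetical stub; a real tool would call a web search API.
    return f"[web results for {args['query']!r}]"


# Registry mapping the tool name the model emits to its handler.
TOOLS: dict[str, Callable[[dict], str]] = {
    "code": run_code,
    "image_search": image_search,
    "text_search": text_search,
}


def dispatch(tool_call: str) -> str:
    """Parse one JSON tool call and return the tool's output as an observation."""
    call = json.loads(tool_call)
    handler = TOOLS.get(call.get("tool"))
    if handler is None:
        return f"error: unknown tool {call.get('tool')!r}"
    return handler(call.get("args", {}))


if __name__ == "__main__":
    print(dispatch('{"tool": "code", "args": {"expr": "12 * 7"}}'))  # 84
    print(dispatch('{"tool": "text_search", "args": {"query": "DeepEyesV2"}}'))
    print(dispatch('{"tool": "map", "args": {}}'))  # error: unknown tool 'map'
```

A registry keyed by tool name keeps the routing logic independent of any single tool, so adding or swapping a tool only means registering another handler.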

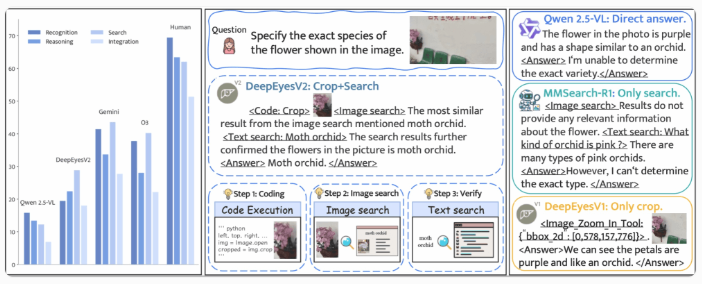

To evaluate this approach, the team created RealX-Bench, a benchmark that tests how well a model coordinates visual understanding, web search, and reasoning. Even the strongest proprietary models reached only 46% accuracy, compared with 70% for humans, and current models performed especially poorly on tasks that require all three skills at once.

DeepEyesV2 performed well across multiple benchmarks, reaching 52.7% accuracy on mathematical reasoning tasks and 63.7% on search-driven tasks. These results suggest that carefully designed tool use can offset some of the limitations of smaller models.

DeepEyesV2 is available on Hugging Face and GitHub under the Apache License 2.0, which permits commercial use.

Paper: https://arxiv.org/abs/2511.05271

Key Points:

🌟 DeepEyesV2 improves performance on multimodal tasks by calling external tools, in many cases outperforming larger models.

🔧 It uses a two-stage training process: first learning to combine image understanding with tool use, then refining that behavior with reinforcement learning.

📈 It performs well on multiple benchmarks, showing the potential of smaller models that use tools effectively.