Mooley's AI research team recently presented new work at AAAI 2026, one of the top-tier international academic conferences in artificial intelligence, proposing a framework called URPO (Unified Reward & Policy Optimization). URPO aims to simplify the training pipeline of large language models and push past their current performance bottlenecks, offering a new technical direction for the field.

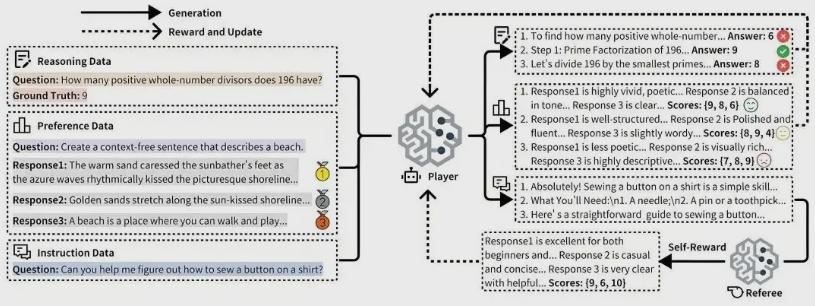

In the paper, titled "URPO: A Unified Reward & Policy Optimization Framework for Large Language Models," the team rethinks the conventional approach to training large models. URPO's distinguishing feature is that it merges two roles, "instruction following" (the policy) and "reward evaluation" (the reward model), into a single model, so that both capabilities are optimized jointly during training instead of relying on a separately trained reward model. In other words, the model not only follows instructions but also scores its own outputs, which improves both the efficiency and the effectiveness of training.
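One simple way to picture a single model playing both roles is to prompt the same weights with two different templates: one asking for an answer, one asking for scores on a list of candidate answers. The sketch below assumes this prompt-based setup; the templates and the helper names (`policy_prompt`, `judge_prompt`, `parse_scores`) are hypothetical illustrations, not URPO's actual prompts or code.

```python
import re
from typing import List

# Hypothetical prompt templates; the article does not give URPO's actual prompts.
POLICY_TEMPLATE = "Instruction: {instruction}\nResponse:"
JUDGE_TEMPLATE = (
    "Instruction: {instruction}\n{candidates}\n"
    "Rate each response from 1 to 10, one line per response, as 'Response k: score'."
)


def policy_prompt(instruction: str) -> str:
    # Role 1: the model answers the instruction.
    return POLICY_TEMPLATE.format(instruction=instruction)


def judge_prompt(instruction: str, candidates: List[str]) -> str:
    # Role 2: the same model grades a numbered list of candidate answers.
    listed = "\n".join(f"Response {i + 1}: {c}" for i, c in enumerate(candidates))
    return JUDGE_TEMPLATE.format(instruction=instruction, candidates=listed)


def parse_scores(judge_output: str, n: int) -> List[float]:
    # Extract 'Response k: score' lines; candidates the judge skipped default to 0.0.
    scores = [0.0] * n
    for m in re.finditer(r"Response\s+(\d+)\s*:\s*(\d+(?:\.\d+)?)", judge_output):
        k = int(m.group(1)) - 1
        if 0 <= k < n:
            scores[k] = float(m.group(2))
    return scores


print(judge_prompt("Name a prime number.", ["7", "9"]))
print(parse_scores("Response 1: 9\nResponse 2: 2", 2))  # [9.0, 2.0]
```

Defaulting unscored candidates to 0.0 is an arbitrary choice made for this sketch; a real system might instead discard a malformed judgment.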

URPO rests on three key techniques. First, unified data formatting: the team converts heterogeneous data (preference data, verifiable reasoning data, and open-ended instruction data) into a single signal format suitable for GRPO (Group Relative Policy Optimization) training. Second, a self-reward loop: after generating multiple candidate answers, the model scores them itself, and these scores serve as the reward signal for GRPO training, forming an efficient self-improvement cycle. Finally, a co-evolution mechanism: by training on all three data types together, the model strengthens its generation and evaluation capabilities at the same time.
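The three techniques can be sketched together in a few lines. This is a minimal illustration under stated assumptions, not the paper's implementation: the `UnifiedSample` record and converter functions are hypothetical; preference pairs are assumed to become a judging task whose reward is 1 when the model's verdict matches the human label; and `toy_self_score` is a placeholder standing in for the model's own judging pass. Each completion's advantage is its reward minus the group mean, divided by the group standard deviation, as in GRPO.

```python
from dataclasses import dataclass
from statistics import mean, pstdev
from typing import Callable, List, Optional


@dataclass
class UnifiedSample:
    """Hypothetical common record that all three data types are mapped into."""
    prompt: str
    kind: str                 # "preference", "verifiable", or "open_ended"
    reference: Optional[str]  # gold label or answer; None when no label exists


def from_preference(question: str, resp_a: str, resp_b: str, preferred: str) -> UnifiedSample:
    # Assumption: a preference pair becomes a judging task whose gold label is "A" or "B".
    prompt = (f"Question: {question}\nResponse A: {resp_a}\nResponse B: {resp_b}\n"
              "Which response is better? Answer A or B.")
    return UnifiedSample(prompt, "preference", preferred)


def from_verifiable(problem: str, answer: str) -> UnifiedSample:
    # Reasoning data with a checkable final answer.
    return UnifiedSample(problem, "verifiable", answer)


def from_open_ended(instruction: str) -> UnifiedSample:
    # Open-ended instructions have no gold answer, so the model must judge itself.
    return UnifiedSample(instruction, "open_ended", None)


def reward(sample: UnifiedSample, completion: str,
           self_score: Callable[[str, str], float]) -> float:
    if sample.reference is not None:
        # Preference and verifiable samples: 1.0 on exact match with the label, else 0.0.
        return 1.0 if completion.strip() == sample.reference else 0.0
    # Open-ended samples: the same model scores its own output (self-reward).
    return self_score(sample.prompt, completion)


def group_advantages(rewards: List[float], eps: float = 1e-6) -> List[float]:
    # GRPO-style: normalize each reward against its own group's mean and std.
    mu, sigma = mean(rewards), pstdev(rewards)
    return [(r - mu) / (sigma + eps) for r in rewards]


def toy_self_score(prompt: str, completion: str) -> float:
    # Placeholder for the model's own judging pass; NOT URPO's scoring method.
    return min(len(completion.split()) / 10.0, 1.0)


# A mixed batch: all three data types are processed together in one update.
batch = [
    (from_verifiable("What is 6 * 7?", "42"), ["42", "41", "42", "7"]),
    (from_preference("Capital of France?", "Paris", "Lyon", "A"), ["A", "B", "A", "A"]),
    (from_open_ended("Describe the sea."),
     ["Blue.", "The sea is vast and deep and full of life.",
      "Water.", "Waves roll in under a grey sky."]),
]
for sample, group in batch:
    rewards = [reward(sample, c, toy_self_score) for c in group]
    print(sample.kind, [round(a, 2) for a in group_advantages(rewards)])
```

The small `eps` keeps the advantages finite when every completion in a group receives the same reward; in that case all advantages are zero and the group contributes no learning signal.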

Experiments show that URPO, applied to the Qwen2.5-7B model, outperforms conventional baselines that rely on a separate reward model across several metrics. It scored 44.84 on the AlpacaEval instruction-following leaderboard, and its average score on composite reasoning benchmarks rose from 32.66 to 35.66. The same model also reached 85.15 on RewardBench, a benchmark for evaluating reward models, exceeding the 83.55 scored by the specialized reward model. These results indicate that a single unified model can improve its generation quality while also evaluating responses better than a dedicated reward model.
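For scale, the reported gains work out as follows (simple arithmetic on the figures above; no additional results are implied):

$$
\begin{aligned}
\text{Reasoning average: } & 35.66 - 32.66 = 3.00 \text{ points}, \quad \tfrac{3.00}{32.66} \approx 9.2\% \text{ relative} \\
\text{RewardBench: } & 85.15 - 83.55 = 1.60 \text{ points}, \quad \tfrac{1.60}{83.55} \approx 1.9\% \text{ relative}
\end{aligned}
$$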

Notably, Mooley already runs URPO efficiently on its self-developed computing cards and has completed deep integration with VERL, a mainstream reinforcement learning framework. This work strengthens Mooley's position in large-model training and suggests a direction for future AI development.