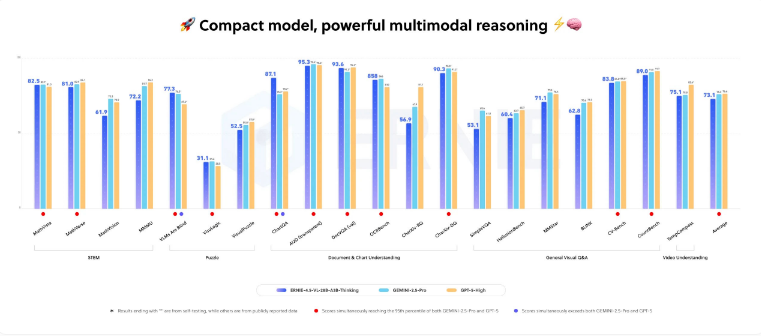

Baidu has released its latest multimodal artificial intelligence model, ERNIE-4.5-VL-28B-A3B-Thinking, which integrates images directly into its reasoning process. According to Baidu, the model performs well on several multimodal benchmarks and in some cases surpasses leading commercial models such as Google's Gemini 2.5 Pro and OpenAI's GPT-5 High.

Lightweight Yet High-Performing

Although the model has 28 billion parameters in total, its routing (mixture-of-experts) architecture activates only about 3 billion of them during inference. Thanks to this design, ERNIE-4.5-VL-28B-A3B-Thinking can run on a single GPU with 80 GB of memory, such as the Nvidia A100. Baidu has released the model under the Apache 2.0 license, which permits free commercial use. Baidu's performance claims, however, have not yet been independently verified.
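To make the "total versus active" distinction concrete, here is a minimal Python sketch of generic top-k mixture-of-experts routing. It is not Baidu's implementation: the eight toy experts, `k=2`, and the router scores are hypothetical. Only the selected experts run for a given input, which is why compute tracks the active parameters, yet all 28 billion weights must still be held in memory. At 2 bytes per parameter (bf16) that is about 56 GB for the weights alone, before activations and caches are counted.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def top_k_route(router_logits, k):
    """Pick the k highest-scoring experts and renormalize their weights to sum to 1."""
    chosen = sorted(range(len(router_logits)),
                    key=lambda i: router_logits[i], reverse=True)[:k]
    weights = softmax([router_logits[i] for i in chosen])
    return list(zip(chosen, weights))


def moe_forward(x, experts, router_logits, k):
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    out = [0.0] * len(x)
    for idx, w in top_k_route(router_logits, k):
        y = experts[idx](x)
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


def weight_memory_gb(num_params, bytes_per_param=2):
    """Memory for the weights alone (bf16/fp16 = 2 bytes per parameter)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    calls = []

    def make_expert(i):
        # Hypothetical toy expert: scales its input and records that it ran.
        def expert(x):
            calls.append(i)
            return [v * (i + 1) for v in x]
        return expert

    experts = [make_expert(i) for i in range(8)]
    logits = [0.1, 2.0, -1.0, 0.5, 1.5, 0.0, -0.3, 0.2]
    moe_forward([1.0, 2.0], experts, logits, k=2)
    print(sorted(calls))            # [1, 4]  (only 2 of 8 experts executed)
    print(weight_memory_gb(28e9))   # 56.0
    print(round(3e9 / 28e9, 3))     # 0.107  (fraction of parameters active)
```

In a real model the router scores come from a learned layer and routing happens per token in every MoE layer; the sketch only shows why per-token compute scales with the active parameters while memory scales with the total.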

Core Capabilities: "Image Thinking" and Precise Localization

The model's standout feature is its **"Image Thinking"** capability, which lets it manipulate images during reasoning to bring key details into focus. For example, it can automatically zoom in on a blue sign in an image and accurately read the text on it, in effect using an image-editing tool internally.
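Baidu does not describe how the zoom step is implemented, so the following is only a hypothetical Python sketch of what a crop-and-enlarge tool call could do: cut out a region of interest and upscale it so that small text occupies more pixels. The `zoom` function, the `(left, top, right, bottom)` box convention, and nearest-neighbor upscaling are all assumptions chosen for simplicity; images are plain lists of pixel rows to keep the example dependency-free.

```python
def crop(image, box):
    """Return the sub-image inside box = (left, top, right, bottom); right/bottom are exclusive."""
    left, top, right, bottom = box
    return [row[left:right] for row in image[top:bottom]]


def upscale_nearest(image, scale):
    """Enlarge by an integer factor, repeating each pixel scale x scale times."""
    out = []
    for row in image:
        wide = [px for px in row for _ in range(scale)]
        out.extend(list(wide) for _ in range(scale))
    return out


def zoom(image, box, scale=2):
    """Hypothetical zoom-in tool: crop the region of interest, then enlarge it."""
    return upscale_nearest(crop(image, box), scale)


if __name__ == "__main__":
    # 4x4 toy image whose pixel values are numbered 0..15 row by row.
    img = [[r * 4 + c for c in range(4)] for r in range(4)]
    for row in zoom(img, (1, 1, 3, 3), scale=2):
        print(row)
    # [5, 5, 6, 6]
    # [5, 5, 6, 6]
    # [9, 9, 10, 10]
    # [9, 9, 10, 10]
```

A production system would more likely use a proper image library and smoother interpolation; nearest-neighbor is used here only because it is easy to verify by hand.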

Other tests have demonstrated its strong multimodal capabilities:

It can precisely locate people in images and return their coordinates.

It can solve complex mathematical problems by analyzing circuit diagrams.

It can recommend the best time for sightseeing based on chart data.

For video input, it can extract subtitles and match scenes with specific timestamps.

It can call external tools, such as web image search, to identify unfamiliar organisms.