NVIDIA's research team recently released OmniVinci, a new multimodal understanding model. It performs strongly across several multimodal understanding benchmarks, surpassing current top models by up to 19.05 points. More impressively, OmniVinci was trained on only 0.2 trillion tokens, one-sixth of the 1.2 trillion used by its competitors, which amounts to a sixfold gain in data efficiency.

The core goal of OmniVinci is to build an artificial intelligence system that understands visual, audio, and text information at the same time, so that machines can perceive and make sense of a complex world through multiple senses, as humans do. To reach this goal, the research team did not simply scale up the data. Instead, it improved both performance and efficiency through innovative network architectures and data curation strategies.

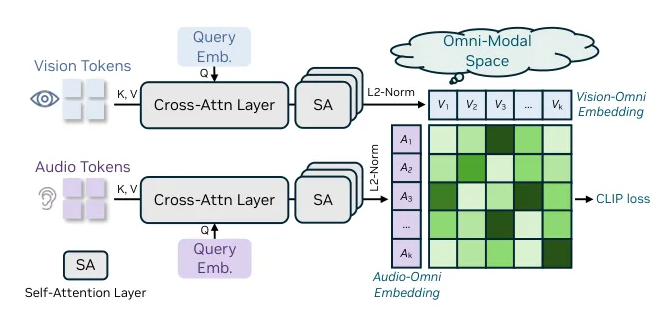

In terms of design, OmniVinci is built around an omni-modal latent space that integrates information from different senses to support cross-modal understanding and reasoning. The research team found that different modalities reinforce each other in both perception and reasoning, a finding that offers direction for building multimodal AI systems.

OmniVinci's architecture supports compositional cross-modal understanding, integrating heterogeneous inputs such as images, video, audio, and text. Through a unified omni-modal alignment mechanism, the model fuses embeddings from the different modalities into a shared latent space, which is then fed into a large language model (LLM). The mechanism rests on three key techniques: the OmniAlignNet module aligns visual and audio information, while temporal embedding grouping and constrained rotary time embedding strengthen the model's understanding of temporal information.
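To make the idea of temporal embedding grouping more concrete, here is a minimal sketch. It is not OmniVinci's implementation: the function name `group_by_time`, the fixed-size `window` parameter, and the string labels standing in for embedding vectors are all illustrative assumptions. It only shows the general principle of ordering visual and audio tokens so that tokens from the same time window sit next to each other before the sequence reaches the LLM.

```python
from typing import Dict, List, Tuple

# (timestamp in seconds, placeholder label standing in for an embedding vector)
Token = Tuple[float, str]


def group_by_time(visual: List[Token], audio: List[Token], window: float) -> List[str]:
    """Interleave visual and audio tokens by time window.

    Hypothetical helper: tokens whose timestamps fall into the same
    window are placed together, visual first, then audio.
    """
    if window <= 0:
        raise ValueError("window must be positive")

    # Bucket tokens of each modality by the index of their time window.
    buckets: Dict[int, Dict[str, List[Token]]] = {}
    for modality, tokens in (("visual", visual), ("audio", audio)):
        for ts, label in tokens:
            idx = int(ts // window)
            bucket = buckets.setdefault(idx, {"visual": [], "audio": []})
            bucket[modality].append((ts, label))

    # Emit windows in chronological order; within a window keep each
    # modality's tokens sorted by timestamp.
    sequence: List[str] = []
    for idx in sorted(buckets):
        for modality in ("visual", "audio"):
            sequence.extend(label for _, label in sorted(buckets[idx][modality]))
    return sequence


if __name__ == "__main__":
    frames = [(0.0, "v0"), (1.0, "v1"), (2.0, "v2"), (3.0, "v3")]
    sounds = [(0.5, "a0"), (2.5, "a1")]
    print(group_by_time(frames, sounds, window=2.0))
```

With a two-second window, the audio token at 0.5 s lands right after the two frames from the first window rather than at the end of the whole video, so the language model sees what was heard next to what was seen at roughly the same moment.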

To build OmniVinci's omni-modal understanding capabilities, the research team adopted a two-stage training approach: modality-specific training first, followed by omni-modal joint training. The joint stage uses both implicit and explicit learning data to significantly improve the model's joint understanding capabilities.
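The two-stage recipe can be written down as a small schedule. This is only an illustrative sketch: the `Stage` class, its field names, and the stage labels are hypothetical and are not taken from the OmniVinci codebase. The only details drawn from the description above are the order of the stages and the use of implicit and explicit learning data in the joint stage.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Stage:
    name: str                      # hypothetical label for the stage
    modalities: Tuple[str, ...]    # modalities trained in this stage
    joint: bool                    # True if modalities are trained together
    data_sources: Tuple[str, ...]  # kinds of learning data (empty if unspecified)


def build_schedule() -> List[Stage]:
    """Return the two stages in the order they are run."""
    return [
        # Stage 1: each modality is trained on its own.
        Stage("modality_specific", ("vision", "audio"), joint=False, data_sources=()),
        # Stage 2: joint training with implicit and explicit learning data.
        Stage("omni_modal_joint", ("vision", "audio"), joint=True,
              data_sources=("implicit", "explicit")),
    ]


if __name__ == "__main__":
    for step, stage in enumerate(build_schedule(), start=1):
        mode = "jointly" if stage.joint else "separately"
        print(f"Stage {step}: {stage.name} trains {', '.join(stage.modalities)} {mode}")
```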

With the release of OmniVinci, NVIDIA has again demonstrated its capacity for innovation in artificial intelligence, and the model points toward future AI systems that are smarter and more flexible.

GitHub: https://github.com/NVlabs/OmniVinci

Key Points:

🌟 OmniVinci surpasses top models by up to 19.05 points on multimodal understanding benchmarks.

📊 It was trained on only one-sixth as many tokens as its competitors (0.2 trillion versus 1.2 trillion), a sixfold gain in data efficiency.

🔑 It combines an innovative architecture with a two-stage training method, significantly strengthening the model's multimodal understanding capabilities.