Amid intense competition among large AI models, efficient inference and long-context processing have become pain points for developers. The BaiLing large model team at Ant Group has now open-sourced Ring-flash-linear-2.0-128K, a model designed for programming over ultra-long text. It combines a hybrid linear attention mechanism with a sparse MoE architecture and, with only 6.1B parameters activated, delivers performance comparable to 40B dense models, with state-of-the-art (SOTA) results reported in areas such as code generation and intelligent agents. Based on the official Hugging Face release and the technical report, AIbase has analyzed the model's highlights to help developers embrace the new era of "efficient AI programming."

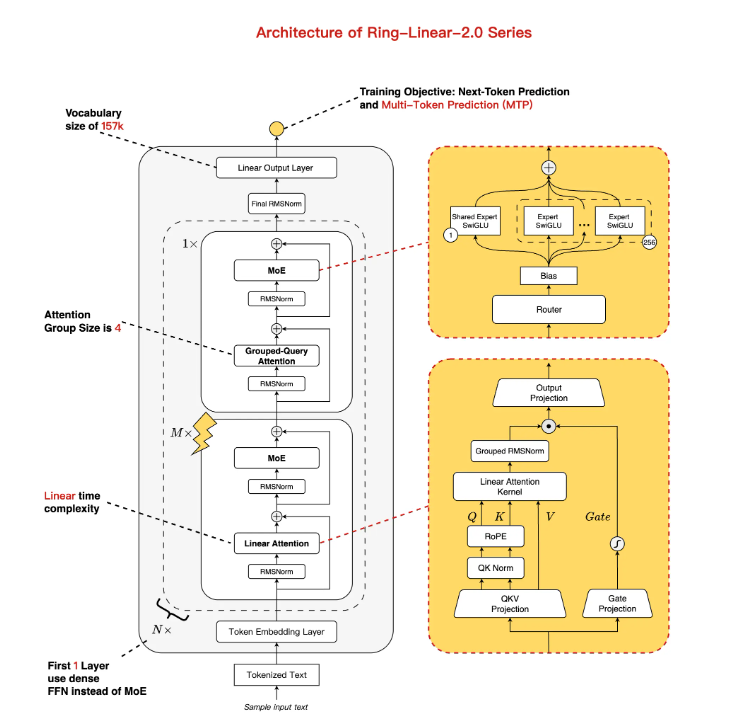

Innovative Architecture: Hybrid Linear + Standard Attention, MoE Optimization for Performance and Efficiency

Ring-flash-linear-2.0-128K is an upgraded version of Ling-flash-base-2.0, with a total of 104B parameters. Thanks to a 1/32 expert activation ratio and multi-token prediction (MTP) layers, only 6.1B parameters are activated per token (4.8B excluding embeddings). The core highlight is the hybrid attention mechanism: most layers use a self-developed linear attention fusion module, supplemented by a small number of standard attention layers. This design gives near-linear time complexity and constant space complexity, which is what makes long-sequence computation efficient. On H20 hardware, the architecture reportedly generates more than 200 tokens per second at a 128K context and is more than three times faster in everyday use, which makes it well suited to resource-limited scenarios.
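To make the "constant space" claim concrete, here is a minimal sketch of plain, unnormalized causal linear attention in pure Python. It is not the team's fusion module (which the article does not describe in detail); the function names are illustrative. The point is only that the recurrent form carries a fixed-size state between tokens, yet produces the same outputs as the pairwise form that revisits every earlier token.

```python
from typing import List

Matrix = List[List[float]]


def linear_attention_recurrent(qs: Matrix, ks: Matrix, vs: Matrix) -> Matrix:
    """Causal linear attention computed one token at a time.

    Only a d_k x d_v state matrix is carried between steps, so memory
    does not grow with the sequence length.
    """
    d_k, d_v = len(ks[0]), len(vs[0])
    state = [[0.0] * d_v for _ in range(d_k)]  # running sum of outer(k_t, v_t)
    outputs = []
    for q, k, v in zip(qs, ks, vs):
        # Fold the new token into the fixed-size state.
        for i in range(d_k):
            for j in range(d_v):
                state[i][j] += k[i] * v[j]
        # Read out: o_t = q_t @ state.
        outputs.append(
            [sum(q[i] * state[i][j] for i in range(d_k)) for j in range(d_v)]
        )
    return outputs


def linear_attention_pairwise(qs: Matrix, ks: Matrix, vs: Matrix) -> Matrix:
    """Reference version: explicit scores against every earlier token (no softmax)."""
    d_v = len(vs[0])
    outputs = []
    for t, q in enumerate(qs):
        out = [0.0] * d_v
        for s in range(t + 1):  # causal: only tokens up to and including t
            score = sum(a * b for a, b in zip(q, ks[s]))
            for j in range(d_v):
                out[j] += score * vs[s][j]
        outputs.append(out)
    return outputs
```

The recurrent version does a constant amount of work per token, while the pairwise version does work proportional to the position, which is where the quadratic cost of standard attention over long contexts comes from.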

Training Upgrade: 1T Token Additional Fine-tuning + RL Stability, Significant Improvement in Complex Reasoning Capabilities

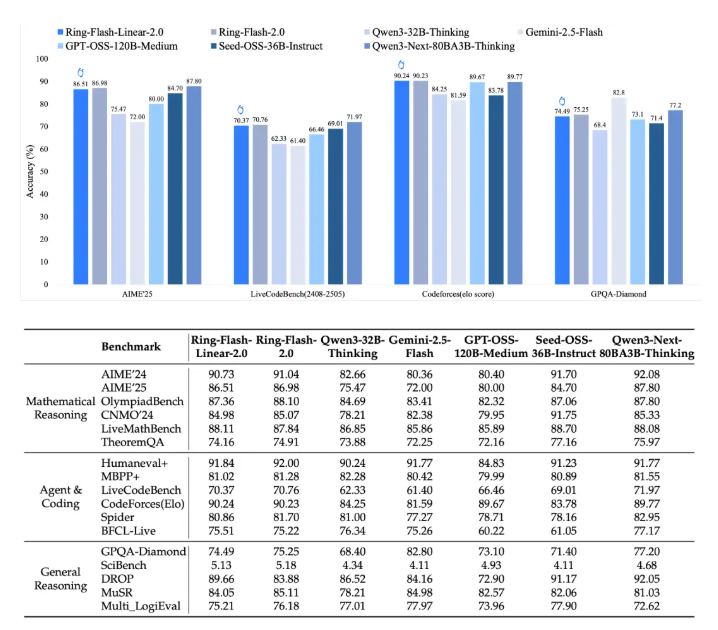

The model is derived from Ling-flash-base-2.0 and was further trained on an additional 1T tokens of high-quality data, followed by stable supervised fine-tuning (SFT) and multi-stage reinforcement learning (RL), which addresses the instability that MoE models show when trained on long reasoning chains. Thanks to Ant's self-developed "Icepop" algorithm, the model remains stable on challenging tasks: it scored 86.98 on the AIME 2025 math competition benchmark and 90.23 on the CodeForces programming benchmark (an Elo-based rating), and it outperformed dense models of up to 40B parameters (such as Qwen3-32B) on logical reasoning and on Creative Writing v3. Benchmark tests show that it not only matches standard attention models (such as Ring-flash-2.0) but also leads several open-source MoE and dense models.

Long-Context Breakthrough: Native 128K + YaRN Expansion to 512K, Smooth Long Input and Output

To address programming pain points, Ring-flash-linear-2.0-128K natively supports a 128K context window, which developers can extend to 512K with YaRN extrapolation. In long input/output scenarios, prefill throughput is nearly five times that of Qwen3-32B, and decoding is about ten times faster. Testing shows that in programming tasks with contexts of 32K and above, the model maintains high accuracy without "model leakage" or drifting output, making it especially suitable for complex scenarios such as front-end development, structured code generation, and agent simulation.
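The arithmetic behind the 128K to 512K extension can be sketched as follows. The scaling factor is simply the target window divided by the native one. The configuration keys below follow a convention used by several Hugging Face model configs and are an assumption here; the article does not say which keys this model expects, so check the model card before relying on them.

```python
NATIVE_CONTEXT = 128 * 1024  # native window stated in the article (131072 tokens)
TARGET_CONTEXT = 512 * 1024  # extended window stated in the article (524288 tokens)


def yarn_scaling_factor(native: int, target: int) -> float:
    """Return the context extension factor: target length / native length."""
    if native <= 0 or target < native:
        raise ValueError("target must be >= native, and native must be positive")
    return target / native


def rope_scaling_config(native: int, target: int) -> dict:
    """Build a YaRN-style rope scaling entry.

    Assumption: the key names mirror a common Hugging Face config layout
    and may differ for this particular model.
    """
    return {
        "rope_type": "yarn",
        "factor": yarn_scaling_factor(native, target),
        "original_max_position_embeddings": native,
    }


if __name__ == "__main__":
    print(rope_scaling_config(NATIVE_CONTEXT, TARGET_CONTEXT))
```

A factor of 4 is a fairly large extrapolation, so it is worth validating output quality on your own long prompts rather than assuming parity with the native window.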

Open Source Ready: Dual Platform Deployment on Hugging Face and ModelScope, Zero-Barrier Onboarding Guide

To accelerate community adoption, the BaiLing team has published the model weights on both Hugging Face and ModelScope, in BF16 and FP8 formats. After installing the dependencies, you can load the model through the Transformers, SGLang, or vLLM frameworks:

- Hugging Face example: run `pip install flash-linear-attention==0.3.2 transformers==4.56.1`, then load the model and generate directly from long code prompts.

- vLLM online inference: serve the model with `--tensor-parallel-size 4` and GPU memory utilization set to 90%, then call it through the API.
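The Hugging Face path above might look roughly like the sketch below. It is hedged: the repository id `inclusionAI/Ring-flash-linear-2.0-128K` is an assumption not stated in this article, `trust_remote_code=True` is assumed to be needed for the custom attention layers, and `device_map="auto"` additionally requires the `accelerate` package. The helper names are illustrative.

```python
# Assumed repository id; verify it on Hugging Face before use.
MODEL_ID = "inclusionAI/Ring-flash-linear-2.0-128K"


def build_messages(task: str) -> list:
    """Wrap a coding task in the chat-message format used by chat templates."""
    return [{"role": "user", "content": task}]


def generate(task: str, max_new_tokens: int = 512) -> str:
    """Load the model and generate a reply for one task.

    Heavy dependencies are imported lazily so build_messages() can be
    used (and tested) without them installed.
    """
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(MODEL_ID, trust_remote_code=True)
    model = AutoModelForCausalLM.from_pretrained(
        MODEL_ID,
        torch_dtype="auto",      # use the dtype stored in the checkpoint
        device_map="auto",       # spread layers across available GPUs
        trust_remote_code=True,  # assumption: custom modeling code is required
    )
    # Render the chat template to text, then tokenize it.
    text = tokenizer.apply_chat_template(
        build_messages(task), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(text, return_tensors="pt").to(model.device)
    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Decode only the newly generated tokens, not the prompt.
    new_tokens = output_ids[0][inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)


if __name__ == "__main__":
    print(generate("Write a Python function that merges two sorted lists."))
```

With 104B total parameters, the weights need multiple high-memory GPUs even though only 6.1B parameters are active per token, so a serving framework such as vLLM or SGLang is likely the more practical route for long-context workloads.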

The technical report is available on arXiv (https://arxiv.org/abs/2510.19338). Developers can download the model and try it right away.

The Era of MoE Linear Attention Has Begun, Ant's BaiLing Leads the Efficient Programming AI

This open-source release marks a new breakthrough for Ant's BaiLing on the "MoE + Long Reasoning Chain + RL" path, with efficiency improving more than sevenfold from the Ling 2.0 series to Ring-linear. AIbase believes that, as long-text reasoning becomes available at roughly one-tenth of the cost, this model will reshape the developer ecosystem: coding novices can quickly generate complex scripts, agent systems become smarter, and enterprise applications become easier to deploy. Looking ahead, with the trillion-parameter Ring-1T flagship on the way, China's MoE models may come to lead the global race for efficient AI.

Conclusion