Recently, the AI startup Thinking Machines Lab released a new training method called On-Policy Distillation, which it reports can make training small models on specific tasks 50 to 100 times more efficient. Mira Murati, the company's founder and former Chief Technology Officer of OpenAI, personally shared the work, and it quickly drew strong interest from both academia and industry.

Combining Reinforcement Learning with Supervised Learning: An "AI Coach" for Small Models

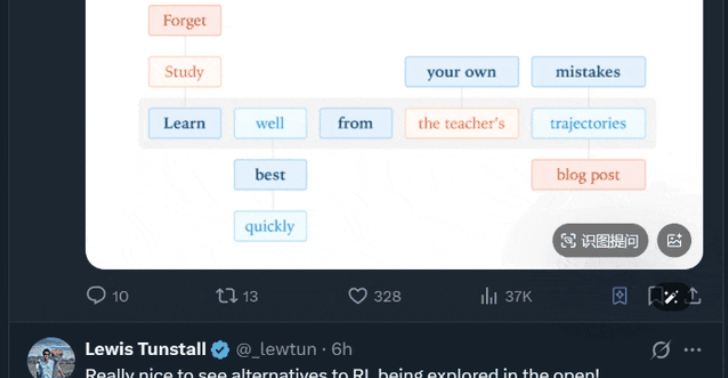

Traditional AI training has long faced a dilemma. Reinforcement learning lets a model explore on its own through trial and error, which is flexible but inefficient; supervised fine-tuning hands the model the answers directly, which is efficient but rigid. On-Policy Distillation combines the two, much like giving a student model a real-time coach: while the student generates its own output, a stronger teacher model evaluates and guides each step, and training minimizes the KL divergence between the student's and the teacher's predictions, which makes the transfer of knowledge precise and stable.
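The coaching loop described above can be sketched on a toy problem. This is a minimal illustration under stated assumptions, not the research team's implementation: the "model" chooses a single token from three options, the teacher is a fixed distribution, the divergence is assumed to be the reverse KL, KL(student || teacher), and the update is a plain REINFORCE-style policy gradient. The function names `softmax`, `reverse_kl` and `distill` are hypothetical.

```python
import math
import random

def softmax(logits):
    """Convert unnormalized scores into a probability distribution."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def reverse_kl(p_student, p_teacher):
    """KL(student || teacher): the quantity the student is trained to shrink."""
    return sum(ps * math.log(ps / pt)
               for ps, pt in zip(p_student, p_teacher) if ps > 0)

def distill(teacher_probs, steps=1000, batch=32, lr=0.5, seed=0):
    """Toy on-policy distillation of a one-token 'policy' (hypothetical setup).

    Each step the student samples from its OWN distribution (on-policy),
    the teacher scores every sample, and a policy-gradient update moves the
    student toward the teacher.
    """
    rng = random.Random(seed)
    k = len(teacher_probs)
    logits = [0.0] * k  # the student starts out uniform
    for _ in range(steps):
        probs = softmax(logits)
        samples = rng.choices(range(k), weights=probs, k=batch)
        grad = [0.0] * k
        for x in samples:
            # Teacher's grade for this sample: a one-sample estimate of
            # the negative reverse KL at this token.
            advantage = math.log(teacher_probs[x]) - math.log(probs[x])
            # Gradient of log softmax(logits)[x] w.r.t. logit j is 1[j == x] - probs[j].
            for j in range(k):
                grad[j] += advantage * ((1.0 if j == x else 0.0) - probs[j])
        # Gradient ascent on -KL(student || teacher), averaged over the batch.
        logits = [z + lr * g / batch for z, g in zip(logits, grad)]
    return softmax(logits)

teacher = [0.7, 0.2, 0.1]
student = distill(teacher)
print(reverse_kl([1 / 3] * 3, teacher), reverse_kl(student, teacher))
```

The first printed value is the divergence of the untrained (uniform) student, about 0.324; the second should be far smaller once the student has been coached toward the teacher. A real system would apply the same per-token grading to whole sequences sampled from a language model, computing the teacher's log-probabilities on the student's own tokens; the toy keeps only that core idea.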

This mechanism avoids a key weakness of traditional distillation, which judges only the result rather than the process, and it also discourages the model from taking shortcuts or overfitting, substantially improving its ability to generalize.
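The difference between judging only the result and judging the process can be illustrated with a toy comparison. This is a hedged sketch with hypothetical numbers and function names (`outcome_advantages`, `per_token_advantages`), not taken from the source: an outcome-only signal assigns a single pass/fail score to the whole answer, while a per-token teacher signal, assumed here to be the log-probability gap used in a reverse-KL objective, points to the specific token where the student went wrong.

```python
import math

def outcome_advantages(num_tokens, final_answer_correct):
    """Outcome-only signal: one pass/fail score, copied to every token."""
    reward = 1.0 if final_answer_correct else 0.0
    return [reward] * num_tokens

def per_token_advantages(student_logprobs, teacher_logprobs):
    """Process signal: the teacher grades each token the student sampled.

    advantage_t = log p_teacher(x_t) - log p_student(x_t), the negative
    one-sample estimate of the per-token reverse KL (an assumption here).
    """
    return [t - s for s, t in zip(student_logprobs, teacher_logprobs)]

# Hypothetical 4-token student answer whose third token is the mistake.
student = [math.log(p) for p in (0.9, 0.8, 0.6, 0.9)]
teacher = [math.log(p) for p in (0.9, 0.8, 0.05, 0.9)]

outcome = outcome_advantages(len(student), final_answer_correct=False)
process = per_token_advantages(student, teacher)
worst = min(range(len(process)), key=process.__getitem__)
print(outcome)  # [0.0, 0.0, 0.0, 0.0]: no hint of where the answer went wrong
print(worst)    # 2: the per-token signal singles out the third token
```

Both signals agree that the answer is bad, but only the per-token one tells the student which step to change, which is the intuition behind grading the process rather than only the result.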

Striking Test Results: 7 to 10 Times Fewer Training Steps, Up to 100 Times Greater Efficiency

On mathematical reasoning tasks, the research team brought a small model close to the performance of a 32B-parameter model using only 1/7 to 1/10 of the training steps required by conventional reinforcement learning, cutting overall compute cost by as much as two orders of magnitude. This means that small and medium-sized companies and research teams with limited resources can efficiently train specialized models that rival those built by major companies.

More importantly, the method addresses the problem of "catastrophic forgetting" in enterprise AI deployment. In an enterprise-assistant experiment, the model learned new business knowledge while retaining its original conversational and tool-calling abilities, offering a practical path for industry AI systems that need to be updated continuously.

A Strong Core Team, with Techniques Drawn from Hands-On Experience at OpenAI

The research was led by Kevin Lu, who previously led several key projects at OpenAI. Now a core member of Thinking Machines Lab, he is applying his experience in large-model training to the ecosystem of efficient small models. The team believes that as AI moves toward vertical, scenario-specific applications, "small but specialized" models will be the main force in commercial deployment, and that On-Policy Distillation is a key enabler on that path.

As compute bottlenecks become more apparent, the industry is shifting from a "bigger models only" paradigm toward one of "efficient intelligence." Thinking Machines Lab's result not only lowers the barrier to AI development but also suggests that the era of cost-effective specialized models is arriving faster.

Blog post: https://thinkingmachines.ai/blog/on-policy-distillation/