A newly open-sourced system has attracted widespread attention in the field of AI image editing and generation. DreamOmni2, jointly developed by ByteDance, the Chinese University of Hong Kong, the Hong Kong University of Science and Technology, and the University of Hong Kong, has been officially released as open source.

DreamOmni2 aims to improve how well AI follows instructions in image processing by supporting multimodal instructions. The system can interpret text instructions and reference images together, which addresses a limitation of previous models in handling abstract concepts such as style, material, and lighting. As a result, interaction between users and the AI becomes more natural, like talking to a partner who understands one's intentions.

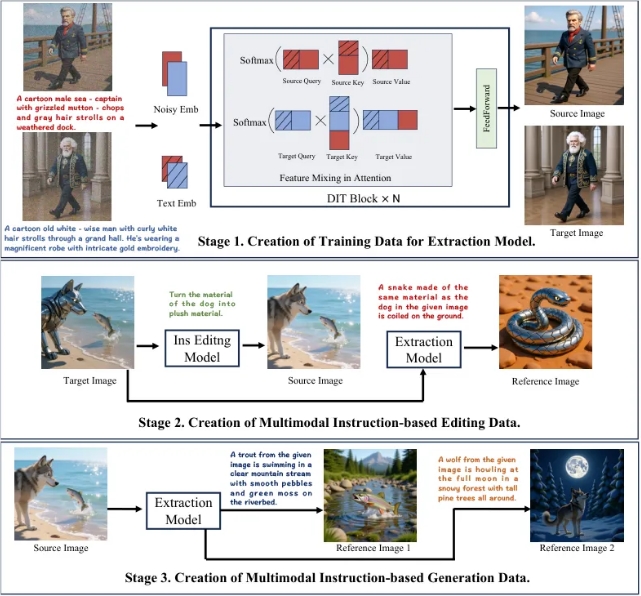

To train the model to understand complex text and image instructions, the DreamOmni2 development team created a three-stage data pipeline. First, an extraction model is trained to extract specific elements or abstract attributes from images. Second, the extraction model is used to generate multimodal instruction editing data, producing training samples that each consist of a source image, an instruction, reference images, and a target image. Third, further extraction and combination produce additional reference images, yielding a rich multimodal instruction generation dataset. Together, these steps supply the high-quality data used to train the system.
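To make the structure of an editing sample concrete, here is a minimal Python sketch of the four components described above. The class name `EditingSample`, its field names, the `is_complete` helper, and the file names are all hypothetical and chosen for illustration; they are not taken from the DreamOmni2 code base.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class EditingSample:
    """One multimodal instruction editing sample (field names are hypothetical)."""
    source_image: str            # path to the image to be edited
    instruction: str             # text instruction describing the edit
    reference_images: List[str]  # paths to one or more reference images
    target_image: str            # path to the expected edited result


def is_complete(sample: EditingSample) -> bool:
    """Return True if every component of the sample is present and non-empty."""
    return bool(
        sample.source_image
        and sample.instruction.strip()
        and sample.reference_images
        and all(sample.reference_images)
        and sample.target_image
    )


# Illustrative sample: file names and instruction text are made up.
example = EditingSample(
    source_image="source.png",
    instruction="Give the jacket the material shown in the reference image.",
    reference_images=["material_ref.png"],
    target_image="target.png",
)
```

A check like `is_complete` is one simple way a data pipeline could discard samples that are missing a reference image or an instruction before training.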

In terms of model architecture, DreamOmni2 proposes an index encoding and position encoding offset scheme so that the model can accurately distinguish between multiple input images. In addition, a vision-language model (VLM) is introduced to bridge the gap between user instructions and the model's understanding. This design improves the system's accuracy when processing instructions and helps it better capture the user's actual intent.
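The article does not spell out how the index encoding and position offset are computed, so the following Python sketch shows only one plausible reading of the idea. It assumes each input image is a grid of tokens, tags every token with the index of its image, and shifts column coordinates by the total width of the preceding images so that no two images share a position. The function name `assign_positions` and the choice to offset along the column axis are assumptions, not the authors' implementation.

```python
from typing import List, Tuple

# (image_index, row, column) for a single image token
TokenPosition = Tuple[int, int, int]


def assign_positions(grid_sizes: List[Tuple[int, int]]) -> List[List[TokenPosition]]:
    """Assign an image index and a shifted grid position to every token.

    grid_sizes holds (height, width) in tokens for each input image.
    Columns of later images are offset by the cumulative width of the
    earlier ones, so positions never overlap across images (assumed scheme).
    """
    positions: List[List[TokenPosition]] = []
    col_offset = 0
    for image_index, (height, width) in enumerate(grid_sizes):
        tokens = [
            (image_index, row, col + col_offset)
            for row in range(height)
            for col in range(width)
        ]
        positions.append(tokens)
        col_offset += width  # the next image starts after this one
    return positions


# Two inputs: a 2x2 source image and a 1x3 reference image (in tokens).
layout = assign_positions([(2, 2), (1, 3)])
```

In this sketch the index tells the model which image a token belongs to, while the offset keeps tokens from different images from colliding at the same coordinates.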

Testing has shown that DreamOmni2 outperforms all of the open-source models it was compared against in multimodal instruction editing tasks and approaches top commercial models. Compared with commercial models, DreamOmni2 is reported to deliver higher accuracy and consistency when handling complex instructions, avoiding unnecessary changes and image defects.

The open-sourcing of DreamOmni2 not only opens up new possibilities for AI-assisted creation but also offers researchers in related fields a unified evaluation standard. The release is being described as a major step forward for AI image editing and generation. Industry experts have stated that the success of DreamOmni2 will greatly promote the popularization and application of AI technology.