AI company Anthropic recently confirmed that some Claude models had suffered degraded response quality over the past several weeks, and that the problems have since been resolved. The company emphasized that the degradation was not caused by demand or cost considerations, but by unexpected technical problems.

According to reports, the degradation traced back to two separate technical faults. The first ran from August 5 to September 4 and initially affected a small share of Claude Sonnet 4 requests. The problem grew more widespread after August 29, after which the Anthropic team identified the cause and rolled out a fix. The second fault ran from August 26 to September 5 and affected some requests to Claude Haiku 3.5 and Claude Sonnet 4.

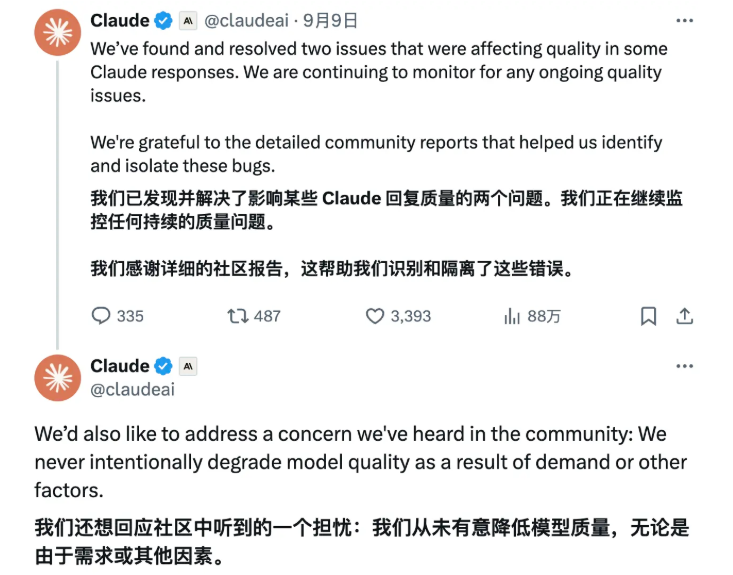

Anthropic stated that all affected models have fully returned to normal and that it is continuing to monitor quality across all models, including the latest, Claude Opus 4.1. The company specifically credited detailed reports from community users with helping it quickly identify and isolate the faults, a sign of how much weight it gives to user feedback.

In its official statement, Anthropic also addressed outside speculation about declining model quality, reiterating that the problems were absolutely not the result of "reducing intelligence to save costs." The incident affected several services, including claude.ai, console.anthropic.com, and api.anthropic.com. Despite these challenges, Anthropic says it remains committed to improving the user experience and communicating openly with users.

With the issues resolved, the Claude models are back on track, and users can once again rely on them for tasks such as programming and data processing. Anthropic's handling of the incident reflects its attention to technical quality and its sense of responsibility toward users, and the company says it will keep working to ensure its services remain stable and efficient.