Sun Yat-sen University, Pengcheng Laboratory, and Meituan recently released X-SAM, a new multimodal large model for image segmentation. According to the team, the model improves segmentation accuracy and moves the field from "segment anything" to "any segmentation."

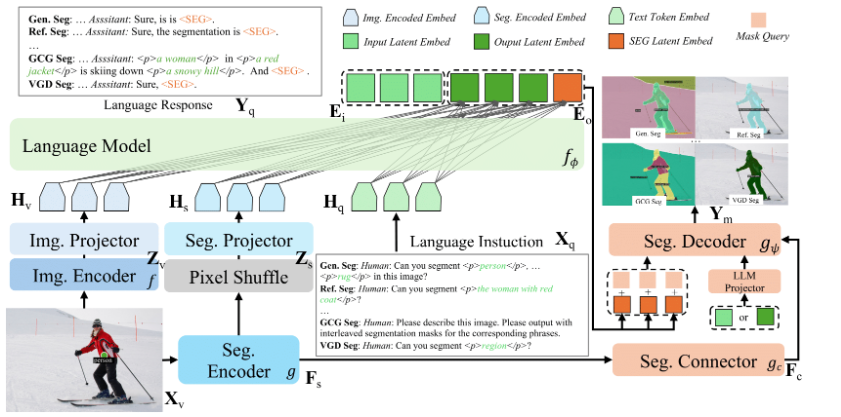

X-SAM is built around a unified design. It introduces a single input and output format that adapts to different segmentation needs. Users can issue either text queries or visual queries: text queries suit general segmentation tasks, while visual queries enable interactive segmentation through visual prompts such as points or scribbles. X-SAM also uses a unified output representation, so segmentation results from different tasks can be interpreted in the same way.
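To make the idea of one format for both query types concrete, here is a minimal sketch in Python. It is not X-SAM's actual API: the names `SegQuery`, `SegResult`, and `describe` are hypothetical and were chosen only for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Tuple

Point = Tuple[int, int]  # (x, y) pixel coordinate


@dataclass
class SegQuery:
    """Hypothetical unified input: one type covers text and visual queries."""
    kind: str                                   # "text" or "visual"
    text: Optional[str] = None                  # used by text queries
    points: List[Point] = field(default_factory=list)  # used by visual queries

    def __post_init__(self) -> None:
        if self.kind not in ("text", "visual"):
            raise ValueError(f"unknown query kind: {self.kind}")
        if self.kind == "text" and not self.text:
            raise ValueError("text query needs a non-empty text prompt")
        if self.kind == "visual" and not self.points:
            raise ValueError("visual query needs at least one point")


@dataclass
class SegResult:
    """Hypothetical unified output: every task returns a label and a mask."""
    label: str
    mask: List[List[int]]  # binary mask with the image's height and width


def describe(query: SegQuery) -> str:
    """Summarize a query without caring which kind it is at the call site."""
    if query.kind == "text":
        return f"segment by text: {query.text}"
    return f"segment by {len(query.points)} visual prompt point(s)"


print(describe(SegQuery(kind="text", text="the dog")))
print(describe(SegQuery(kind="visual", points=[(3, 4)])))
```

The point of the sketch is that downstream code handles one query type and one result type, whichever kind of prompt the user supplies.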

To improve segmentation performance, X-SAM adopts a dual-encoder architecture. One encoder extracts global features, while the other focuses on fine-grained features. This pairing strengthens the model's understanding of the image as a whole while preserving the detail needed for precise masks. The model also introduces a segmentation connector and a unified segmentation decoder. The connector supplies multi-scale features, and the unified decoder replaces the traditional decoder architecture, further improving segmentation performance.
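As a rough illustration of what "multi-scale features" means, the toy sketch below turns one feature map into three resolutions using average pooling and nearest-neighbour upsampling. This is an assumption made for illustration only; the article does not describe how X-SAM's segmentation connector is implemented, and the function names here are hypothetical.

```python
from typing import Dict, List

Grid = List[List[float]]  # a single-channel feature map


def avg_pool2x(grid: Grid) -> Grid:
    """Halve height and width by averaging each 2x2 block."""
    h, w = len(grid), len(grid[0])
    if h % 2 or w % 2:
        raise ValueError("height and width must be even")
    return [
        [
            (grid[r][c] + grid[r][c + 1] + grid[r + 1][c] + grid[r + 1][c + 1]) / 4.0
            for c in range(0, w, 2)
        ]
        for r in range(0, h, 2)
    ]


def upsample2x(grid: Grid) -> Grid:
    """Double height and width by repeating each value (nearest neighbour)."""
    out: Grid = []
    for row in grid:
        wide = [v for v in row for _ in range(2)]
        out.append(wide)
        out.append(list(wide))
    return out


def multi_scale(features: Grid) -> Dict[str, Grid]:
    """Return the same features at fine, middle, and coarse resolution."""
    return {
        "fine": upsample2x(features),
        "mid": features,
        "coarse": avg_pool2x(features),
    }


pyramid = multi_scale([[1.0, 2.0], [3.0, 4.0]])
for name, grid in pyramid.items():
    print(name, len(grid), "x", len(grid[0]))
```

A decoder that receives several resolutions like this can use the coarse level for context and the fine level for sharp mask boundaries.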

X-SAM is trained in three stages. The first stage, segmentation refinement, strengthens the model's basic segmentation capability. The second stage, alignment pre-training, aligns language and visual embeddings. The third stage, hybrid fine-tuning, optimizes overall performance by co-training on multiple datasets. Experimental results show that X-SAM achieves state-of-the-art performance on more than 20 segmentation datasets, demonstrating strong multimodal visual understanding.
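Co-training on multiple datasets requires some policy for mixing samples from each of them. The sketch below shows one simple option, round-robin interleaving, in which smaller datasets restart when exhausted. The policy, the function name `interleave`, and the dataset names are all assumptions for illustration; the article does not say how X-SAM mixes its training data.

```python
from itertools import cycle
from typing import Dict, Iterator, List, Tuple


def interleave(datasets: Dict[str, List[str]], steps: int) -> Iterator[Tuple[str, str]]:
    """Yield (dataset_name, sample) pairs, visiting datasets in round-robin order.

    Each dataset restarts from its beginning once exhausted, so a small
    dataset keeps contributing for the whole run.
    """
    for name, samples in datasets.items():
        if not samples:
            raise ValueError(f"dataset {name!r} is empty")
    iters = {name: cycle(samples) for name, samples in datasets.items()}
    names = cycle(datasets)  # cycles over the dataset names in insertion order
    for _ in range(steps):
        name = next(names)
        yield name, next(iters[name])


# Hypothetical dataset names and sample identifiers.
mix = {
    "generic_seg": ["g0", "g1", "g2"],
    "referring_seg": ["r0"],
    "interactive_seg": ["i0", "i1"],
}
for name, sample in interleave(mix, steps=6):
    print(name, sample)
```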

Looking ahead, the research team hopes to extend X-SAM to the video domain, incorporating temporal information to advance video understanding. The team sees the model as opening new directions for image segmentation research and as groundwork for more general visual understanding systems.

Code: https://github.com/wanghao9610/X-SAM

Demo: https://47.115.200.157:7861

Key Points:

🌟 X-SAM moves from "segment anything" to "any segmentation," improving both the accuracy and the application scope of image segmentation.

💡 The model introduces a unified input and output format that supports both text and visual queries, making interaction more flexible.

🚀 After three-stage training, X-SAM achieves state-of-the-art performance on more than 20 datasets, laying the foundation for future visual understanding systems.