Google has released BlenderFusion, a framework for 3D-grounded visual editing and generative compositing. It targets a current gap in image generation: handling complex scenes. Generative adversarial networks and diffusion models are good at producing whole images, but they offer limited precise control over individual visual elements within those images. BlenderFusion offers a new approach to this problem.

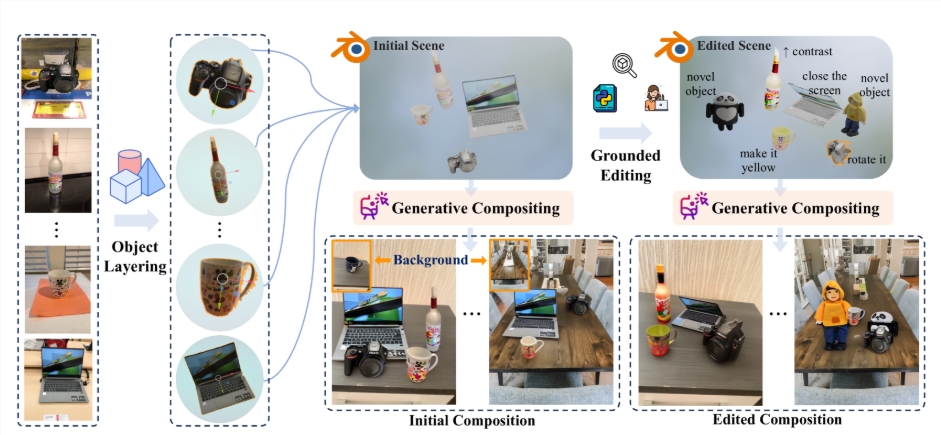

BlenderFusion's workflow has three stages: layering, editing, and compositing. In the layering stage, BlenderFusion extracts editable 3D objects from the input 2D image. The researchers use off-the-shelf vision foundation models for this step, such as SAM2 to segment objects and DepthPro to estimate depth. By combining the object masks with the depth estimates, the system lifts each object into a 3D point cloud, which serves as the basis for later editing.
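To make the "lifting" step concrete, here is a minimal sketch of turning a segmentation mask plus a depth map into a point cloud. It assumes a pinhole camera with known intrinsics (`fx`, `fy`, `cx`, `cy`) and depth measured along the optical axis; the function name and parameters are hypothetical and are not taken from BlenderFusion's code. In practice the mask would come from a segmenter such as SAM2 and the depth from a model such as DepthPro.

```python
import numpy as np


def unproject_masked_depth(depth, mask, fx, fy, cx, cy):
    """Lift masked pixels of a depth map into camera-space 3D points.

    Hypothetical helper for illustration. Assumes a pinhole camera model
    and depth values measured along the camera's optical (z) axis.
    Returns an array of shape (N, 3), one row per masked pixel.
    """
    v, u = np.nonzero(mask)      # row (v) and column (u) indices of object pixels
    z = depth[v, u]              # depth of each object pixel
    x = (u - cx) * z / fx        # back-project horizontally
    y = (v - cy) * z / fy        # back-project vertically
    return np.stack([x, y, z], axis=-1)


# Usage: a 4x4 depth map at constant depth 2.0 with one object pixel at (row 1, col 3).
depth = np.full((4, 4), 2.0)
mask = np.zeros((4, 4), dtype=bool)
mask[1, 3] = True
points = unproject_masked_depth(depth, mask, fx=2.0, fy=2.0, cx=1.0, cy=1.0)
print(points)
```

Each object gets its own point cloud because the mask selects only that object's pixels, which is what makes the objects independently editable afterwards.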

The editing stage relies on Blender. Users can move, rotate, and scale the imported 3D objects, and can also fine-tune their appearance and materials. This lets users adjust each element of the scene independently, and every edit carries through to the final render.
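In BlenderFusion these edits are made interactively in Blender. As a rough illustration of the underlying geometry, the sketch below applies a scale, a rotation about the vertical (z) axis, and a translation to an object's point cloud, pivoting around the object's centroid. The helper names and the choice of a z-axis rotation are assumptions made for this example, not BlenderFusion's API.

```python
import numpy as np


def rotation_z(theta):
    """3x3 rotation matrix for an angle theta (radians) about the z axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0],
                     [s, c, 0.0],
                     [0.0, 0.0, 1.0]])


def edit_object(points, scale=1.0, yaw=0.0, translation=(0.0, 0.0, 0.0)):
    """Scale and rotate points about their centroid, then translate them.

    Hypothetical helper. `points` has shape (N, 3) with one point per row.
    """
    centroid = points.mean(axis=0)
    local = points - centroid                       # move the pivot to the origin
    edited = scale * (local @ rotation_z(yaw).T)    # rotate row vectors, then scale
    return edited + centroid + np.asarray(translation)


# Usage: double the size, turn 90 degrees, and lift by 5 units.
obj = np.array([[1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]])
moved = edit_object(obj, scale=2.0, yaw=np.pi / 2, translation=(0.0, 0.0, 5.0))
print(moved)
```

Pivoting around the centroid keeps the object in place while it is scaled or rotated, which matches how such edits usually behave in a 3D editor.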

In the compositing stage, BlenderFusion merges the edited 3D scene with the background to produce the final image. This stage relies on a generative compositor that takes both the original scene and the edited scene as inputs and combines them into a coherent, high-quality result. To make this work, the research team adapted existing diffusion models so that they integrate information from the edited scene and the source scene, which improves the compositing results.

With BlenderFusion, Google demonstrates progress in 3D visual editing and gives users a new creative tool that makes complex visual tasks more intuitive and efficient. The framework could make everyday work easier for designers and creators.

Project: https://blenderfusion.github.io/

Key Points:

🌟 BlenderFusion combines 3D editing tools with diffusion models to enable efficient 3D visual editing and generative compositing.

🛠️ The framework's workflow includes three stages: layering, editing, and compositing, allowing users to easily edit 3D objects and generate final images.

📈 Through its adapted diffusion compositor, BlenderFusion handles complex scenes better, helping designers realize their ideas.