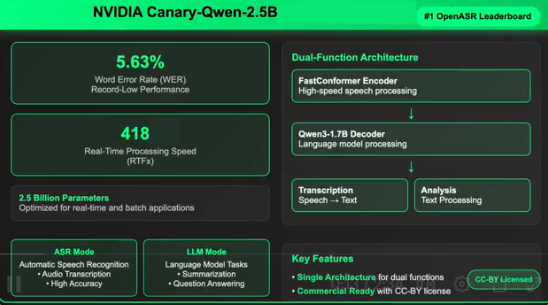

NVIDIA has just released Canary-Qwen-2.5B, a hybrid model that combines automatic speech recognition (ASR) with a large language model (LLM). It achieves a word error rate (WER) of 5.63%, placing it at the top of the Hugging Face OpenASR leaderboard. The model is released under the CC-BY license, which permits commercial use and removes a common barrier to enterprise-level speech AI development.

Technical Breakthrough: Unified Speech Understanding and Language Processing

This release marks a significant technical milestone: Canary-Qwen-2.5B integrates transcription and language understanding into a single model architecture, enabling downstream tasks such as summarization and question answering directly from audio. Where a traditional ASR pipeline runs transcription and post-processing as separate stages, this architecture folds both into one workflow.

Key Performance Metrics

The model has set new records across multiple dimensions:

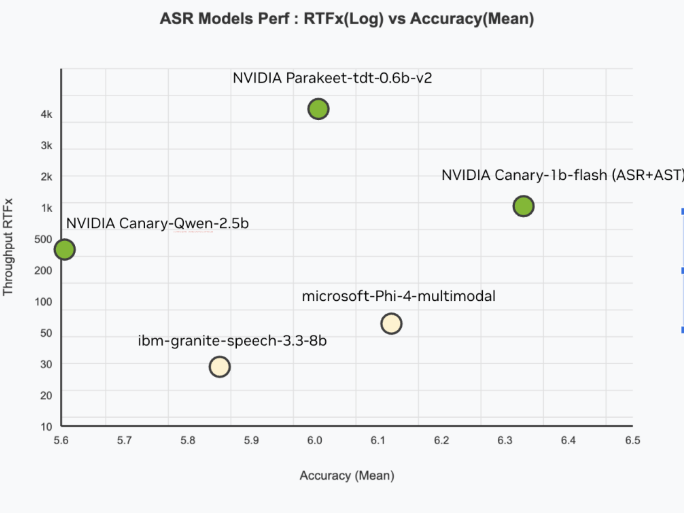

- Accuracy: 5.63% WER, the lowest on the Hugging Face OpenASR leaderboard

- Speed: RTFx of 418, processing audio 418 times faster than real-time

- Efficiency: Only 2.5 billion parameters, more compact than larger models that score worse

- Training Scale: Trained on 234,000 hours of diverse English speech
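To make the accuracy and speed figures concrete, here is a minimal sketch of how WER and RTFx are conventionally computed. WER is the word-level edit distance between a reference transcript and the model output, divided by the number of reference words; RTFx is audio duration divided by processing time. The function names are illustrative, and the sketch skips the text normalization that a leaderboard evaluation would normally apply before scoring.

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")

    # prev[j] holds the edit distance between the reference words seen so far
    # and the first j hypothesis words (classic Levenshtein, one row at a time).
    prev = list(range(len(hyp) + 1))
    for i, ref_word in enumerate(ref, start=1):
        curr = [i] + [0] * len(hyp)
        for j, hyp_word in enumerate(hyp, start=1):
            substitution_cost = 0 if ref_word == hyp_word else 1
            curr[j] = min(
                prev[j] + 1,                      # deletion
                curr[j - 1] + 1,                  # insertion
                prev[j - 1] + substitution_cost,  # match or substitution
            )
        prev = curr
    return prev[-1] / len(ref)


def rtfx(audio_seconds: float, processing_seconds: float) -> float:
    """Inverse real-time factor: seconds of audio handled per second of compute."""
    if processing_seconds <= 0:
        raise ValueError("processing_seconds must be positive")
    return audio_seconds / processing_seconds


if __name__ == "__main__":
    # One dropped word out of six reference words.
    print(word_error_rate("the cat sat on the mat", "the cat sat on mat"))
    # At an RTFx of 418, one hour of audio takes about 3600 / 418 seconds.
    print(3600 / 418)
```

Read this way, a WER of 5.63% corresponds to roughly 5 to 6 word errors per 100 reference words, and an RTFx of 418 means an hour of audio is processed in under nine seconds of compute.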

Innovative Hybrid Architecture Design

The core innovation of Canary-Qwen-2.5B lies in its hybrid architecture, consisting of two key components:

The FastConformer encoder is designed for low-latency, high-accuracy transcription, while the Qwen3-1.7B LLM decoder is an unmodified pre-trained large language model that receives the encoder's output through an adapter.

This adapter design ensures modularity, allowing the Canary encoder to be separated and the Qwen3-1.7B to run as an independent LLM for text-based tasks. A single deployment can handle downstream language tasks for both spoken and written inputs, enhancing multimodal flexibility.
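The modularity described above can be illustrated with a toy sketch. It is not NeMo code and does not reflect the model's real interfaces: every class, field, and function name here is hypothetical, and the components are trivial stand-ins. The only point is structural: the adapter is the sole bridge between the speech encoder and the LLM, so the same LLM also serves text-only requests when no audio is supplied.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

Vector = List[float]


@dataclass
class HybridSpeechLLM:
    """Toy encoder -> adapter -> LLM pipeline (all names are hypothetical)."""
    encode_audio: Callable[[List[float]], List[Vector]]  # speech encoder
    adapt: Callable[[Vector], Vector]                    # maps encoder frames into the LLM input space
    embed_text: Callable[[str], List[Vector]]            # the LLM's own text embedding
    generate: Callable[[List[Vector]], str]              # the LLM decoder, used as-is

    def run(self, prompt: str, audio: Optional[List[float]] = None) -> str:
        inputs = self.embed_text(prompt)
        if audio is not None:
            # Only this branch touches the encoder and adapter.
            inputs = inputs + [self.adapt(frame) for frame in self.encode_audio(audio)]
        return self.generate(inputs)


# Trivial stand-in components so the sketch runs end to end.
def toy_encoder(samples: List[float]) -> List[Vector]:
    return [samples[i:i + 2] for i in range(0, len(samples), 2)]  # 2 samples per frame


def toy_adapter(frame: Vector) -> Vector:
    return [sum(frame), frame[0], 0.0]  # project each frame to 3 dimensions


def toy_embed(text: str) -> List[Vector]:
    return [[float(len(word)), 0.0, 1.0] for word in text.split()]


def toy_generate(inputs: List[Vector]) -> str:
    return f"{len(inputs)} input vectors"


model = HybridSpeechLLM(toy_encoder, toy_adapter, toy_embed, toy_generate)

if __name__ == "__main__":
    print(model.run("Summarize this"))                          # text-only path
    print(model.run("Transcribe:", audio=[0.1, 0.2, 0.3, 0.4]))  # speech path
```

Swapping in a different encoder or LLM in this sketch only requires the adapter to map between their vector sizes, which is the property that makes a mix-and-match design plausible.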

Enterprise-Level Application Value

Unlike many research models constrained by non-commercial licenses, Canary-Qwen-2.5B is released under the CC-BY license, opening up a wide range of commercial applications:

- Enterprise transcription services

- Knowledge extraction from audio

- Real-time meeting summaries

- Speech-controlled AI agents

- Regulation-compliant document processing (healthcare, legal, finance)

The model's LLM-aware decoding also improves punctuation, capitalization, and contextual accuracy, which are often weak points in traditional ASR output.

Hardware Compatibility and Deployment Flexibility

Canary-Qwen-2.5B is optimized for a range of NVIDIA GPUs, from the data center A100 and H100 to the workstation RTX PRO 6000 and the consumer-grade GeForce RTX 5090. This cross-hardware scalability makes it suitable for both cloud inference and on-premises edge workloads.

Open Source Driving Industry Development

By open-sourcing the model and its training procedures, the NVIDIA research team aims to promote community-driven advancements in speech AI. Developers can mix and match other NeMo-compatible encoders and LLMs to create custom hybrid models for new domains or languages.

This release also points toward LLM-centric ASR, where the LLM is no longer a post-processor but a core component of the speech-to-text process. This approach reflects a broader trend towards agentic models: systems capable of comprehensive understanding and decision-making based on real-world multimodal inputs.

NVIDIA's Canary-Qwen-2.5B is not just an ASR model, but a blueprint for integrating speech understanding with general-purpose language models. With state-of-the-art (SoTA) performance, commercial availability, and open innovation pathways, this release is expected to become a foundational tool for enterprises, developers, and researchers building next-generation speech-first AI applications.