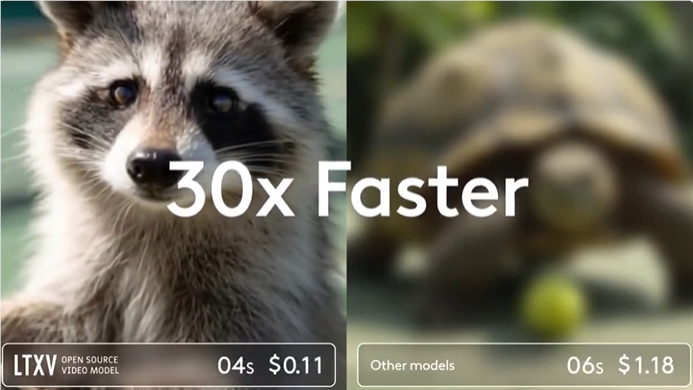

AI video generation has reached another milestone. Lightricks' LTX Studio recently released its latest open-source video generation model, LTX-Video13B. With 13 billion parameters, a reported generation speed 30 times that of comparable models, and a new multi-scale rendering technique, it has quickly drawn industry attention. The model runs efficiently on consumer-grade GPUs, improves video coherence and detail, and gives creators finer control and more flexibility.

Technical Breakthrough: Multi-Scale Rendering Redefines Video Generation

LTX-Video13B uses multi-scale rendering: it first generates rough motion and scene layout at low resolution, then progressively refines detail at higher resolution. According to Lightricks, this improves both generation speed and video quality. The model is reported to generate video 30 times faster than comparable models while maintaining high-quality output, rendering a 5-second video in just 2 seconds, and it runs smoothly on consumer-grade GPUs such as the NVIDIA RTX 4090. It also requires less memory than traditional models, which makes for a more efficient creative workflow.
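The coarse-to-fine idea can be illustrated with a toy sketch. This is not Lightricks' implementation: `coarse_pass`, `upsample2x`, `refine`, and `multiscale_generate` are hypothetical names, random numbers stand in for the diffusion model, and nearest-neighbour upsampling is an assumption chosen for simplicity. The point is only the control flow, in which layout is decided on a small grid and each later level adds small corrections at double the resolution.

```python
import random


def coarse_pass(frames, height, width, seed=0):
    """Stand-in for the low-resolution pass: a small random 'video' grid."""
    rng = random.Random(seed)
    return [[[rng.random() for _ in range(width)] for _ in range(height)]
            for _ in range(frames)]


def upsample2x(video):
    """Nearest-neighbour spatial upsampling: each pixel becomes a 2x2 block."""
    out = []
    for frame in video:
        new_frame = []
        for row in frame:
            wide = [v for v in row for _ in range(2)]
            new_frame.append(wide)
            new_frame.append(list(wide))
        out.append(new_frame)
    return out


def refine(video, strength=0.1, seed=1):
    """Stand-in for refinement: a small per-pixel change on top of the layout."""
    rng = random.Random(seed)
    return [[[v + strength * (rng.random() - 0.5) for v in row] for row in frame]
            for frame in video]


def multiscale_generate(frames=4, height=4, width=6, levels=2):
    """Decide layout at low resolution, then upsample and refine per level."""
    video = coarse_pass(frames, height, width)
    for level in range(levels):
        video = refine(upsample2x(video), seed=level + 1)
    return video


video = multiscale_generate()
print(len(video), len(video[0]), len(video[0][0]))  # frames, height, width
```

In this sketch the expensive decisions are made on a grid with one sixteenth of the final pixel count, which is the intuition behind the speed and memory savings the article describes.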

The model is based on the DiT (Diffusion Transformer) architecture and uses optimized kernels and the bfloat16 data format to further improve performance. LTX-Video13B supports real-time generation at 1216×704 resolution and 30 frames per second, and it covers text-to-video, image-to-video, and video-to-video generation modes.
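As a quick sanity check on what "real-time" means here, the reported figures can be turned into a throughput number. The sketch below assumes the 5-second clip rendered in 2 seconds is produced at 30 frames per second and 1216×704; the article does not state the settings for that benchmark, so treat the result as illustrative. `realtime_factor` is a hypothetical helper.

```python
def realtime_factor(clip_seconds, fps, wall_seconds):
    """Return (frame count, frames generated per second, speed relative to playback)."""
    frames = clip_seconds * fps
    throughput = frames / wall_seconds
    return frames, throughput, throughput / fps


# Assumed benchmark settings: 5 s clip, 30 fps, rendered in 2 s of wall-clock time.
frames, throughput, factor = realtime_factor(clip_seconds=5, fps=30, wall_seconds=2)
pixels_per_frame = 1216 * 704

print(f"{frames} frames at {throughput:.0f} frames/s ({factor:.1f}x playback speed)")
print(f"{pixels_per_frame} pixels per frame")
```

A factor above 1.0 means frames are produced faster than they would be played back, which is the usual sense of real-time generation.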

Powerful Features: Precise Control and Infinite Creativity

LTX-Video13B performs well on motion coherence, scene structure, and understanding of camera relationships, producing video that is logically consistent and rich in detail. The model supports keyframe control, character and camera movement, and multi-camera combinations, giving users fine-grained creative control. For example, creators can use text prompts or reference images to adjust character actions, scene transitions, or camera angles and achieve cinematic visual effects.

LTX-Video13B also supports video extension and style or motion replacement. Users can extend existing videos up to 60 seconds or apply stylistic transformations, such as converting live-action footage into an animated style. This flexibility makes it well suited to short film production, advertising, and social media content creation.

Open Source Ecosystem: Empowering Developers and Creators

As an open-source model, LTX-Video13B is available on GitHub and Hugging Face, where developers and creators can modify and customize it. Lightricks also provides the LTX-Video-Trainer tool, which supports full fine-tuning of the 2B and 13B models as well as LoRA (low-rank adaptation) training, making it easier to build custom control models for inputs such as depth, pose, or edge detection. The model is compatible with ComfyUI workflows, and the new Looping Sampler node supports generating videos of any length while keeping motion consistent.
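For readers unfamiliar with LoRA, the core idea is to keep a weight matrix W frozen and learn a low-rank update, so the effective weight becomes W + (alpha / r) · B · A. The pure-Python sketch below shows that merge and the resulting parameter savings. It is a generic illustration of the technique, not the LTX-Video-Trainer API; `lora_merge`, `trainable_params`, and the layer sizes are hypothetical.

```python
def matmul(a, b):
    """Multiply two matrices given as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_merge(w, a, b, alpha):
    """Return W + (alpha / r) * B @ A, where A is r x k and B is d x r."""
    r = len(a)
    delta = matmul(b, a)
    scale = alpha / r
    return [[wv + scale * dv for wv, dv in zip(w_row, d_row)]
            for w_row, d_row in zip(w, delta)]


def trainable_params(d, k, r):
    """Compare trainable parameters for a d x k layer: full fine-tune vs. rank-r LoRA."""
    return {"full": d * k, "lora": r * (d + k)}


# Tiny example: a 2x3 frozen weight with a rank-1 adapter.
w = [[1, 0, 0], [0, 1, 0]]
a = [[1, 2, 3]]      # r x k
b = [[1], [2]]       # d x r
merged = lora_merge(w, a, b, alpha=1)
print(merged)

# Hypothetical 4096 x 4096 layer with a rank-16 adapter.
print(trainable_params(4096, 4096, 16))
```

Because only A and B are trained, an adapter for a layer of this assumed size has well under 1% of the layer's parameters, which is why LoRA training is practical on far more modest hardware than full fine-tuning.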

To further lower the barrier to entry, Lightricks has released a set of auxiliary tools, including an 8-bit quantized version (ltxv-13b-fp8) and the IC-LoRA Detailer, which improve performance on low-memory devices. The model is free to use for startups and organizations with annual revenue below $10 million, reflecting Lightricks' commitment to democratizing AI.
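A back-of-the-envelope estimate shows why an 8-bit version matters on consumer cards. The sketch below counts only the bytes needed to store the transformer weights at different precisions. It ignores activations, the text encoder, the VAE, and any offloading, so actual memory use will differ; `weight_memory_gb` is a hypothetical helper and the parameter counts are the nominal 2B and 13B figures.

```python
BYTES_PER_PARAM = {"float32": 4, "bfloat16": 2, "fp8": 1}


def weight_memory_gb(num_params, dtype):
    """Approximate weight storage in decimal gigabytes (weights only)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


for name, params in (("2B", 2_000_000_000), ("13B", 13_000_000_000)):
    for dtype in ("float32", "bfloat16", "fp8"):
        print(f"{name} {dtype}: {weight_memory_gb(params, dtype):.1f} GB")
```

Under these assumptions the 13B weights take about 26 GB in bfloat16 and about 13 GB in 8-bit form. Since an RTX 4090 has 24 GB of VRAM, halving the weight footprint leaves headroom that the bfloat16 weights alone would not.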

New Milestone in Video Generation

AIbase believes the release of LTX-Video13B marks a new high point for open-source video generation. Its multi-scale rendering and its optimization for consumer-grade hardware reduce AI video generation's traditional dependence on high-end devices, putting professional-level tools in the hands of small and medium-sized teams and individual creators. With support from the open-source community, LTX-Video13B is expected to drive further innovation in video generation, with broad applications in film, gaming, advertising, and education.

Currently, LTX-Video13B is integrated into the LTX Studio platform, and users can access the model and documentation through the official website (https://ltx.studio) or GitHub (https://github.com/Lightricks/LTX-Video). Lightricks plans to continue optimizing the model, supporting more control types and multimodal functions, providing creators with more powerful tools.

Future Outlook: Opening a New Chapter in AI Video Creation