Microsoft has quietly open-sourced a "dark horse" real-time speech model: VibeVoice-Realtime-0.5B. It may be one of the lowest-latency, most natural-sounding open-source text-to-speech (TTS) models available today. The voice starts before you have even finished typing!

Ultra-low latency: Speech starts in 300 ms

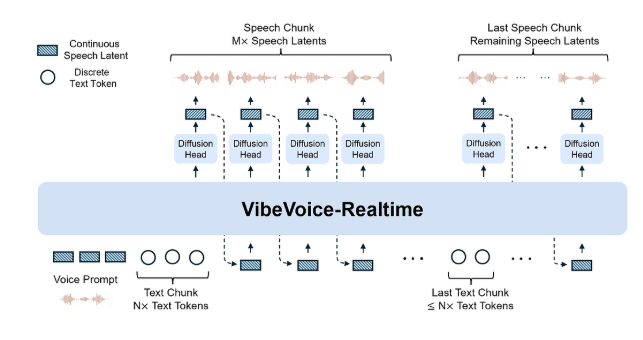

VibeVoice-Realtime-0.5B's most impressive feature is its "near-zero latency." On average, only 300 milliseconds pass between text input and the first audible sound, far less than the 1-3 seconds typical of traditional TTS. The experience resembles a live conversation: the reply begins almost as soon as you type, with none of the lag of a "generate first, then play" pipeline.
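For readers who want to check a figure like this themselves, the usual metric is time to first audio: the delay between submitting text and receiving the first audio chunk. The sketch below shows one way to measure it in Python. It is not VibeVoice's API: `fake_streaming_tts` is a hypothetical stand-in that yields silent chunks, and `time_to_first_audio` is an illustrative helper that accepts any function returning an iterable of audio chunks.

```python
import time
from typing import Callable, Iterable, Iterator


def fake_streaming_tts(text: str, chunk_delay_s: float = 0.01) -> Iterator[bytes]:
    """Hypothetical stand-in for a streaming TTS engine (not a real API)."""
    for _ in text.split():
        time.sleep(chunk_delay_s)   # pretend the model is generating audio
        yield b"\x00\x00" * 240     # 240 silent 16-bit PCM samples per chunk


def time_to_first_audio(synthesize: Callable[[str], Iterable[bytes]], text: str) -> float:
    """Return the seconds elapsed from the request to the first non-empty chunk."""
    start = time.perf_counter()
    for chunk in synthesize(text):
        if chunk:
            return time.perf_counter() - start
    raise RuntimeError("the engine produced no audio")


if __name__ == "__main__":
    latency = time_to_first_audio(fake_streaming_tts, "Hello there, how are you today?")
    print(f"time to first audio: {latency * 1000:.0f} ms")
```

To measure a real engine, replace `fake_streaming_tts` with a wrapper around its streaming call and average the result over several prompts, since a single run can be skewed by warm-up costs.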

Not afraid of long texts: Generate 90 minutes of smooth audio at once

Don't let the 0.5B parameter count fool you: the model can generate up to 90 minutes of continuous audio without slowing down, distorting, or repeating itself, and its natural intonation sounds like a professional announcer. It has already been put through its paces on Hugging Face, where users have even fed it the entire first chapter of "The Three-Body Problem" and had it read through to the end without any distortion.

Multi-character dialogue magic: Perfectly reproduce a 4-person podcast

The model natively supports up to four speakers in a single conversation, with each one keeping a distinct and stable voice, pace, and tone. Take a simulated podcast interview: the host is calm, guest A is excited, guest B is humorous, and guest C is slightly apologetic. As they take turns speaking, the voices never bleed into one another and the emotional transitions stay smooth. It is truly the "ceiling of AI voice acting groups."

Emotional expression at maximum: Automatically identify anger, excitement, and apology

Thanks to its built-in emotional perception module, VibeVoice can automatically add corresponding emotions based on the text's meaning:

- When seeing "I'm sorry," it naturally adds an apologetic tone.

- When encountering "That's great!" it instantly becomes excited.

- Even for a sentence like "I'm very angry," it lowers the pitch and speeds up the delivery.

No need for manual emotion tags—ready to use out of the box.

Supports both Chinese and English, with Chinese having room for improvement

The model can read mixed Chinese and English text. Its English performance is close to commercial quality. Its Chinese pronunciation is accurate and natural, but it still has room to improve on polyphonic characters (characters with more than one reading) and neutral tones. The official team has said that a specially tuned Chinese version will be released later.

Lightweight design: Easily fits into phones and edge devices

With only 0.5B parameters, the model uses less than 2 GB of VRAM during inference and can run at full real-time speed on an ordinary laptop. Developers have already integrated it into local AI assistants, reading apps, and real-time simultaneous interpretation tools. In the future, it may become the "standard for local AI voice."
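A back-of-the-envelope calculation shows why a footprint under 2 GB is plausible for a model of this size. The Python sketch below estimates the memory needed for the weights alone at several numeric precisions. It assumes a round 0.5 billion parameters and counts 1 GB as 10^9 bytes. The precision the released checkpoint actually uses is not stated in this article, and real inference also needs memory for activations and caches, so treat the numbers as a lower bound.

```python
# Bytes needed to store one parameter at common numeric precisions.
BYTES_PER_PARAM = {"float32": 4, "float16": 2, "bfloat16": 2, "int8": 1}


def weight_memory_gb(num_params: float, dtype: str) -> float:
    """Estimate the memory for model weights only, in GB (1 GB = 1e9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    num_params = 0.5e9  # assumption: exactly 0.5 billion parameters
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype:>8}: {weight_memory_gb(num_params, dtype):.2f} GB")
    # float32: 2.00 GB, float16: 1.00 GB, bfloat16: 1.00 GB, int8: 0.50 GB
```

At half precision the weights take about 1 GB, which leaves headroom for runtime buffers within a 2 GB budget. At full 32-bit precision the weights alone would already reach 2 GB.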

VibeVoice-Realtime-0.5B is now fully open-sourced on Hugging Face and GitHub under the MIT license, which permits commercial use. The community has already built many demos: some people have used it to create a "type and read" WeChat voice input tool, while others have connected it directly to large language models to achieve true end-to-end real-time voice conversation.

AIbase Report Comment:

While the open-source community was busy competing over TTS models with 10B+ parameters, Microsoft surprised everyone by using a 0.5B model to bring real-time, natural, long-form, and multi-character speech close to commercial quality. This move is nothing short of a "dimensionality-reduction strike," winning by changing the terms of the contest. Next, we'll see how China's tech giants respond.

Project Address: https://microsoft.github.io/VibeVoice/