Today, Meta AI Lab turned Llama 3.1 into an "X-ray machine" for reasoning. The new model, CoT-Verifier, is now available on Hugging Face. It dissects the "circuit path" behind each step of a chain-of-thought (CoT), so that errors no longer stay hidden in a black box.

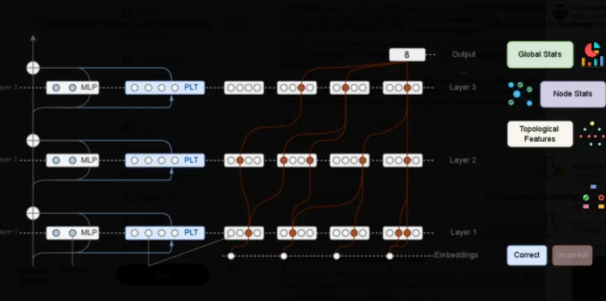

Traditional verification only checks whether the output is correct. Meta takes a different approach: it first runs a forward pass through the model, then extracts an attribution graph for each reasoning step. The team found that the graph structures of correct and incorrect reasoning differ significantly, like two completely different circuit boards. A lightweight classifier trained on these "graph features" reaches state-of-the-art accuracy in predicting erroneous steps. Each task type (math, logic, common sense) also has its own "fault signature," which indicates that reasoning failures are not random noise but quantifiable, classifiable computational patterns.
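To make the "graph features plus lightweight classifier" idea concrete, here is a minimal sketch. It is not Meta's implementation: the edge-list format, the four features, and the nearest-centroid rule are all assumptions chosen for illustration, and the names `graph_features`, `centroid`, and `predict` are hypothetical.

```python
from statistics import mean

# ASSUMPTION: an attribution graph for one CoT step is a list of
# (source_node, target_node, attribution_weight) edges. This format is
# illustrative only and is not the one used by CoT-Verifier.

def graph_features(edges):
    """Summarise a graph as [node count, edge count, density, mean |weight|]."""
    nodes = {n for src, dst, _ in edges for n in (src, dst)}
    n_nodes, n_edges = len(nodes), len(edges)
    max_edges = n_nodes * (n_nodes - 1)  # directed graph without self-loops
    density = n_edges / max_edges if max_edges else 0.0
    mean_abs_weight = mean(abs(w) for _, _, w in edges) if edges else 0.0
    return [float(n_nodes), float(n_edges), density, mean_abs_weight]

def centroid(rows):
    """Column-wise mean of a list of equally long feature vectors."""
    return [mean(col) for col in zip(*rows)]

def predict(features, centroid_correct, centroid_incorrect):
    """Nearest-centroid rule: label a step by the closer class centroid."""
    def sq_dist(a, b):
        return sum((x - y) ** 2 for x, y in zip(a, b))
    if sq_dist(features, centroid_correct) <= sq_dist(features, centroid_incorrect):
        return "correct"
    return "incorrect"
```

In this sketch, the two centroids would be computed once from labelled steps (for example, `centroid([graph_features(g) for g in correct_graphs])`), after which `predict` labels each new step. A real verifier would likely use richer features and a trained classifier; the nearest-centroid rule is only the simplest stand-in.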

More importantly, the attribution graph can be used not only to "diagnose" but also to "operate." In experiments, Meta applied targeted ablation or weight shifting to highly suspect nodes and improved Llama 3.1's accuracy on the MATH dataset by 4.2 percentage points without retraining the main model. In other words, CoT-Verifier turns reasoning error correction from "post-mortem analysis" into "intra-operative navigation."
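As a rough illustration of what "ablation" and "weight shifting" mean, the toy sketch below operates on an edge list rather than on model internals. The function name `intervene`, the suspicion scores, and the edge format are hypothetical; the actual intervention in the paper acts on the model's computation, which this example does not reproduce.

```python
def intervene(edges, suspicion, top_k=1, scale=0.0):
    """Rescale every edge leaving the top_k most suspect nodes.

    edges:     list of (source, target, weight) tuples (assumed format)
    suspicion: dict mapping node name -> suspicion score (assumed input)
    scale:     0.0 ablates the edge; a value between 0 and 1 down-weights it
    """
    ranked = sorted(suspicion, key=suspicion.get, reverse=True)
    suspects = set(ranked[:top_k])
    return [
        (src, dst, w * scale if src in suspects else w)
        for src, dst, w in edges
    ]

# Usage: ablate the single most suspect node, then only halve its influence.
edges = [("a", "c", 1.0), ("b", "c", 2.0)]
suspicion = {"a": 0.1, "b": 0.9}
ablated = intervene(edges, suspicion)             # edge from "b" set to 0.0
shifted = intervene(edges, suspicion, scale=0.5)  # edge from "b" halved
```

The design point the sketch captures is that the base weights are left untouched: the intervention is applied to selected paths at inference time, which is why no retraining is involved.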

The model is open source and ships with scripts for one-click reproduction. Developers only need to feed the CoT path they want to verify into the Verifier; it returns a "structural anomaly score" for each step and locates the most likely faulty upstream node. At the end of the paper, Meta states that the next step is to apply the same graph intervention approach to code generation and multimodal reasoning, making "white-box surgery" the new standard for LLMs.
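The per-step output described above could look something like the following sketch. It does not use the released scripts or their API, which are not shown here. It assumes each step is already summarised as a numeric feature vector, scores a step by its mean absolute z-score against reference steps known to be correct, and picks the upstream node with the largest total absolute outgoing attribution. Both heuristics and the names `anomaly_scores` and `most_suspect_upstream` are hypothetical.

```python
from statistics import mean, pstdev

def anomaly_scores(step_features, reference_features):
    """Mean absolute z-score of each step against known-correct reference steps."""
    columns = list(zip(*reference_features))
    mus = [mean(col) for col in columns]
    # Fall back to 1.0 when a feature has zero variance to avoid division by zero.
    sigmas = [pstdev(col) or 1.0 for col in columns]
    return [
        mean(abs(x - m) / s for x, m, s in zip(features, mus, sigmas))
        for features in step_features
    ]

def most_suspect_upstream(edges):
    """Return the source node with the largest total |attribution|, or None."""
    totals = {}
    for src, _dst, weight in edges:
        totals[src] = totals.get(src, 0.0) + abs(weight)
    return max(totals, key=totals.get) if totals else None

# Usage with made-up numbers: two reference steps, two steps to verify.
reference = [[1.0, 10.0], [3.0, 10.0]]
steps = [[2.0, 10.0], [5.0, 12.0]]
scores = anomaly_scores(steps, reference)
flagged_step = scores.index(max(scores))
culprit = most_suspect_upstream([("a", "c", 0.2), ("b", "c", -0.9), ("a", "d", 0.3)])
```

A higher score means the step's graph looks less like the reference graphs; the flagged step's own edge list would then be passed to `most_suspect_upstream` to narrow down where the fault likely originates.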