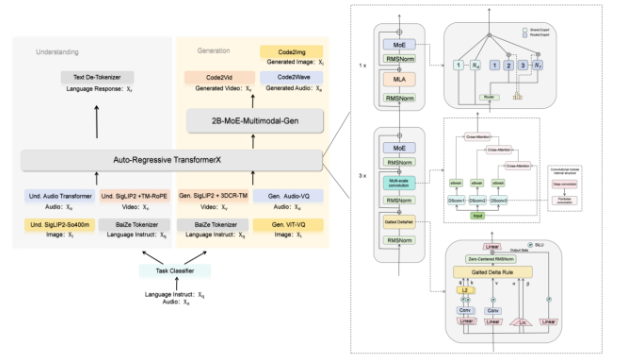

At the 2025 World Computing Conference, Kunlun Yuan AI officially launched BaiZe-Omni-14b-a2b, a full-modal fusion model built on the Ascend platform. The model is designed to both understand and generate text, audio, images, and video. Its architecture combines modality-decoupled encoding, unified cross-modal fusion, and a dual-branch functional design, with the aim of advancing multimodal applications.

The design of BaiZe-Omni-14b-a2b covers input processing, modality adaptation, cross-modal fusion, core functions, and output decoding. To improve computational efficiency, the model adds a multi-linear attention layer and a single-layer hybrid attention aggregation layer to its MoE+TransformerX architecture, which is intended to make large-scale full-modal applications practical to deploy. The dual-branch design supports both understanding and generation, allowing the model to handle up to 10 types of tasks and to generate multimodal content.
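The article names an MoE (mixture-of-experts) architecture but does not describe how BaiZe-Omni routes inputs to experts. As a generic illustration of the MoE idea only, here is a minimal top-k gating sketch in plain Python. The router weights, the toy experts, and `k=2` are hypothetical and are not taken from the model.

```python
import math
from typing import Callable, List, Sequence

Vector = List[float]
Matrix = List[List[float]]


def matvec(m: Matrix, v: Sequence[float]) -> Vector:
    """Multiply a (rows x cols) matrix by a length-cols vector."""
    return [sum(w * x for w, x in zip(row, v)) for row in m]


def softmax(logits: Sequence[float]) -> Vector:
    """Numerically stable softmax."""
    peak = max(logits)
    exps = [math.exp(z - peak) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def moe_forward(
    x: Sequence[float],
    gate: Matrix,  # hypothetical router: one row of weights per expert
    experts: List[Callable[[Sequence[float]], Vector]],
    k: int = 2,
) -> Vector:
    """Route x to its top-k experts and mix their outputs by gate weight."""
    logits = matvec(gate, x)  # one routing score per expert
    top = sorted(range(len(experts)), key=lambda i: logits[i], reverse=True)[:k]
    weights = softmax([logits[i] for i in top])  # renormalise over the chosen experts only
    out = [0.0] * len(x)
    for w, i in zip(weights, top):
        y = experts[i](x)  # only the selected experts are evaluated
        out = [o + w * yi for o, yi in zip(out, y)]
    return out


# Toy usage: four hypothetical experts that simply scale their input.
experts = [lambda v, s=s: [s * a for a in v] for s in (1.0, 2.0, 3.0, 4.0)]
gate = [[0.0, 0.0], [1.0, 0.0], [3.0, 0.0], [0.5, 0.0]]
print(moe_forward([1.0, 0.0], gate, experts, k=2))  # experts 2 and 1 are chosen
```

The point of this design is that compute per input scales with `k`, not with the total number of experts, which is the usual reason MoE models are described as efficient.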

For training, Kunlun Yuan AI assembled a large, high-quality dataset: more than 3.57 trillion tokens of text, more than 300,000 hours of audio, 400 million images, and more than 400,000 hours of video. The data was curated for single-modality purity and cross-modal alignment quality, and the data mixing ratios were varied across training stages so that performance improved progressively from stage to stage.

On core multimodal understanding metrics, BaiZe-Omni-14b-a2b reports a text comprehension accuracy of 89.3%. In long-sequence processing, its ROUGE-L score on a 32,768-token text summarization task is 0.521, compared with 0.487 for the widely used GPT-4, a margin of 0.034 (about 7% relative). The model also supports text generation in multiple languages and multimodal generation of images, audio, and video.
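The article does not say which ROUGE-L variant or tokenizer produced the 0.521 and 0.487 figures. As a reminder of what the metric measures, the sketch below computes the common F1 form of ROUGE-L from the longest common subsequence (LCS) of candidate and reference tokens. The lowercase whitespace tokenization is an assumption for illustration, not the evaluation setup used for BaiZe-Omni.

```python
from typing import List


def lcs_length(a: List[str], b: List[str]) -> int:
    """Length of the longest common subsequence, via O(len(a) * len(b)) DP."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0] * (len(b) + 1)
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr[j] = prev[j - 1] + 1  # extend a common subsequence
            else:
                curr[j] = max(prev[j], curr[j - 1])  # skip a token on one side
        prev = curr
    return prev[-1]


def rouge_l_f1(candidate: str, reference: str) -> float:
    """ROUGE-L F1 using naive lowercase whitespace tokenization (an assumption)."""
    cand = candidate.lower().split()
    ref = reference.lower().split()
    if not cand or not ref:
        return 0.0
    lcs = lcs_length(cand, ref)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand)  # share of the summary found in order in the reference
    recall = lcs / len(ref)      # share of the reference recovered in order
    return 2 * precision * recall / (precision + recall)


print(rouge_l_f1("the cat lay on the mat", "the cat sat on the mat"))
```

Here the LCS is "the cat on the mat" (5 of 6 tokens on each side), so precision, recall, and F1 are all 5/6. Because tokenization, stemming, and the choice of F-measure all shift the score, ROUGE-L numbers are only directly comparable when they come from the same evaluation setup.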

Key Points:

🌐 **Full-modal Capabilities**: BaiZe-Omni-14b-a2b has strong capabilities in text, audio, image, and video understanding and generation.

📈 **Outstanding Performance**: The model performs well in text understanding and long-sequence processing; its ROUGE-L score of 0.521 on 32,768-token summarization is ahead of GPT-4's 0.487.

💡 **Multi-field Applications**: The model is intended to provide technical support for fields such as intelligent customer service and content creation, helping to advance AI technology.