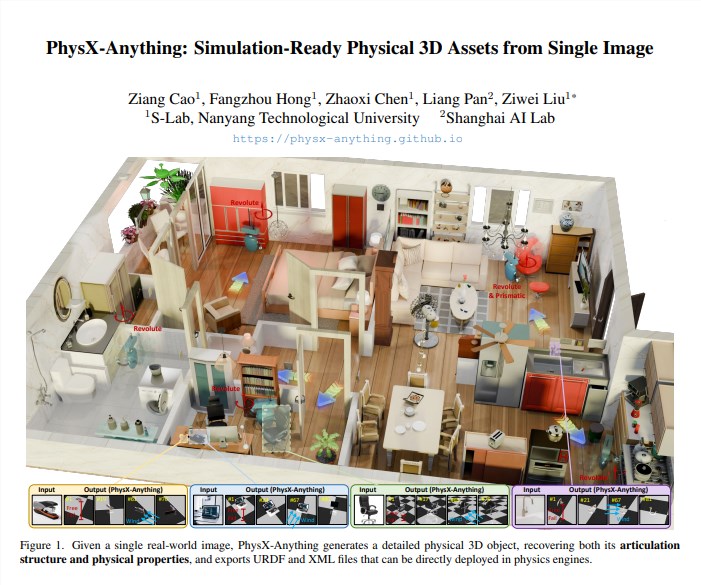

Nanyang Technological University and the Shanghai Artificial Intelligence Lab have jointly released PhysX-Anything, an open-source framework that generates a complete 3D asset, including geometry, joints, and physical parameters, from a single RGB image. The generated assets can be imported directly into MuJoCo and Isaac Sim for robot policy training.
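
To make "directly importable" more concrete, here is a minimal sketch of what a one-joint articulated asset could look like in MuJoCo's MJCF format, built with Python's standard library. The `Part` record, the cabinet layout, the uniform-box inertia formula (standing in for predicted values), and all numbers are hypothetical illustrations; this is not PhysX-Anything's actual export format.

```python
from dataclasses import dataclass
import xml.etree.ElementTree as ET


@dataclass
class Part:
    """Hypothetical per-part record; a real asset would carry predicted values."""
    name: str
    half_size: tuple  # box half-extents (m)
    mass: float       # kg
    friction: float   # sliding friction coefficient


def fmt(values) -> str:
    """Format numbers as MJCF expects: space-separated, compact."""
    return " ".join(f"{v:g}" for v in values)


def box_inertia(mass, half_size):
    """Principal inertia of a uniform solid box (stand-in for predicted inertia)."""
    a, b, c = (2 * h for h in half_size)  # full side lengths
    return (mass * (b * b + c * c) / 12,
            mass * (a * a + c * c) / 12,
            mass * (a * a + b * b) / 12)


def add_part(parent, part, body_pos, geom_pos=(0, 0, 0)):
    body = ET.SubElement(parent, "body", name=part.name, pos=fmt(body_pos))
    # Explicit mass, centre of mass (assumed at the box centre) and inertia.
    ET.SubElement(body, "inertial", pos=fmt(geom_pos), mass=f"{part.mass:g}",
                  diaginertia=fmt(box_inertia(part.mass, part.half_size)))
    # MJCF friction has three components: sliding, torsional, rolling.
    ET.SubElement(body, "geom", type="box", pos=fmt(geom_pos),
                  size=fmt(part.half_size),
                  friction=fmt((part.friction, 0.005, 0.0001)))
    return body


def cabinet_mjcf(base, door, hinge_pos, hinge_axis, range_deg):
    root = ET.Element("mujoco", model="cabinet")
    ET.SubElement(root, "compiler", angle="degree")
    world = ET.SubElement(root, "worldbody")
    # The base has no joint, so MuJoCo welds it to the world.
    base_body = add_part(world, base, body_pos=(0, 0, base.half_size[2]))
    # The door body frame sits on the hinge line; its box is offset sideways.
    door_body = add_part(base_body, door, body_pos=hinge_pos,
                         geom_pos=(0, door.half_size[1], 0))
    ET.SubElement(door_body, "joint", name="door_hinge", type="hinge",
                  axis=fmt(hinge_axis), limited="true", range=fmt(range_deg))
    return ET.tostring(root, encoding="unicode")


base = Part("base", half_size=(0.2, 0.2, 0.3), mass=8.0, friction=0.8)
door = Part("door", half_size=(0.01, 0.2, 0.3), mass=2.0, friction=0.6)
xml_str = cabinet_mjcf(base, door, hinge_pos=(0.21, -0.2, 0.0),
                       hinge_axis=(0, 0, 1), range_deg=(0, 90))
```

If the `mujoco` Python package is installed, `mujoco.MjModel.from_xml_string(xml_str)` should compile the result. Joint limits are written in degrees because of the `compiler angle="degree"` setting.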

Technical Highlights

1. Coarse-to-fine pipeline: The model first predicts object-level physical properties (mass, center of mass, friction coefficient), then refines geometry and joint limits at the component level. This avoids the physical distortion that arises when visual appearance is prioritized over physical plausibility.

2. New compressed 3D representation: Faces, joint axes, and physical properties are encoded into a single 8K-dimensional latent vector, which is decoded in one pass at inference time, yielding a 2.3x speedup over state-of-the-art methods.

3. Explicit physical supervision: The dataset is augmented with 120,000 sets of real physical measurements, and center-of-mass, inertia, and collision-box losses are introduced during training to keep the generated assets consistent in simulation.
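
The article does not give the exact form of the center-of-mass, inertia, and collision-box losses. As a rough illustration only, one plausible set of terms is sketched below in plain Python: squared center-of-mass distance, mean relative inertia error, and 1 - IoU of axis-aligned collision boxes. The `PhysParams` record, the choice of each term, and the weights are all assumptions, not the paper's formulation.

```python
import math
from dataclasses import dataclass


@dataclass
class PhysParams:
    """Hypothetical per-object physical targets/predictions."""
    com: tuple       # centre of mass (x, y, z), metres
    inertia: tuple   # principal moments (Ixx, Iyy, Izz), kg*m^2
    box_min: tuple   # axis-aligned collision box, min corner
    box_max: tuple   # axis-aligned collision box, max corner


def com_loss(pred, gt):
    """Squared Euclidean distance between centres of mass."""
    return sum((p - g) ** 2 for p, g in zip(pred.com, gt.com))


def inertia_loss(pred, gt, eps=1e-8):
    """Mean relative error of the principal moments (scale-invariant)."""
    return sum(abs(p - g) / (abs(g) + eps)
               for p, g in zip(pred.inertia, gt.inertia)) / 3


def box_volume(lo, hi):
    """Volume of an axis-aligned box; zero if the box is empty."""
    return math.prod(max(h - l, 0.0) for l, h in zip(lo, hi))


def box_loss(pred, gt):
    """1 - IoU of the axis-aligned collision boxes."""
    inter_lo = [max(a, b) for a, b in zip(pred.box_min, gt.box_min)]
    inter_hi = [min(a, b) for a, b in zip(pred.box_max, gt.box_max)]
    inter = box_volume(inter_lo, inter_hi)
    union = (box_volume(pred.box_min, pred.box_max)
             + box_volume(gt.box_min, gt.box_max) - inter)
    return 1.0 - inter / union if union > 0 else 1.0


def physics_loss(pred, gt, w_com=1.0, w_inertia=0.1, w_box=1.0):
    """Weighted sum of the three terms (weights are illustrative)."""
    return (w_com * com_loss(pred, gt)
            + w_inertia * inertia_loss(pred, gt)
            + w_box * box_loss(pred, gt))
```

In an actual training loop these terms would be written in a differentiable framework. Note that plain 1 - IoU gives no gradient when the boxes do not overlap, which is one reason variants such as generalized IoU (GIoU) are often used instead.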

Test Results

On the Geometry-Chamfer and Physics-Error metrics, PhysX-Anything reduces error by 18% and 27%, respectively. Absolute scale error is below 2 cm and joint range-of-motion error is below 5°, significantly outperforming recent methods such as ObjPhy and PhySG. In real-world scenarios (IKEA furniture, kitchen tools), importing the generated assets into Isaac Sim increased the robot's grasping success rate by 12% and reduced the number of training steps by 30%.
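
The article does not say exactly how Geometry-Chamfer is computed. A common convention, assumed here, is the symmetric Chamfer distance between point clouds sampled from the predicted and reference surfaces. The sketch below uses brute-force nearest neighbours and mean squared distances (some papers use unsquared distances or sums instead). The hypothetical `relative_reduction` helper shows how a figure such as "18% lower error" follows from two raw scores.

```python
import math


def chamfer_distance(a, b):
    """Symmetric Chamfer distance: mean squared nearest-neighbour distance
    from a to b plus the same from b to a (brute force, O(len(a) * len(b)))."""
    def one_way(src, dst):
        return sum(min(math.dist(p, q) ** 2 for q in dst) for p in src) / len(src)
    return one_way(a, b) + one_way(b, a)


def relative_reduction(baseline_err, new_err):
    """Fractional error reduction, e.g. 0.18 for an 18% reduction."""
    return (baseline_err - new_err) / baseline_err
```

With purely illustrative numbers, a baseline score of 1.00 against 0.82 for a new method gives `relative_reduction(1.00, 0.82)` of about 0.18. For realistic point counts, a k-d tree or GPU nearest-neighbour search would replace the quadratic loop.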

Open Source and Impact

The project is now available on GitHub, and the weights, data, and evaluation benchmarks are openly accessible as well. The team plans to release version 2 in Q1 2026 with support for video input, predicting the temporal trajectories of movable components to support policy learning in dynamic scenes.

Paper: https://arxiv.org/pdf/2511.13648