Maya Research has released Maya1, a 3-billion-parameter text-to-speech model. It turns text plus a short voice description into controllable, expressive speech and can run in real time on a single GPU. Its core aim is to capture genuine human emotion and precise sound design.

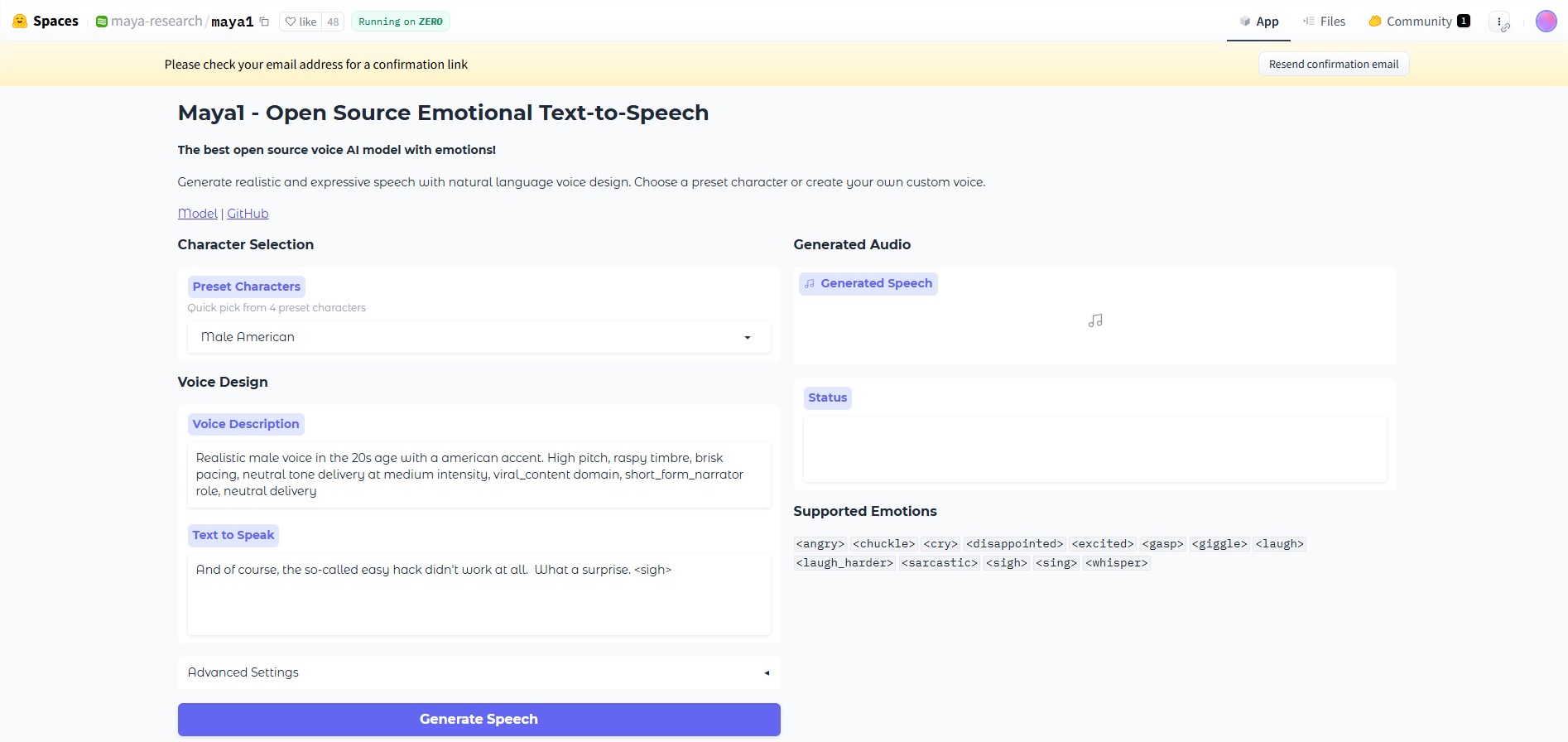

Maya1 takes two inputs: a natural-language description of the voice and the text to be spoken. For example, a user might enter "a woman in her 20s, British accent, energetic, clear pronunciation" or "a demon character, male voice, low pitch, hoarse tone, slow pace". The model combines the two signals to generate audio whose content follows the text and whose style follows the description. Users can also insert emotion tags such as `<laugh>`, `<sigh>`, and `<whisper>` directly into the text; more than 20 emotions are available.
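
The two-input interface can be illustrated with a small Python sketch that merges a voice description and the text to speak into one prompt string and extracts the inline emotion tags. The `<description="...">` wrapper, the function names, and the three-entry `KNOWN_TAGS` set are all hypothetical; this is not Maya1's confirmed prompt template or full tag list.

```python
import re

# Hypothetical subset of emotion tags. The article names <laugh>, <sigh>, and
# <whisper> and says more than 20 exist; the full list is not reproduced here.
KNOWN_TAGS = {"laugh", "sigh", "whisper"}

# Matches simple tags like <laugh>; it does not match <description="...">
# because the "=" breaks the [a-z_]+ run before the closing ">".
TAG_PATTERN = re.compile(r"<([a-z_]+)>")


def build_prompt(description: str, text: str) -> str:
    """Combine a voice description and the text to speak into one string.

    The <description="..."> wrapper is an assumed format for illustration only.
    """
    return f'<description="{description.strip()}"> {text.strip()}'


def find_emotion_tags(text: str) -> list[str]:
    """Return the inline emotion tags in order of appearance."""
    return TAG_PATTERN.findall(text)


def unknown_tags(text: str, known: set[str] = KNOWN_TAGS) -> list[str]:
    """Return tags that are not in the (hypothetical) known-tag set."""
    return [tag for tag in find_emotion_tags(text) if tag not in known]


if __name__ == "__main__":
    desc = "a woman in her 20s, British accent, energetic, clear pronunciation"
    line = "That was brilliant <laugh> but let me tell you a secret <whisper> ..."
    print(build_prompt(desc, line))
    print(find_emotion_tags(line))  # ['laugh', 'whisper']
```

Validating tags before generation is a cheap way to catch typos such as `<laugth>`, which a model would otherwise likely read as literal text.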

Maya1 outputs 24 kHz mono audio and supports real-time streaming, which makes it well suited to voice assistants, interactive agents, games, podcasts, and live content. The Maya Research team claims the model outperforms many leading proprietary systems. It is fully open source under the Apache 2.0 license.
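
Streaming 24 kHz mono audio comes down to simple arithmetic. The sketch below assumes 16-bit PCM samples (the article does not specify a sample format) and uses a hypothetical `chunk_samples` helper to split audio into fixed-duration chunks for sending to a client.

```python
SAMPLE_RATE = 24_000  # Hz, as stated for Maya1's output
CHANNELS = 1          # mono
BYTES_PER_SAMPLE = 2  # assumption: 16-bit PCM on the wire


def bytes_per_second(rate: int = SAMPLE_RATE,
                     channels: int = CHANNELS,
                     width: int = BYTES_PER_SAMPLE) -> int:
    """Raw PCM bandwidth needed to stream audio in real time."""
    return rate * channels * width


def chunk_samples(samples, chunk_ms: int = 100, rate: int = SAMPLE_RATE):
    """Yield consecutive slices of `samples`, each covering `chunk_ms` of audio.

    The final chunk may be shorter. `samples` is any sliceable sequence.
    """
    size = rate * chunk_ms // 1000  # 100 ms at 24 kHz -> 2400 samples
    for start in range(0, len(samples), size):
        yield samples[start:start + size]


if __name__ == "__main__":
    print(bytes_per_second())  # 48000
    one_second = [0] * SAMPLE_RATE
    print([len(c) for c in chunk_samples(one_second)])  # ten chunks of 2400
```

Smaller chunks lower the time to first audio but add per-message overhead; 100 ms is only an illustrative default.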

Architecturally, Maya1 is a decoder-only transformer with a Llama-style design. Instead of predicting the raw waveform, it predicts the discrete codes of SNAC, a neural audio codec, which are then decoded back into audio. Generation therefore runs in three stages: text processing, code generation, and audio decoding. This pipeline improves generation efficiency and makes the system easier to scale.
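
SNAC represents audio as several codebook levels at different time resolutions, while a decoder-only language model emits a single flat token stream, so the levels must be interleaved. The sketch below assumes three levels in a 1:2:4 ratio packed into 7-token frames, an interleaving used by some SNAC-based TTS models; whether Maya1 uses exactly this order is an assumption, and `pack_frames` and `unpack_frames` are hypothetical names.

```python
def pack_frames(l0: list[int], l1: list[int], l2: list[int]) -> list[int]:
    """Interleave three codebook levels into one flat token list.

    Assumes a 1:2:4 coarse/middle/fine ratio, so each coarse step
    becomes one 7-token frame.
    """
    if len(l1) != 2 * len(l0) or len(l2) != 4 * len(l0):
        raise ValueError("expected level sizes in ratio 1:2:4")
    tokens: list[int] = []
    for i in range(len(l0)):
        tokens += [l0[i], l1[2 * i], l2[4 * i], l2[4 * i + 1],
                   l1[2 * i + 1], l2[4 * i + 2], l2[4 * i + 3]]
    return tokens


def unpack_frames(tokens: list[int]) -> tuple[list[int], list[int], list[int]]:
    """Invert pack_frames: split a flat token list back into three levels."""
    if len(tokens) % 7:
        raise ValueError("token count must be a multiple of 7")
    l0: list[int] = []
    l1: list[int] = []
    l2: list[int] = []
    for f in range(0, len(tokens), 7):
        a, b, c, d, e, g, h = tokens[f:f + 7]
        l0.append(a)
        l1 += [b, e]
        l2 += [c, d, g, h]
    return l0, l1, l2


if __name__ == "__main__":
    levels = ([1, 2], [10, 11, 12, 13], list(range(20, 28)))
    flat = pack_frames(*levels)
    print(flat)
    print(unpack_frames(flat) == levels)  # True
```

In a real pipeline the unpacked levels would be handed to the SNAC decoder to reconstruct the waveform; that step is omitted here.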

Maya1 was first trained on an internet-scale English speech corpus to gain broad acoustic coverage and natural-sounding coherence. It was then fine-tuned on a curated proprietary dataset of verified human speech descriptions paired with a variety of emotion tags.

For single-GPU inference and deployment, the team recommends a GPU with at least 16 GB of memory, such as an A100, H100, or RTX 4090. Maya Research also provides tools and scripts for real-time audio generation and streaming.
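
A back-of-the-envelope estimate helps explain the 16 GB recommendation. Assuming exactly 3 billion parameters, the weights alone take about 6 GB in bf16; the KV cache, activations, and the SNAC decoder need memory on top of that. The helper names below are illustrative.

```python
PARAMS = 3_000_000_000  # "3 billion parameters", rounded as in the article

# Storage cost per parameter for common numeric formats.
BYTES_PER_PARAM = {"fp32": 4, "bf16": 2, "fp16": 2, "int8": 1}


def weight_memory_gb(params: int = PARAMS, dtype: str = "bf16") -> float:
    """Memory for the model weights alone, in decimal gigabytes (1e9 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 1e9


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gb(dtype=dtype):.1f} GB")
```

Only the weights are counted here; real usage also depends on the inference framework, context length, and batch size.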

Hugging Face: https://huggingface.co/spaces/maya-research/maya1

Key points:

🎤 Maya1 is an open-source, 3-billion-parameter text-to-speech model that generates expressive audio in real time.

💡 The model combines a natural-language voice description with the input text and supports more than 20 emotion tags for more expressive speech.

🚀 Maya1 can run on a single GPU and provides various tools to support efficient inference and deployment.