Google Maps is evolving from a navigation tool into an AI-driven spatial intelligence platform. Google recently announced that it is integrating its Gemini models throughout the Maps ecosystem and launched three core AI capabilities: Builder Agent, an MCP (Model Context Protocol) server, and Grounding Lite. Together they let developers create interactive map applications with little or no hand-written code, and give everyday users smarter, context-aware services.

Builder Agent: generate interactive maps from a single sentence

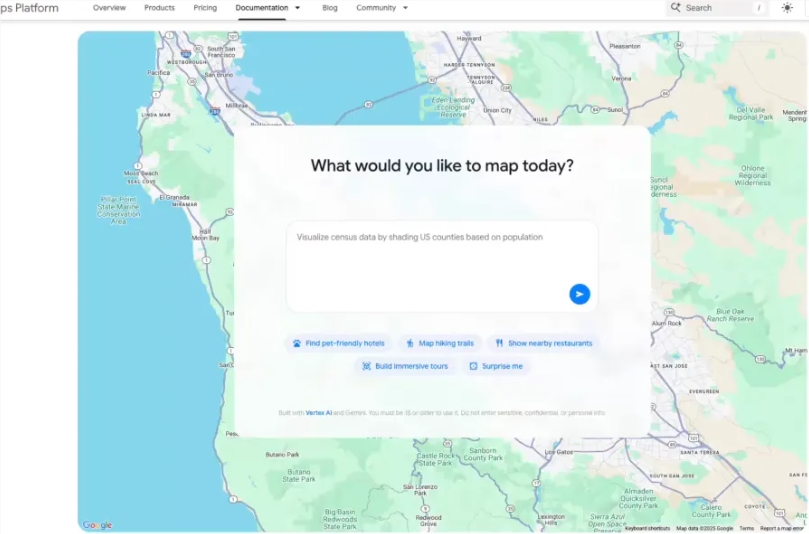

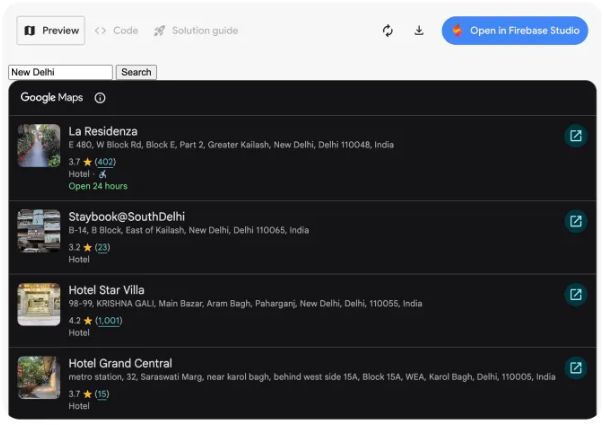

The new Builder Agent brings map development into the "natural language era." Developers simply type a request such as "Create a city street view tour," "Visualize real-time weather in my area," or "List all pet-friendly hotels in the city," and the AI generates a complete code prototype. The project can be previewed and edited directly in Firebase Studio, then exported or deployed with the developer's own Google Maps API key. A companion Styling Agent can automatically adapt map color schemes and UI styles to a brand's visual identity, helping companies quickly build geographic information products with a consistent look.
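To make "customizing map color schemes" more concrete, here is a minimal sketch that turns a few brand colors into a style array in the classic JSON styling format accepted by the Maps JavaScript API (`featureType`, `elementType`, `stylers`). The article does not say what format the Styling Agent actually emits, so the function name `brand_map_style` and its mapping of colors to map features are hypothetical choices for illustration only.

```python
import json


def brand_map_style(primary: str, background: str, water: str) -> list[dict]:
    """Build a JSON map-style array from a few brand colors.

    Hypothetical mapping: background -> base geometry, primary -> roads,
    water -> water bodies. A real styling tool may choose differently.
    """
    return [
        # No featureType means the rule applies to all features' geometry.
        {"elementType": "geometry", "stylers": [{"color": background}]},
        # Draw roads in the brand's primary color.
        {"featureType": "road", "elementType": "geometry", "stylers": [{"color": primary}]},
        # Give water bodies their own accent color.
        {"featureType": "water", "elementType": "geometry", "stylers": [{"color": water}]},
        # Hide points of interest to keep the branded map uncluttered.
        {"featureType": "poi", "stylers": [{"visibility": "off"}]},
    ]


style = brand_map_style("#e4002b", "#f5f5f5", "#a0c4ff")
print(json.dumps(style, indent=2))
```

In a web app, an array like this would be passed as the `styles` map option; Google also offers cloud-based map styling, which stores styles on the server instead of in code.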

MCP Server: AI assistants connect directly to Google Maps documentation

A deeper innovation is the MCP (Model Context Protocol) server. Built on this open protocol, it lets AI assistants query the complete Google Maps technical documentation directly. For example, a developer who asks "How do I use the API to avoid toll roads?" gets precise code examples and parameter explanations in return. Together with the Gemini command-line (CLI) extension released last month, it further lowers the barrier to map development.
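As an illustration of the kind of answer such a question might produce, the sketch below builds (but does not send) a request for the Google Maps Platform Routes API `computeRoutes` method with `routeModifiers.avoidTolls` set. The helper name `build_toll_free_route_request`, the addresses, and the placeholder key are assumptions for this example; it is not output from the MCP server itself.

```python
import json

# Routes API endpoint; this sketch only builds the request and never sends it.
ROUTES_URL = "https://routes.googleapis.com/directions/v2:computeRoutes"


def build_toll_free_route_request(origin: str, destination: str, api_key: str) -> tuple[dict, dict]:
    """Return (headers, body) for a driving route that prefers toll-free roads."""
    headers = {
        "Content-Type": "application/json",
        "X-Goog-Api-Key": api_key,
        # The field mask limits the response to the fields we actually need.
        "X-Goog-FieldMask": "routes.duration,routes.distanceMeters",
    }
    body = {
        "origin": {"address": origin},
        "destination": {"address": destination},
        "travelMode": "DRIVE",
        # avoidTolls asks the router to avoid toll roads where reasonable.
        "routeModifiers": {"avoidTolls": True},
    }
    return headers, body


headers, body = build_toll_free_route_request(
    "Mountain View, CA", "San Francisco, CA", "YOUR_API_KEY"
)
print(json.dumps(body, indent=2))
```

Sending it is a single HTTP POST of `body` as JSON to `ROUTES_URL` with these headers, for example with the `requests` library. Keeping request construction separate makes it easy to inspect or test without network access.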

Grounding Lite: making AI answers "visible"

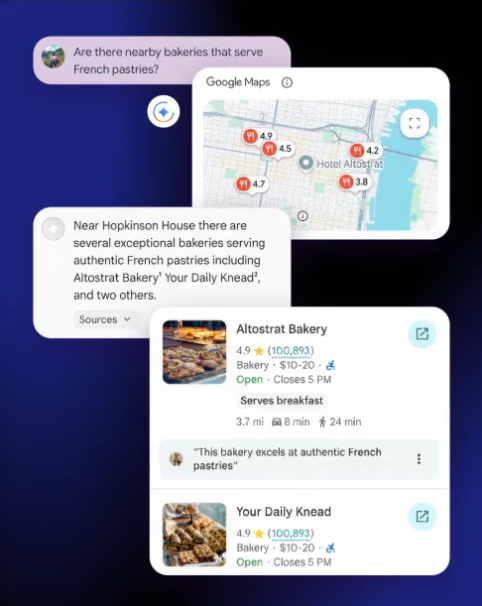

For consumers, Google has introduced Grounding Lite, which lets third-party AI models access Google Maps data via MCP to deliver high-precision answers to location questions. For example, when a user asks "How far is the nearest supermarket?", the AI not only gives the distance but can also show the answer visually through the Contextual View component, as a list, a 2D map, or a 3D street view, achieving "what you ask is what you see."
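Under MCP, an AI client asks a server to run a tool by sending a JSON-RPC 2.0 `tools/call` request, and the server replies with a result whose `content` is a list of typed parts. The minimal sketch below shows that message shape. The tool name `search_places` and its arguments are hypothetical placeholders, since the article does not list the tools Grounding Lite exposes, and the sample response is made up purely to exercise the parser.

```python
import json
from itertools import count

_ids = count(1)  # JSON-RPC request ids should be unique within a session


def make_tool_call(tool_name: str, arguments: dict) -> str:
    """Serialize an MCP `tools/call` request as a JSON-RPC 2.0 message."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


def extract_text(response_json: str) -> list[str]:
    """Return the text parts of a tool result, raising on a JSON-RPC error."""
    response = json.loads(response_json)
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "unknown error"))
    return [
        part["text"]
        for part in response["result"]["content"]
        if part.get("type") == "text"
    ]


# Hypothetical tool and arguments, for illustration only.
msg = make_tool_call("search_places", {"query": "nearest supermarket"})
print(msg)

# Made-up response in the shape MCP defines for tool results.
sample = json.dumps(
    {"jsonrpc": "2.0", "id": 1,
     "result": {"content": [{"type": "text", "text": "example result"}]}}
)
print(extract_text(sample))
```

A client would then hand the extracted text to the model, and a UI component such as Contextual View would render the location results for the user.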

Consumer-side upgrades: hands-free Gemini navigation and new safety alerts in India

On the consumer side, drivers can now talk to Gemini in Google Maps by voice without activating it manually; saying "Take me to a gas station" while driving is enough to trigger it. Google has also added accident alerts and real-time speed limit notifications in some areas of India to improve road safety.

AIbase believes this upgrade marks the evolution of map services from "location query tools" into "spatial intelligence hubs." For developers, Maps becomes a low-code AI development platform; for users, an embodied AI agent; and for the ecosystem, a bridge between the physical world and digital intelligence. When maps can not only "tell you where you are" but also "help you build the world," the future of spatial computing has already begun.