The barrier to audio creation has dropped sharply. Domestic AI unicorn StepFun AI officially launched Step-Audio-EditX, a world-leading LLM-level audio editing model, on November 9th, delivering for the first time the experience of "editing voice with natural language instructions." Users only need to type "change this sentence into the arrogant tone of a Sichuan-Chongqing rapper" or "add a shy laugh at the end," and the model adjusts the voice, emotion, rhythm, and even breathing pauses accordingly, making voice editing as intuitive and efficient as editing a document.

3 Billion Parameters, Improved Performance

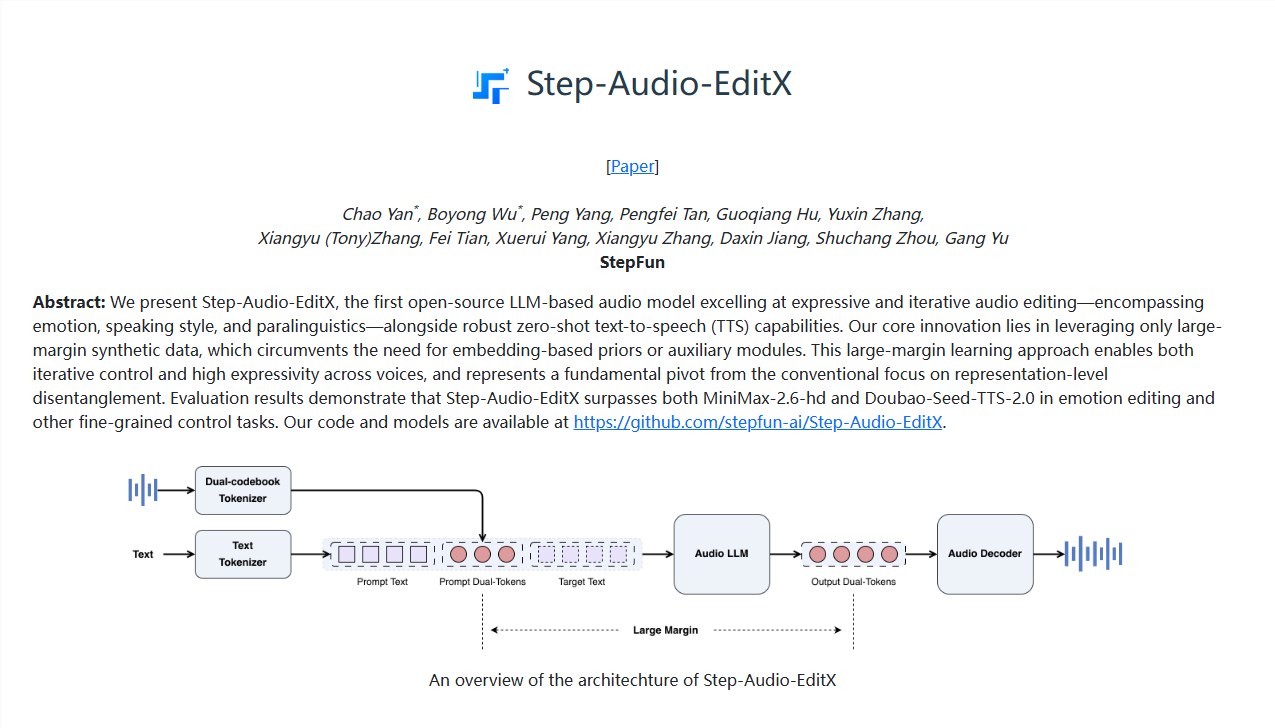

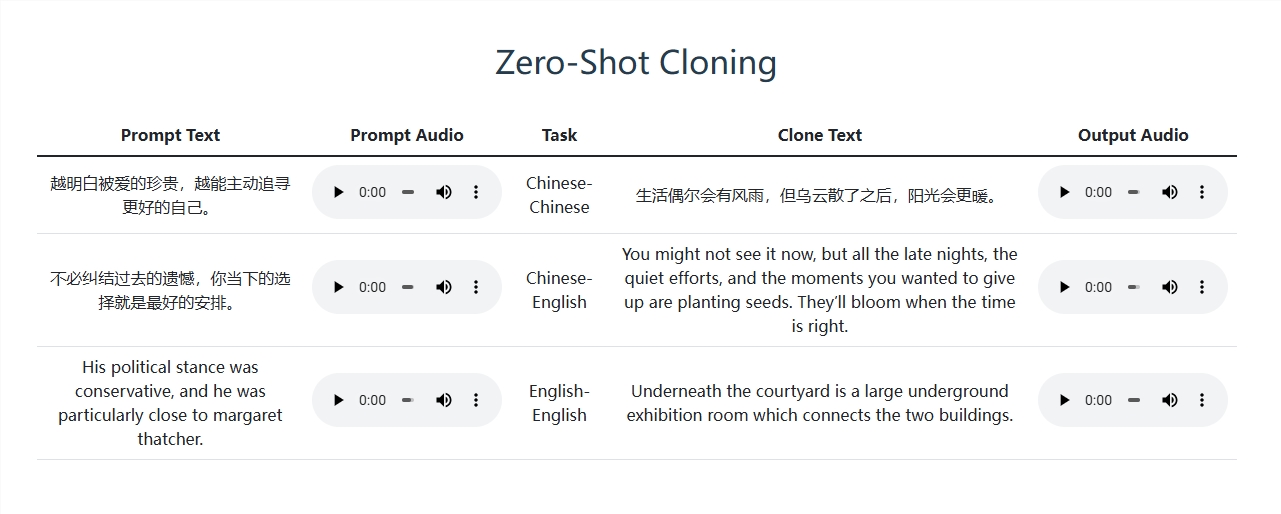

The core breakthrough of Step-Audio-EditX lies in its highly efficient model compression. The team distilled the original 13-billion-parameter model down to just 3 billion parameters, which not only significantly reduces deployment costs but also improves key indicators. The model supports zero-shot voice cloning: with a single reference audio clip and no training data from the target speaker, it can reproduce that speaker's voice with high fidelity. It also supports multi-round iterative editing, so users can keep issuing fine-grained instructions (such as "be a little gentler" or "extend the laughter by 0.3 seconds") and gradually approach the desired effect.
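To give a rough sense of what shrinking from 13 billion to 3 billion parameters means for deployment, the sketch below estimates the storage needed for the weights alone. The 2-bytes-per-parameter figure (16-bit weights) is an assumption made for illustration; the article does not state the precision the model ships in, and real memory use also includes activations and caches.

```python
def weight_memory_gb(params_billion: float, bytes_per_param: int = 2) -> float:
    """Approximate weight storage in GB (1 GB = 1e9 bytes).

    bytes_per_param=2 assumes 16-bit weights, which is an illustrative
    assumption and not a figure from the article.
    """
    return params_billion * 1e9 * bytes_per_param / 1e9


original_gb = weight_memory_gb(13)  # original 13B model
compact_gb = weight_memory_gb(3)    # compressed 3B model
reduction = 1 - 3 / 13              # fraction of parameters removed

print(f"13B weights: ~{original_gb:.0f} GB, 3B weights: ~{compact_gb:.0f} GB")
print(f"Parameter reduction: {reduction:.1%}")
```

Under that assumption, the weights drop from roughly 26 GB to roughly 6 GB, a reduction of about 77% in parameter count.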

Dialects and Emotions, Perfectly Mastered

The model's grasp of Chinese-language context is particularly impressive. It fluently supports Mandarin, English, Sichuan dialect, and Cantonese, and it renders the regional emotions and pragmatic habits of dialect speech naturally and realistically. In blind tests, evaluators unanimously judged that its "street feel" in Sichuan-Chongqing jokes and its "delicate handling of Cantonese particles" far exceeded those of similar products.

Challenging Closed-Source Commercial Models, Leading in Three Key Indicators

According to data obtained by AIbase, Step-Audio-EditX outperforms closed-source solutions such as Minimax and ByteDance Doubao in three core dimensions:

Naturalness score: 4.72/5 (Minimax 4.51, Doubao 4.38)

Emotion accuracy: 93.7% (6.2 percentage points ahead of the second-place model)

Voice consistency: 98.1%, almost lossless reproduction
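The margins implied by these figures can be worked out directly. The short sketch below uses only the numbers reported above; the variable names are illustrative, and the second-place emotion accuracy is derived from the stated 6.2-point lead, not reported separately.

```python
# Naturalness scores (out of 5) as reported in the article.
naturalness = {"Step-Audio-EditX": 4.72, "Minimax": 4.51, "Doubao": 4.38}

ours = naturalness["Step-Audio-EditX"]
# Lead over each closed-source model, rounded to two decimals.
margins = {
    name: round(ours - score, 2)
    for name, score in naturalness.items()
    if name != "Step-Audio-EditX"
}

# Emotion accuracy of the runner-up, implied by the 6.2-point lead.
second_place_emotion = round(93.7 - 6.2, 1)

print(margins)
print(f"Implied second-place emotion accuracy: {second_place_emotion}%")
```

On these numbers, the naturalness lead is 0.21 points over Minimax and 0.34 points over Doubao, and the runner-up's emotion accuracy works out to 87.5%.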

Explosive Application Scenarios: From Short Videos to Accessibility Services

This technology is giving rise to new content forms:

Short video bloggers can switch between "cheerful girl" and "sarcastic instructor" voices with one click;

Audiobook creators can voice emotional dialogues among multiple characters on their own;

Sichuan dialect comedy videos can be reworked by AI into American-style stand-up comedy for overseas audiences;

Speech synthesis systems for hearing-impaired users now carry "emotional warmth" and no longer sound cold and mechanical.

AIbase believes that the significance of Step-Audio-EditX goes beyond a tool upgrade - it is reshaping the logic of audio content production. When voice is no longer a linear medium that is fixed once recorded, but becomes a "living text" that can be refined repeatedly, millions of creators will gain unprecedented expressive freedom. If StepFun next opens an API or integrates the model into mobile systems, this "AI magic scissors" may truly land in everyone's pocket, allowing every voice to be reimagined.

Product Entry: https://stepaudiollm.github.io/step-audio-editx/