Large models have thoroughly reshaped text and image generation, yet speech remains the hardest medium to edit as intuitively as writing text. StepFun AI's newly released open-source project, Step-Audio-EditX, aims to change that. Built on a 3-billion-parameter audio language model (Audio LLM), it is the first system to turn speech editing into controllable operations on discrete tokens, much like editing text, rather than treating it as a traditional waveform signal-processing task.

According to the team's paper (arXiv:2511.03601), Step-Audio-EditX aims to let developers "directly edit the emotion, tone, style, and even breathing sounds of speech, just like editing a sentence of text."

From "Imitating Voices" to "Precise Control"

Most current zero-shot TTS systems can only copy the emotion, accent, and timbre of a short reference clip: the output sounds natural but offers little control. Style prompts written into the text are often ignored, and results are especially unstable in cross-lingual and cross-style tasks.

Step-Audio-EditX takes a different approach. Instead of relying on complex disentangled encoder structures, it achieves controllability by changing the data structure and the training objective. The model learns from a large number of speech pairs and triplets that share identical text but differ markedly in one attribute, and in this way learns to adjust emotion, style, and paralinguistic signals without changing the text.

Dual-Codebook Tokenizer and 3B Audio LLM Architecture

Step-Audio-EditX reuses the dual-codebook tokenizer from Step-Audio:

Linguistic stream: token rate of 16.7 Hz, codebook of 1,024 tokens;

Semantic stream: token rate of 25 Hz, codebook of 4,096 tokens.

The two streams are interleaved in a 2:3 ratio, matching their 16.7 Hz and 25 Hz rates, which preserves the prosodic and emotional features of the speech.
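The interleaving can be illustrated with a small Python sketch. It assumes the 2:3 ratio means a repeating pattern of two linguistic tokens followed by three semantic tokens (at 16.7 Hz and 25 Hz, each group spans about 120 ms), and it assumes semantic IDs are shifted by 1,024 so both streams share one vocabulary. The function names `interleave_2_3` and `deinterleave` are hypothetical, not part of the released code.

```python
from typing import List, Tuple

# Codebook sizes from the text; the ID offset scheme below is an assumption.
LINGUISTIC_VOCAB = 1024
SEMANTIC_VOCAB = 4096

def interleave_2_3(linguistic: List[int], semantic: List[int]) -> List[int]:
    """Emit 2 linguistic tokens, then 3 semantic tokens, and repeat."""
    out: List[int] = []
    li, si = 0, 0
    while li < len(linguistic) or si < len(semantic):
        # 2 tokens at 16.7 Hz cover roughly the same time as 3 tokens at 25 Hz
        out.extend(linguistic[li:li + 2])
        li += 2
        # Shift semantic IDs past the linguistic range to avoid collisions
        out.extend(LINGUISTIC_VOCAB + t for t in semantic[si:si + 3])
        si += 3
    return out

def deinterleave(tokens: List[int]) -> Tuple[List[int], List[int]]:
    """Split a merged sequence back into the two streams by ID range."""
    linguistic = [t for t in tokens if t < LINGUISTIC_VOCAB]
    semantic = [t - LINGUISTIC_VOCAB for t in tokens if t >= LINGUISTIC_VOCAB]
    return linguistic, semantic
```

For example, `interleave_2_3([1, 2, 3, 4], [10, 11, 12, 13, 14, 15])` returns `[1, 2, 1034, 1035, 1036, 3, 4, 1037, 1038, 1039]`, and `deinterleave` recovers the original two lists.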

On this foundation, the research team built a compact 3-billion-parameter audio LLM. It is initialized from a text LLM and trained on a mixed corpus with a 1:1 ratio of text tokens to audio tokens. It accepts either text or audio tokens as input and always outputs dual-codebook token sequences.
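One simple way to hold a mixed corpus at a 1:1 text-to-audio token ratio is a greedy sampler that always draws the next sequence from whichever stream has contributed fewer tokens so far. The sketch below assumes that strategy and a `(kind, tokens)` record format; both are illustrative, not the team's documented data pipeline.

```python
from typing import Iterator, List, Tuple

def mix_one_to_one(text_seqs: List[List[int]],
                   audio_seqs: List[List[int]]) -> Iterator[Tuple[str, List[int]]]:
    """Yield ("text", seq) or ("audio", seq), drawing from whichever stream is behind."""
    ti = ai = 0
    text_tokens = audio_tokens = 0
    while ti < len(text_seqs) or ai < len(audio_seqs):
        text_left = ti < len(text_seqs)
        audio_left = ai < len(audio_seqs)
        # Take text when it has contributed no more tokens than audio, or audio is exhausted
        if text_left and (not audio_left or text_tokens <= audio_tokens):
            seq = text_seqs[ti]
            ti += 1
            text_tokens += len(seq)
            yield "text", seq
        else:
            seq = audio_seqs[ai]
            ai += 1
            audio_tokens += len(seq)
            yield "audio", seq
```

Once one stream runs out, the other simply drains, so the ratio is only approximate at the end of a pass over the data.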

Audio reconstruction is handled by a separate decoder: a diffusion-transformer flow-matching module predicts the mel spectrogram, and a BigVGANv2 vocoder converts the mel spectrogram into a waveform. The flow-matching module was trained on 200,000 hours of high-quality speech, which substantially improves the naturalness of timbre and prosody.
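Flow matching generates a sample by integrating a velocity field from noise at t = 0 to data at t = 1. The following NumPy sketch shows only that integration loop, using Euler steps along the straight-line path x_t = (1 - t) x0 + t x1. A hypothetical closed-form velocity toward a known target stands in for the trained diffusion transformer, and the 80-bin mel frame is an assumed shape. In the real decoder the velocity network is learned and conditioned on the audio tokens, and the vocoder then turns the predicted mel spectrogram into a waveform.

```python
import numpy as np

def toy_velocity(x: np.ndarray, t: float, target: np.ndarray) -> np.ndarray:
    """Stand-in for a learned velocity network (hypothetical).

    On the straight-line path, the velocity that carries x to `target`
    by t = 1 is (target - x) / (1 - t).
    """
    return (target - x) / (1.0 - t)

def euler_flow_sample(target: np.ndarray, steps: int = 10, seed: int = 0) -> np.ndarray:
    rng = np.random.default_rng(seed)
    x = rng.standard_normal(target.shape)  # start from Gaussian noise at t = 0
    dt = 1.0 / steps
    for i in range(steps):
        t = i * dt  # t < 1 on every step, so the division above is safe
        x = x + dt * toy_velocity(x, t, target)
    return x
```

With this toy velocity the final Euler step lands on the target, for example `euler_flow_sample(np.linspace(-1.0, 1.0, 80))` returns values matching the target frame up to floating-point error. A learned network only approximates the velocity, which is why the number of steps matters in practice.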

Large Margin Learning and Synthetic Data Strategy

A key innovation of Step-Audio-EditX is "large-margin learning." The model trains on triplet and quadruplet samples and learns to convert between markedly different values of a speech attribute while the text stays unchanged.

The team used a dataset of 60,000 speakers covering Chinese, English, Cantonese, and the Sichuan dialect, and built synthetic triplets to strengthen emotion and style control. For each sample, professional voice actors recorded 10-second clips, StepTTS generated neutral and emotional versions, and high-quality samples were then selected through both human and model evaluation.
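The selection step can be pictured as a margin filter: each candidate keeps the text fixed and carries an attribute score for both clips, and only samples whose scores differ by a wide enough margin survive. In this sketch the `EditSample` fields, the 0-10 score scale, and the default margin of 6.0 are all assumptions made for illustration.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class EditSample:
    text: str            # transcript shared by both clips
    source_audio: str    # e.g. path to the neutral clip
    target_audio: str    # clip with the target emotion or style
    source_score: float  # attribute intensity of the source clip (assumed 0-10 scale)
    target_score: float  # attribute intensity of the target clip

def select_large_margin(samples: List[EditSample], margin: float = 6.0) -> List[EditSample]:
    """Keep only samples whose attribute scores differ by at least `margin`."""
    return [s for s in samples if s.target_score - s.source_score >= margin]
```

Discarding small-gap pairs is the point of the "large margin": the model only ever sees examples where the requested change is unmistakable.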

Paralinguistic editing (laughter, breathing, filled pauses, and similar cues) builds on the NVSpeech dataset: cloning and annotation provide time-aligned supervision, so no additional margin model is needed.

SFT + PPO: Letting the Model Understand Instructions

The training is divided into two stages:

Supervised Fine-Tuning (SFT): The model simultaneously learns TTS and editing tasks within a unified chat format;

Reinforcement Learning (PPO): Optimizing responses to natural language instructions through a reward model.
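To make the "unified chat format" concrete, here is a hypothetical sketch of how TTS and editing samples might share one message layout, with the assistant turn always holding audio tokens. The role names, system prompts, and `<audio_N>` serialization are assumptions, not the project's actual prompt template.

```python
from typing import Dict, List, Optional

Message = Dict[str, str]

def render_audio(tokens: List[int]) -> str:
    """Serialize audio tokens as placeholder strings (assumed format)."""
    return "".join(f"<audio_{t}>" for t in tokens)

def build_sft_example(text: str, target: List[int],
                      instruction: Optional[str] = None,
                      source: Optional[List[int]] = None) -> List[Message]:
    """Build a TTS sample when `source` is None, otherwise an editing sample."""
    if source is None:
        system = "Synthesize speech for the given text."
        user = text
    else:
        if instruction is None:
            raise ValueError("editing samples need an instruction")
        system = "Edit the audio as instructed; keep the text unchanged."
        user = f"{instruction}\nText: {text}\nAudio: {render_audio(source)}"
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": user},
        # Both tasks share the same kind of target: an audio-token sequence
        {"role": "assistant", "content": render_audio(target)},
    ]
```

Because both tasks end in the same kind of assistant turn, a single model and a single loss can cover synthesis and editing.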

The reward model is initialized from the SFT checkpoint and trained on large-margin preference pairs with a Bradley-Terry loss. It computes rewards directly on token sequences, without decoding them into waveforms. PPO then adds a KL penalty term to balance audio quality against deviation from the SFT policy.
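Both objectives can be written down in a few lines. The Bradley-Terry loss below is the standard pairwise form, -log sigmoid(r_chosen - r_rejected), and the PPO reward is sketched in the common RLHF form: reward-model score minus beta times a sample estimate of the KL divergence from the SFT reference policy, summed over the generated tokens. The value beta = 0.05 and the sequence-level summation are assumptions; the paper's exact reward shaping may differ.

```python
import math
from typing import Sequence

def bradley_terry_loss(r_chosen: float, r_rejected: float) -> float:
    """Pairwise reward-model loss: -log(sigmoid(r_chosen - r_rejected))."""
    d = r_chosen - r_rejected
    # Numerically stable evaluation of log(1 + exp(-d))
    if d >= 0:
        return math.log1p(math.exp(-d))
    return -d + math.log1p(math.exp(d))

def kl_penalized_reward(rm_score: float,
                        policy_logprobs: Sequence[float],
                        ref_logprobs: Sequence[float],
                        beta: float = 0.05) -> float:
    """Reward-model score minus beta times a per-sample KL estimate against the SFT policy."""
    kl_estimate = sum(p - r for p, r in zip(policy_logprobs, ref_logprobs))
    return rm_score - beta * kl_estimate
```

The loss is log 2 when the two rewards tie and shrinks as the preferred sample pulls ahead; the KL term penalizes the policy for drifting too far from the SFT model while it chases a higher reward.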

Step-Audio-Edit-Test: An AI-Judged Benchmark

To quantify controllability, the team introduced the Step-Audio-Edit-Test benchmark, which uses Gemini 2.5 Pro as the judge model and evaluates three dimensions: emotion, style, and paralinguistic features.

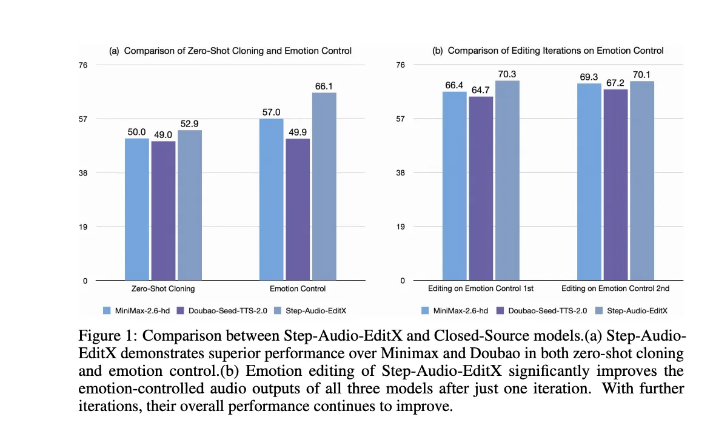

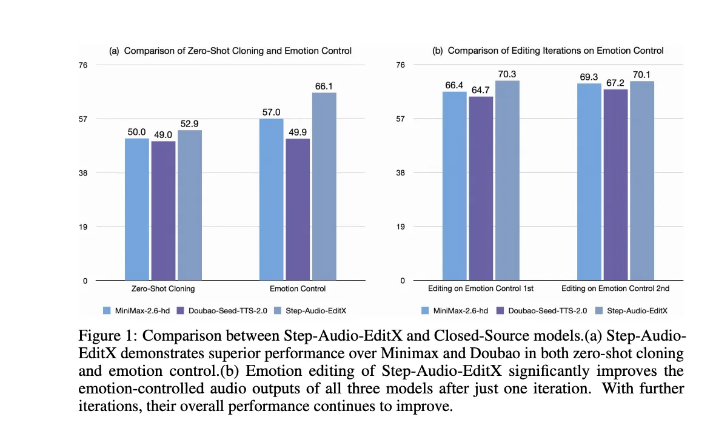

The results show:

Chinese emotion accuracy increased from 57.0% to 77.7%;

Style accuracy increased from 41.6% to 69.2%;

English results followed a similar trend.

The average score for paralinguistic editing also rose from 1.91 to 2.89, approaching the level of mainstream commercial systems. Notably, Step-Audio-EditX also significantly improves the output of closed-source systems such as GPT-4o mini TTS, ElevenLabs v2, and Doubao Seed TTS 2.0 when used to edit their audio.

Step-Audio-EditX marks a major step forward in controllable speech synthesis. Instead of operating on waveform-level signals, it works with discrete tokens and combines large-margin learning with reinforcement-learning optimization, bringing speech editing close to the fluidity of text editing for the first time.

StepFun AI has open-sourced the full stack, including model weights and training code, which substantially lowers the barrier to speech editing research. Developers can now control the emotion, tone, and paralinguistic features of speech much as they would edit text.