OpenAI has announced Aardvark, an intelligent security research assistant powered by GPT-5 and designed to improve software security. With tens of thousands of new vulnerabilities emerging each year, developers and security teams struggle to find and fix them all. Aardvark is intended to help those teams detect and remediate security vulnerabilities more efficiently.

Aardvark continuously analyzes source code repositories to identify vulnerabilities, assess their exploitability, prioritize them, and suggest fixes. Unlike traditional program analysis methods, Aardvark relies on the reasoning and tool-use capabilities of large language models (LLMs): it reads code, analyzes it, and writes and runs tests much as a human security researcher would.

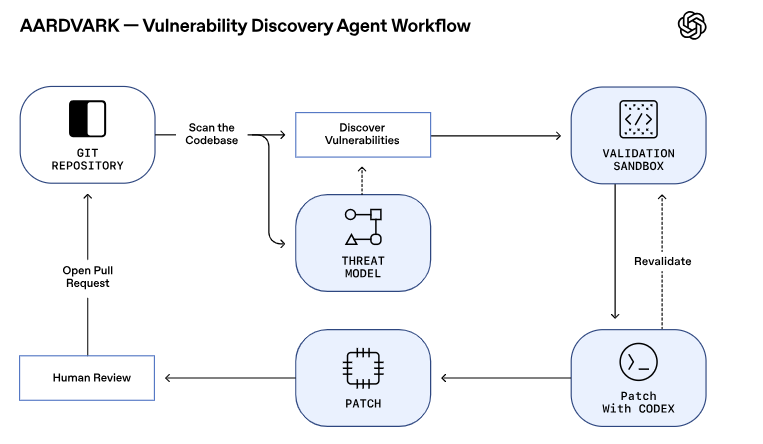

Aardvark's workflow has several stages. First, it analyzes the entire codebase to build a threat model that reflects the project's security goals and design. Then, whenever new code is committed, Aardvark checks the changes against the repository and the threat model for potential vulnerabilities; when it is first connected to a repository, it also scans the commit history to identify existing issues. Next, Aardvark attempts to trigger each potential vulnerability in an isolated sandbox environment to confirm that it is exploitable, and documents the steps it took. Finally, it integrates with OpenAI Codex to generate patches for the vulnerabilities it finds, which developers can review manually and apply with one click.
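The stages described above can be pictured as a simple pipeline. The following is only a minimal sketch under stated assumptions: the announcement does not describe Aardvark's internals or API, so every name here (`Finding`, `Pipeline`, and the toy stage functions) is hypothetical and exists purely to illustrate the order of the steps.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Finding:
    """Hypothetical record for one suspected vulnerability."""
    commit: str
    description: str
    exploitable: bool = False
    patch: str = ""


@dataclass
class Pipeline:
    """Hypothetical four-stage pipeline; each stage is a swappable callable."""
    build_threat_model: Callable[[str], str]
    scan_commit: Callable[[str, str], List[Finding]]
    validate: Callable[[Finding], bool]
    propose_patch: Callable[[Finding], str]

    def run(self, repo: str, commits: List[str]) -> List[Finding]:
        # Stage 1: build a threat model for the whole repository.
        threat_model = self.build_threat_model(repo)
        confirmed = []
        for commit in commits:
            # Stage 2: check each commit against the threat model.
            for finding in self.scan_commit(commit, threat_model):
                # Stage 3: keep only findings confirmed as exploitable.
                finding.exploitable = self.validate(finding)
                if finding.exploitable:
                    # Stage 4: attach a proposed patch for human review.
                    finding.patch = self.propose_patch(finding)
                    confirmed.append(finding)
        return confirmed


# Toy stand-ins for the real analysis steps (assumptions, not real behavior).
def toy_threat_model(repo: str) -> str:
    return f"threat model for {repo}"


def toy_scan(commit: str, threat_model: str) -> List[Finding]:
    if "query" in commit:
        return [Finding(commit, "possible SQL injection")]
    return []


def toy_validate(finding: Finding) -> bool:
    return True  # a real system would try to trigger the bug in a sandbox


def toy_patch(finding: Finding) -> str:
    return f"patch for {finding.commit}"


pipeline = Pipeline(toy_threat_model, toy_scan, toy_validate, toy_patch)
results = pipeline.run("example-repo", ["add query builder", "update docs"])
for item in results:
    print(item.commit, "|", item.description, "|", item.patch)
```

Running the same `run` method over a repository's full commit list would correspond to the initial history scan, while calling it with a single new commit would correspond to the ongoing checks.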

In recent months, Aardvark has been running on OpenAI's internal code repositories and on projects from some external partners, where it identified several critical vulnerabilities and strengthened OpenAI's security defenses. In benchmark tests on "golden" repositories, it identified 92% of known and synthetically introduced vulnerabilities, a result that points to high recall and practical usefulness.
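A detection rate like the 92% figure is a recall measurement: the share of the known vulnerabilities that the tool found. The sketch below shows the arithmetic only; the counts and identifiers are invented for illustration and are not OpenAI's benchmark data.

```python
def recall(detected: set, ground_truth: set) -> float:
    """Fraction of ground-truth vulnerabilities that were detected."""
    if not ground_truth:
        raise ValueError("ground_truth must not be empty")
    return len(detected & ground_truth) / len(ground_truth)


# Hypothetical benchmark: 25 seeded vulnerabilities, 23 of them found,
# plus one report that is not in the ground truth (a false positive).
ground_truth = {f"VULN-{i}" for i in range(25)}
detected = {f"VULN-{i}" for i in range(23)} | {"VULN-unrelated"}

score = recall(detected, ground_truth)
print(f"recall = {score:.0%}")
```

Recall says nothing about false positives, which is one reason a separate validation step for exploitability matters.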

Aardvark has also been applied to open-source projects, where it identified multiple vulnerabilities that were then responsibly disclosed. OpenAI plans to offer free scanning to selected non-commercial open-source projects, with the aim of improving the security of the open-source software ecosystem.

As software becomes the foundation of every industry, the risks posed by software vulnerabilities are increasingly evident. The launch of Aardvark marks a new defender-first model that continuously protects systems as their code evolves. OpenAI has opened a private beta for Aardvark and is inviting interested partners to take part and further validate its performance.

Official blog: https://openai.com/index/introducing-aardvark/

Key points:

🌟 Aardvark is an intelligent security research assistant launched by OpenAI that helps developers identify and fix software vulnerabilities.

🔍 It achieves efficient vulnerability detection by analyzing code repositories, building threat models, and verifying the exploitability of vulnerabilities.

🤝 OpenAI plans to provide free scanning services for non-commercial open-source projects, enhancing the security of the entire open-source ecosystem.