Recently, the Shanghai Artificial Intelligence Laboratory and other institutions jointly launched IWR-Bench, described as the first benchmark designed specifically to evaluate how well large vision-language models (LVLMs) convert videos into interactive web code. The benchmark aims to assess the dynamic web reconstruction capabilities of LVLMs more realistically, filling a gap in the evaluation of dynamic interaction in AI-assisted front-end development.

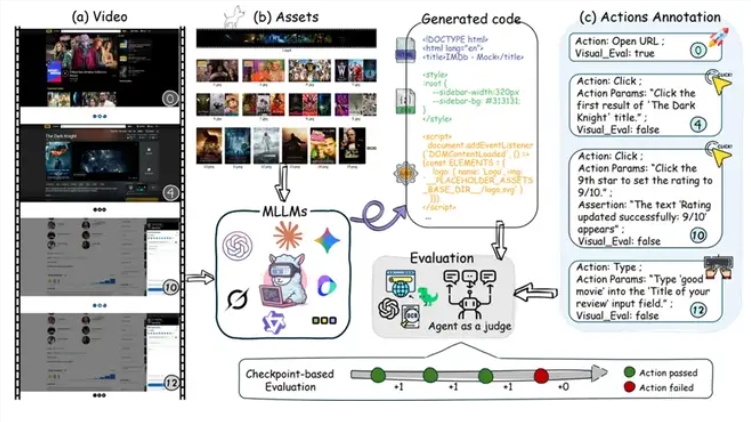

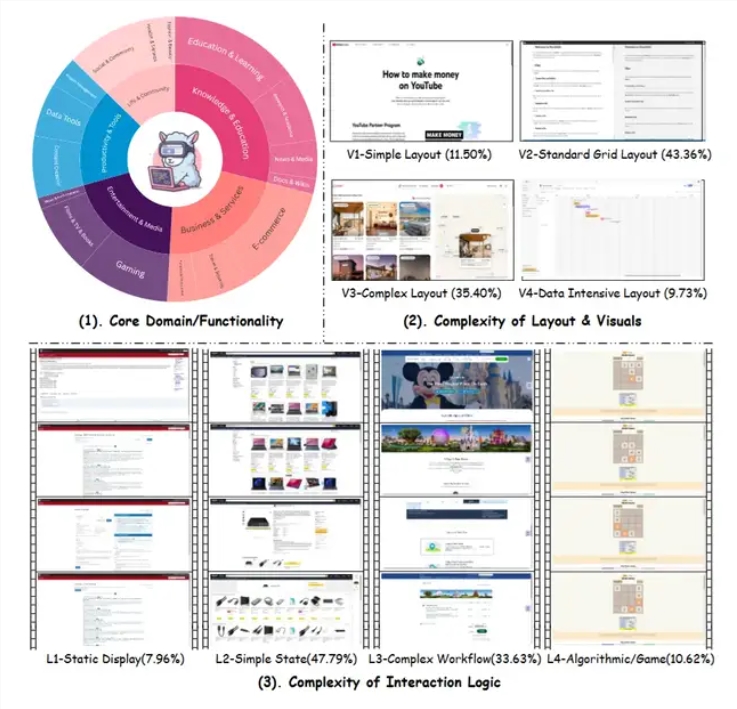

Unlike traditional image-to-code tasks, IWR-Bench requires a model to watch a video that records a complete user interaction and, given all the static resources the webpage needs, reconstruct the page's dynamic interactive behavior. Task complexity ranges from simple web browsing to reconstructing complex game rules, with application scenarios such as the 2048 game and flight booking.

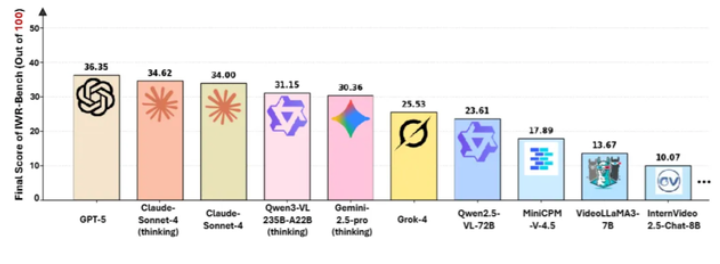

The test results show significant limitations in current AI models on this task. Of the 28 mainstream models evaluated, the best performer, GPT-5, achieved a comprehensive score of only 36.35, with an interaction function correctness (IFS) score of 24.39% and a visual fidelity (VFS) score of 64.25%. These figures indicate that models are relatively strong at visual restoration but clearly fall short when implementing event-driven logic and dynamic interactive functions.
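The article does not say how the comprehensive score is derived from the two sub-scores. As a quick arithmetic check, the reported figures happen to be consistent with a weighted sum that gives 70% of the weight to IFS and 30% to VFS. The weights below are an assumption inferred from the numbers, not something stated in the source, and `comprehensive_score` is a hypothetical helper name.

```python
# Reported GPT-5 sub-scores from the article, in percent.
IFS = 24.39  # interaction function correctness
VFS = 64.25  # visual fidelity

# ASSUMPTION: these weights are inferred from the reported numbers;
# the article itself does not state how the scores are combined.
W_IFS, W_VFS = 0.7, 0.3


def comprehensive_score(ifs: float, vfs: float) -> float:
    """Weighted combination of the two sub-scores, rounded to two decimals."""
    return round(W_IFS * ifs + W_VFS * vfs, 2)


print(comprehensive_score(IFS, VFS))  # 36.35
```

Under this assumed weighting, a model gains far more from fixing interaction logic than from polishing appearance, which fits the article's emphasis on interactive correctness.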

In terms of evaluation method, IWR-Bench looks beyond visual restoration and also automatically checks the correctness of interactive functions through agent-based evaluation. Each task provides the complete set of static resources with all file names anonymized, so a model cannot infer a file's role from its name and must instead match resources to what appears in the video. This design is closer to real development scenarios: the model has to understand the causal relationships and state changes shown in the operation video and then turn them into executable code logic.
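To make the anonymized-resource constraint concrete, here is a minimal sketch of matching a region from a video frame to an asset by appearance alone. It uses a simple average hash over tiny grayscale thumbnails; this is an illustrative technique chosen for brevity, not the method used by the benchmark or by any evaluated model. The file names, pixel values, and function names are all hypothetical, and the sketch assumes every image has already been resized to the same dimensions.

```python
from typing import Dict, List

# Grayscale pixels (0-255); tiny same-sized thumbnails for illustration only.
Image = List[List[int]]


def average_hash(img: Image) -> List[int]:
    """One bit per pixel: 1 if the pixel is brighter than the image mean."""
    pixels = [p for row in img for p in row]
    mean = sum(pixels) / len(pixels)
    return [1 if p > mean else 0 for p in pixels]


def hamming(a: List[int], b: List[int]) -> int:
    """Number of positions where two equal-length hashes differ."""
    return sum(x != y for x, y in zip(a, b))


def match_asset(crop: Image, assets: Dict[str, Image]) -> str:
    """Return the anonymized file name whose hash is closest to the crop's."""
    target = average_hash(crop)
    return min(assets, key=lambda name: hamming(target, average_hash(assets[name])))


# Hypothetical anonymized assets: the names carry no semantic hint.
assets = {
    "a1f3.png": [[10, 10], [200, 200]],  # dark top, bright bottom
    "9c2e.png": [[200, 10], [200, 10]],  # bright left, dark right
}
crop = [[20, 15], [190, 210]]  # hypothetical region cut from a video frame

print(match_asset(crop, assets))  # a1f3.png
```

The crop differs slightly from the stored asset in raw pixel values, yet its hash is identical to that of `a1f3.png`, which is why appearance-based matching still works when names are stripped.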

The researchers also observed some interesting patterns. Model versions with "thinking" mechanisms performed better on certain tasks, but the improvement was limited, suggesting that the capability of the base model remains decisive. Furthermore, models optimized specifically for video understanding did not perform as well as general multimodal models, which points to a fundamental difference between the video-to-webpage task and traditional video understanding: the task requires not only understanding the video content but also abstracting the observed dynamic behavior into program logic.

Technically, the video-to-webpage task is difficult in several respects. The first is temporal understanding: the model must extract key interaction events and state transitions from continuous video frames. The second is logical abstraction: observed behavioral patterns have to be translated into programming concepts such as event listeners and state management. The third is resource matching: the model must accurately identify the corresponding images, styles, and other files among the anonymized static resources. The fourth is code generation: the output must be HTML, CSS, and JavaScript that is structurally sound and logically correct.
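As an illustration of the logical abstraction step, consider what a model would have to infer from a video of the 2048 game: pressing the left arrow slides tiles left and merges each pair of equal neighbours once. The sketch below expresses that rule as a state update plus a minimal event registry. It is written in Python for brevity, whereas a real reconstruction would use JavaScript and DOM event listeners; the `Board` class and its methods are hypothetical, and spawning a new tile after each move is omitted.

```python
from typing import Callable, Dict, List


def merge_row_left(row: List[int]) -> List[int]:
    """Slide tiles left and merge each pair of equal neighbours once."""
    tiles = [t for t in row if t != 0]
    merged: List[int] = []
    i = 0
    while i < len(tiles):
        if i + 1 < len(tiles) and tiles[i] == tiles[i + 1]:
            merged.append(tiles[i] * 2)  # merge the pair into one tile
            i += 2
        else:
            merged.append(tiles[i])
            i += 1
    # Pad with empty cells so the row keeps its original width.
    return merged + [0] * (len(row) - len(merged))


class Board:
    """Hypothetical state container with a tiny event-listener registry."""

    def __init__(self, rows: List[List[int]]) -> None:
        self.rows = [list(r) for r in rows]
        self._listeners: Dict[str, Callable[[], None]] = {}

    def on(self, event: str, handler: Callable[[], None]) -> None:
        self._listeners[event] = handler

    def dispatch(self, event: str) -> None:
        handler = self._listeners.get(event)
        if handler is not None:
            handler()

    def move_left(self) -> None:
        self.rows = [merge_row_left(r) for r in self.rows]


board = Board([[2, 2, 4, 0], [0, 4, 4, 4]])
board.on("ArrowLeft", board.move_left)
board.dispatch("ArrowLeft")
print(board.rows)  # [[4, 4, 0, 0], [8, 4, 0, 0]]
```

Note that `[4, 4, 4]` becomes `[8, 4]` rather than `[4, 8]` or `[16]`: details like merge order and the one-merge-per-move rule are exactly the kind of behavior that is visible in a video but easy to get wrong in generated code.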

GPT-5's comprehensive score of only 36.35 indicates that even the most advanced multimodal models still have substantial room for improvement in converting dynamic behavior into executable code. An interaction function correctness of 24.39% suggests that roughly three-quarters of the interactive functions in the generated webpages are faulty. The problems may include incorrect event responses, state management errors, and missing business logic.

The launch of IWR-Bench matters for both AI research and applications. For research, it adds a new evaluation dimension covering the dynamic understanding and code generation capabilities of multimodal models, helping to pinpoint current technical weaknesses. For applications, a mature video-to-webpage capability could significantly lower the barrier to front-end development, allowing non-technical staff to generate functional prototypes simply by demonstrating the intended operations.

However, even a model that scores highly on this benchmark would still be far from ready for practical use. Real web development involves performance optimization, compatibility handling, security, and maintainability, none of which can be fully conveyed through a video demonstration. Complex business logic, edge case handling, and user experience details are likewise difficult to infer from operation videos alone.

In terms of industry trends, IWR-Bench reflects a shift in AI code generation from static to dynamic, from single frames to multiple frames, and from descriptions to demonstrations. This contrasts with current AI coding assistants, which rely mainly on text descriptions, and it lays technical groundwork for intelligent development tools that offer a "what you see is what you get" experience. If future models achieve breakthroughs on this task, a new generation of prototyping tools may emerge that lets product managers or designers generate interactive web prototypes by recording operation videos.

The test results make clear that current AI models are still at an early stage in understanding complex dynamic interactions. A visual fidelity of 64.25% is relatively high, indicating that models restore the static appearance of a page reasonably well. An interaction function correctness of only 24.39%, however, shows that turning observed behavior into correct program logic remains a major challenge. The difference between the two scores reflects the distance between "understanding" and "doing it right": being able to recognize visual elements does not mean understanding the interaction logic behind them.

The significance of IWR-Bench goes beyond providing an evaluation tool; it also points to an important direction for the development of multimodal AI capabilities. As the benchmark gains adoption, more research is expected to focus on integrating dynamic behavior understanding, temporal reasoning, and code generation, increasing the practical value of multimodal large models in real development scenarios.