Alibaba's Natural Language Processing team has released WebWatcher, an open-source multimodal deep research agent designed to overcome the limitations that both closed-source systems and existing open-source agents face in multimodal deep research. WebWatcher integrates tools including web browsing, image search, a code interpreter, and built-in OCR, which lets it work through complex multimodal tasks much as a human researcher would. It demonstrates strong visual understanding, logical reasoning, knowledge retrieval, tool scheduling, and self-verification.

According to the WebWatcher team, closed-source systems such as OpenAI's DeepResearch perform well on text-based deep research but are mostly confined to text-only environments and struggle with the complex images, charts, and mixed content found in the real world. Existing open-source agents fall into two camps, each with its own bottleneck: text retrieval agents can integrate information but cannot process images, while visual agents can recognize images but lack cross-modal reasoning and multi-tool collaboration. WebWatcher was designed specifically to address these bottlenecks.

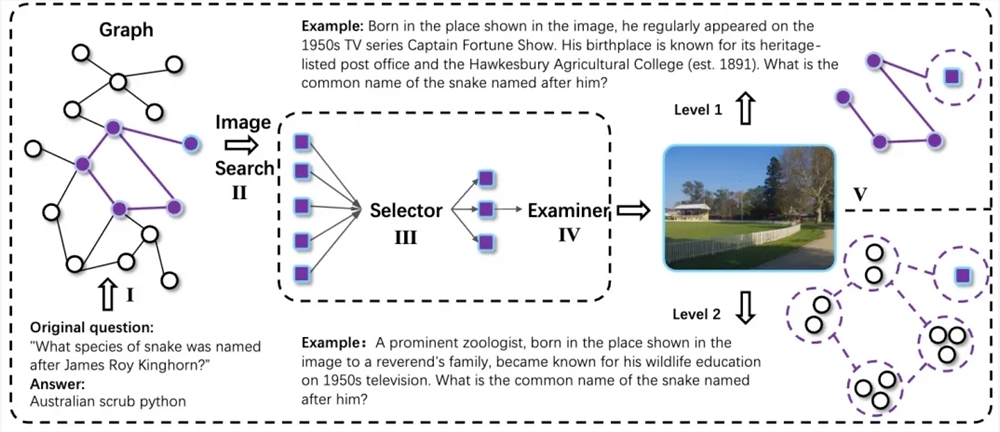

WebWatcher's technical approach covers the entire workflow, from data construction to training optimization. Its core goal is to give multimodal agents flexible reasoning and multi-tool collaboration capabilities on difficult multimodal deep research tasks. To that end, the research team designed an automated multimodal data generation pipeline that collects cross-modal knowledge chains through random walks and applies information blurring to increase the uncertainty and complexity of the tasks. A QA-to-VQA conversion module then expands every complex question sample into a multimodal version, further strengthening the model's cross-modal understanding.
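The data pipeline described above can be illustrated with a toy sketch. This is not the team's implementation: the graph, the `DESCRIPTIONS` table, and the functions `random_walk`, `blur`, `chain_to_qa`, and `qa_to_vqa` are all hypothetical names chosen for illustration, and "blurring" is assumed here to mean replacing an entity name with a vaguer description.

```python
import random

# Toy knowledge graph (hypothetical data): entity -> list of (relation, neighbor).
GRAPH = {
    "Eiffel Tower": [("located_in", "Paris"), ("designed_by", "Gustave Eiffel")],
    "Paris": [("capital_of", "France")],
    "Gustave Eiffel": [("born_in", "Dijon")],
    "France": [],
    "Dijon": [],
}

# Assumed form of "information blurring": swap a name for a vaguer description.
DESCRIPTIONS = {"Eiffel Tower": "a wrought-iron landmark completed in 1889"}


def random_walk(graph, start, max_hops, rng):
    """Collect a chain of (head, relation, tail) triples by following random edges."""
    chain, node = [], start
    for _ in range(max_hops):
        edges = graph.get(node, [])
        if not edges:
            break  # dead end: stop the walk early
        relation, neighbor = rng.choice(edges)
        chain.append((node, relation, neighbor))
        node = neighbor
    return chain


def blur(entity, descriptions):
    """Return the vague description for an entity, or the entity itself if none exists."""
    return descriptions.get(entity, entity)


def chain_to_qa(chain, descriptions):
    """Turn a knowledge chain into a multi-hop text question with a blurred start entity."""
    start = blur(chain[0][0], descriptions)
    relations = " -> ".join(rel for _, rel, _ in chain)
    question = f"Starting from {start}, follow {relations}. What do you reach?"
    return {"question": question, "answer": chain[-1][2]}


def qa_to_vqa(qa, entity, image_path, descriptions):
    """Replace the textual mention of the start entity with a reference to an image."""
    mention = blur(entity, descriptions)
    question = qa["question"].replace(mention, "the entity shown in the image")
    return {"image": image_path, "question": question, "answer": qa["answer"]}
```

Calling `random_walk(GRAPH, "Eiffel Tower", 2, random.Random(0))` yields a two-hop chain, which `chain_to_qa` and `qa_to_vqa` turn into a question whose starting entity has to be identified from the image rather than read from the text.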

To build high-quality reasoning trajectories for post-training, WebWatcher uses an Action-Observation-driven trajectory generation method. The team collects real-world multi-tool interaction trajectories and performs supervised fine-tuning (SFT) on them, so that the model quickly picks up the basic patterns of multimodal ReAct-style reasoning and tool calling early in training. The model then enters a reinforcement learning phase, in which GRPO (Group Relative Policy Optimization) further improves the agent's decision-making in complex environments.
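The core idea of GRPO is that each sampled trajectory is scored relative to the other trajectories sampled for the same question, so no separate value model is needed. The sketch below shows only that group-relative advantage step. The article does not describe WebWatcher's reward design, so the rewards here are hypothetical (1.0 for a correct final answer, 0.0 otherwise), and the function name `group_relative_advantages` and the use of the population standard deviation are assumptions.

```python
from statistics import mean, pstdev


def group_relative_advantages(rewards, eps=1e-8):
    """Normalize each rollout's reward against the mean and std of its own group.

    rewards: rewards for several trajectories sampled for the same question.
    eps: small constant that avoids division by zero when all rewards are equal.
    """
    mu = mean(rewards)
    sigma = pstdev(rewards)  # assumption: population std; implementations differ
    return [(r - mu) / (sigma + eps) for r in rewards]


# Hypothetical group of four rollouts for one question: two correct, two wrong.
advantages = group_relative_advantages([1.0, 0.0, 0.0, 1.0])
```

Trajectories that beat their group's average receive a positive advantage and are reinforced, and those below average are discouraged. When every rollout in a group earns the same reward, all advantages are zero and that group contributes no learning signal.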

To evaluate WebWatcher comprehensively, the research team proposed BrowseComp-VL, an extended version of BrowseComp for vision-language tasks that aims to approach the difficulty of cross-modal research tasks performed by human experts. Across multiple rounds of evaluation, WebWatcher significantly outperformed current mainstream open-source and closed-source multimodal large models on complex reasoning, information retrieval, knowledge integration, and information optimization tasks.

Specifically, on Humanity’s Last Exam (HLE-VL), a benchmark of multi-step complex reasoning, WebWatcher achieved a Pass@1 score of 13.6%, well ahead of representative models such as GPT-4o (9.8%), Gemini 2.5-flash (9.2%), and Qwen2.5-VL-72B (8.6%). On MMSearch, which more closely reflects real-world multimodal search, WebWatcher scored 55.3% Pass@1, compared with 43.9% for Gemini 2.5-flash and 24.1% for GPT-4o. On LiveVQA, WebWatcher achieved a Pass@1 score of 58.7%, ahead of other mainstream models. On BrowseComp-VL, the most comprehensive and challenging of the benchmarks, WebWatcher achieved an average Pass@1 score of 27.0%, more than double that of other mainstream models.
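For readers unfamiliar with the metric, Pass@1 is the share of questions answered correctly when the model gets a single attempt per question. A minimal sketch follows. The function name `pass_at_1` and the sample outcomes are hypothetical, and the article does not say how each benchmark judges an answer as correct.

```python
def pass_at_1(results):
    """Fraction of questions answered correctly on a single attempt.

    results: one boolean per question, True if the single attempt was judged correct.
    """
    if not results:
        raise ValueError("results must contain at least one question")
    return sum(results) / len(results)


# Hypothetical outcomes for four questions: two correct, two incorrect.
score = pass_at_1([True, False, False, True])
```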

Repository: https://github.com/Alibaba-NLP/WebAgent