In large language model (LLM) research, how to segment text has long been a central question. Traditional tokenization schemes such as Byte Pair Encoding (BPE) split text into units drawn from a fixed, static vocabulary before the model ever sees it. Although widely used, this approach has limitations: once the tokenization is fixed, the model cannot adapt how it groups text, and performance tends to suffer on low-resource languages and unusual character structures.

To address these issues, a research team at Meta introduced an architecture called AU-Net. AU-Net replaces the usual tokenize-then-model pipeline with an autoregressive U-Net that learns directly from raw bytes, flexibly pooling bytes into words and then into groups of up to four words to build multi-level sequence representations.
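To make "learning from raw bytes" concrete, here is a minimal sketch of what a byte-level input looks like. The helper name `to_byte_ids` is hypothetical and is not taken from the AU-Net code; it only shows that any UTF-8 string maps to IDs in the range 0 to 255, so no learned vocabulary is needed.

```python
def to_byte_ids(text: str) -> list[int]:
    """Encode text as UTF-8 and return one integer ID (0-255) per byte."""
    return list(text.encode("utf-8"))

# "na\u00efve \u4f60\u597d" is the string 'naive' (with a diaeresis on the i)
# followed by a space and two Chinese characters.
text = "na\u00efve \u4f60\u597d"
ids = to_byte_ids(text)

# Every script shares the same 256-entry "vocabulary"; non-ASCII characters
# simply occupy more than one position in the sequence.
print(len(text), "characters ->", len(ids), "byte IDs")
```

The trade-off is sequence length: byte sequences are longer than token sequences, which is why the model needs a way to compress them internally.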

The design of AU-Net is inspired by the U-Net architecture, originally developed for medical image segmentation, and consists of a contraction path and an expansion path. The contraction path compresses the input byte sequence, merging it into higher-level semantic units to extract the macro semantic information of the text. The expansion path then gradually restores this high-level information to the original sequence length while integrating local details, so the model can capture features of the text at several levels of granularity.

The contraction path of AU-Net is divided into multiple stages. In the first stage, the model processes raw bytes directly, using a restricted attention mechanism to keep computation feasible. In the second stage, the model pools at word boundaries, abstracting byte-level information into word-level representations. In the third stage, pooling is performed at every second word, capturing broader context and strengthening the model's grasp of the text's meaning.
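The staged pooling described above can be sketched by computing which byte positions survive at each stage. This is an illustrative sketch, not the authors' implementation: it assumes words are delimited by whitespace and that pooling simply keeps the position of the last byte of each word; the function names are hypothetical.

```python
import re

def word_boundaries(data: bytes) -> list[int]:
    """Return the index of the last byte of each whitespace-delimited word."""
    return [m.end() - 1 for m in re.finditer(rb"\S+", data)]

def every_nth(positions: list[int], n: int) -> list[int]:
    """Keep every n-th boundary, e.g. the 2nd, 4th, ... word end for n=2."""
    return positions[n - 1::n]

data = "the cat sat on mats".encode("utf-8")

stage1 = list(range(len(data)))   # stage 1: one position per byte
stage2 = word_boundaries(data)    # stage 2: one position per word
stage3 = every_nth(stage2, 2)     # stage 3: one position per two words

print(len(stage1), len(stage2), len(stage3))
```

Each stage works on a shorter sequence than the one before it, which is what lets the deeper stages afford wider context.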

The expansion path gradually restores the compressed information using a multi-linear upsampling strategy, in which each position's vector is transformed according to its relative position within its segment, improving the fusion of high-level information with local details. In addition, skip connections ensure that important local detail is not lost during restoration, which improves the model's generation capability and prediction accuracy.
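One way to picture position-dependent upsampling with a skip connection is the sketch below. It is written under stated assumptions rather than taken from the AU-Net code: each coarse vector is repeated across its segment, a separate linear map is applied for each offset within the segment, and the result is added to the fine-grained vectors saved from the contraction path. All names (`upsample`, `add_skip`, `offset_weights`) are hypothetical, and details such as causal shifting are ignored.

```python
def matvec(w, x):
    """Multiply matrix w (list of rows) by vector x."""
    return [sum(wij * xj for wij, xj in zip(row, x)) for row in w]

def upsample(coarse, segment_lengths, offset_weights):
    """Expand each coarse vector over its segment, one linear map per offset."""
    fine = []
    for vec, length in zip(coarse, segment_lengths):
        for offset in range(length):
            # Offsets beyond the last available map reuse that last map.
            w = offset_weights[min(offset, len(offset_weights) - 1)]
            fine.append(matvec(w, vec))
    return fine

def add_skip(upsampled, skip):
    """Skip connection: add the fine-grained vectors elementwise."""
    return [[u + s for u, s in zip(uv, sv)] for uv, sv in zip(upsampled, skip)]

coarse = [[1.0, 2.0], [3.0, 4.0]]      # two word-level vectors
segment_lengths = [2, 1]               # fine positions covered by each one
offset_weights = [
    [[1, 0], [0, 1]],                  # offset 0: identity
    [[2, 0], [0, 2]],                  # offset 1: scale by 2
]
skip = [[0.5, 0.5], [0.5, 0.5], [0.5, 0.5]]

restored = add_skip(upsample(coarse, segment_lengths, offset_weights), skip)
print(restored)
```

In a trained model the per-offset maps would be learned; fixed matrices are used here only to keep the arithmetic easy to follow.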

During inference, AU-Net generates text autoregressively, which keeps the output coherent and accurate while improving inference efficiency. The architecture offers a new direction for the development of large language models, with greater flexibility and broader applicability than fixed-vocabulary tokenization.
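Autoregressive generation at the byte level follows the usual loop: predict one byte, append it, and feed the longer sequence back in. The sketch below shows only that loop. `toy_next_byte` is a hypothetical stand-in for the model and the newline stop byte is an assumption; neither reflects how AU-Net itself is implemented.

```python
from typing import Callable

def generate(prefix: bytes, next_byte: Callable[[bytes], int],
             max_new: int, stop: int = 0x0A) -> bytes:
    """Append predicted bytes one at a time until max_new or the stop byte."""
    out = bytearray(prefix)
    for _ in range(max_new):
        b = next_byte(bytes(out))   # the prediction sees everything so far
        if b == stop:
            break
        out.append(b)
    return bytes(out)

def toy_next_byte(context: bytes) -> int:
    """Hypothetical stand-in for a model: cycles through 'a', 'b', 'c'."""
    return b"abc"[len(context) % 3]

print(generate(b"", toy_next_byte, max_new=5))
```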

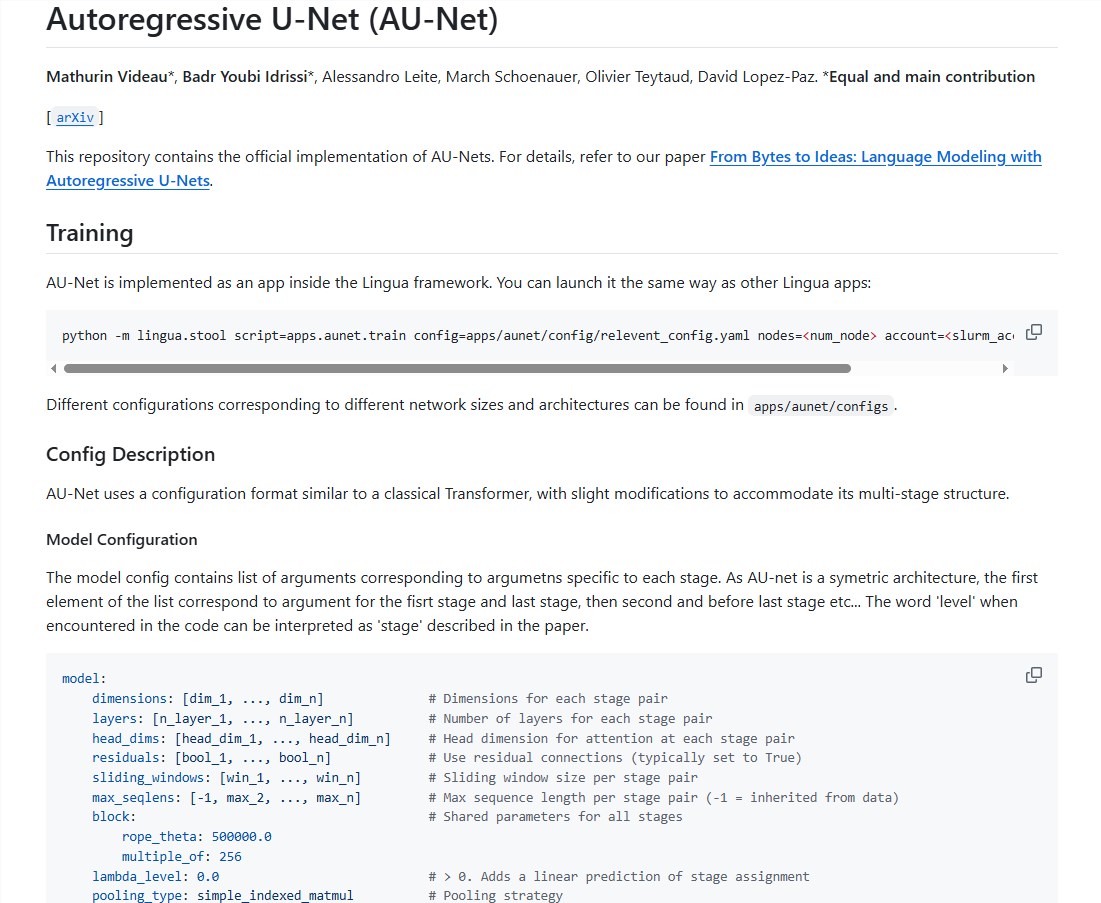

Source code: https://github.com/facebookresearch/lingua/tree/main/apps/aunet

Key points:

- 🚀 The AU-Net architecture is autoregressive and dynamically combines bytes to form multi-level sequence representations.

- 📊 It uses contraction and expansion paths to ensure effective integration of macro semantic information and local details.

- ⏩ The autoregressive generation mechanism improves inference efficiency and ensures the coherence and accuracy of text generation.