Recently, ByteDance's Seed team, together with the University of Hong Kong and Fudan University, introduced POLARIS, a reinforcement learning (RL) training recipe. Through a carefully designed strategy for scaling up RL, POLARIS raises the mathematical reasoning ability of small models to a level comparable to that of large models, offering a new path for optimizing small models in artificial intelligence.

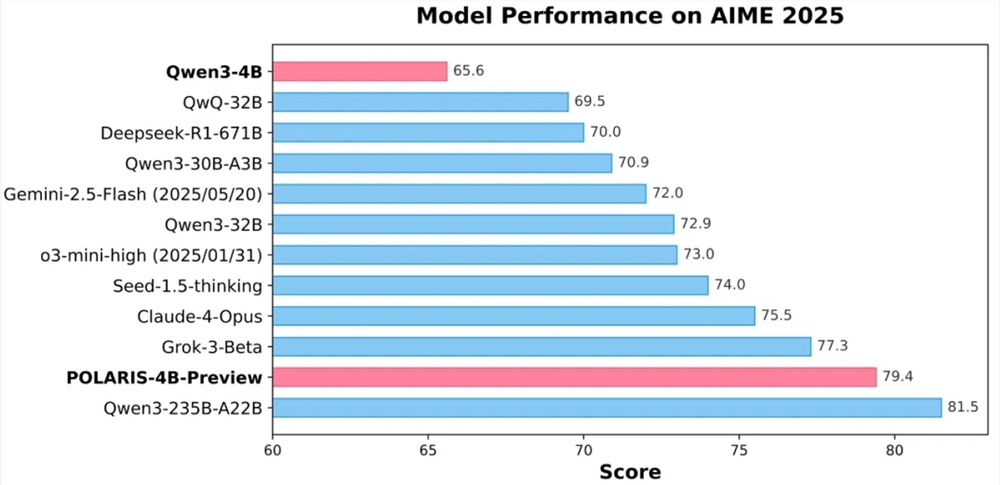

Experimental results show that POLARIS-4B, obtained by training the 4-billion-parameter open-source model Qwen3-4B with POLARIS, reaches accuracies of 79.4% on AIME25 and 81.2% on AIME24, outperforming some larger closed-source models. Because of its small size, POLARIS-4B can be deployed on consumer-grade graphics cards, which substantially lowers the barrier to using it.

The core innovation of POLARIS lies in its training strategy. The research team found that tailoring the training data and hyperparameters to the specific model being trained significantly improves the mathematical reasoning of small models. In practice, the team adjusted the difficulty distribution of the training data so that it is slightly skewed toward hard problems instead of being concentrated at one difficulty level. They also introduced a dynamic data update strategy: during training, samples the model has already learned to solve reliably are removed based on its recent performance, so that the remaining data keeps providing a useful learning signal.
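The dynamic filtering step can be sketched as follows. This is a minimal illustration, not code from the POLARIS repository: the `Sample` class, the `drop_easy_samples` helper, and the 0.9 pass-rate threshold are all hypothetical, and each rollout is assumed to be graded simply as correct or incorrect.

```python
from dataclasses import dataclass

@dataclass
class Sample:
    """Hypothetical training sample with the model's latest pass rate on it."""
    prompt: str
    pass_rate: float = 0.0  # fraction of rollouts judged correct

def drop_easy_samples(dataset, rollout_results, easy_threshold=0.9):
    """Refresh each sample's pass rate from new rollouts, then drop samples
    the model already solves at or above `easy_threshold` (an assumed value)."""
    kept = []
    for sample in dataset:
        results = rollout_results.get(sample.prompt)  # list of bools, or None
        if results:
            sample.pass_rate = sum(results) / len(results)
        if sample.pass_rate < easy_threshold:
            kept.append(sample)
    return kept

# Usage: p1 is always solved and is removed; p2 and p3 stay in the pool.
pool = [Sample("p1"), Sample("p2"), Sample("p3")]
rollouts = {"p1": [True] * 8, "p2": [True, False] * 4, "p3": [False] * 8}
pool = drop_easy_samples(pool, rollouts)
print([s.prompt for s in pool])  # ['p2', 'p3']
```

Dropping a problem once nearly every rollout is correct is one simple way to keep the pool weighted toward hard problems as the model improves; the actual criterion and update schedule used by POLARIS may differ.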

For sampling control, POLARIS carefully tunes the sampling temperature to balance model performance against the diversity of generated reasoning paths. The team's experiments show that temperature strongly affects both: a temperature that is too low limits exploration, while one that is too high degrades the quality of the sampled paths, and either extreme harms training. The team therefore proposed a temperature initialization method that sets the initial degree of exploration, and they adjust the sampling temperature during training to keep the generated content diverse.
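One way to make the temperature initialization concrete is sketched below, under explicit assumptions: diversity is measured as the share of distinct 4-grams across a batch of rollouts, the initial temperature is the lowest candidate that reaches a target diversity, and `toy_sampler` stands in for real model sampling. The metric, the target value, and all function names are hypothetical; POLARIS's own diversity measure and thresholds may differ.

```python
from typing import Callable, List, Sequence

def distinct_ngram_ratio(texts: Sequence[str], n: int = 4) -> float:
    """Share of n-grams across all texts that are unique: a simple diversity proxy."""
    total, unique = 0, set()
    for text in texts:
        tokens = text.split()
        grams = [tuple(tokens[i:i + n]) for i in range(len(tokens) - n + 1)]
        total += len(grams)
        unique.update(grams)
    return len(unique) / total if total else 0.0

def pick_initial_temperature(
    sample_fn: Callable[[float], List[str]],
    candidates: Sequence[float],
    target_diversity: float,
    n: int = 4,
) -> float:
    """Lowest candidate temperature whose rollouts reach the target diversity;
    falls back to the highest candidate if none does."""
    for t in sorted(candidates):
        if distinct_ngram_ratio(sample_fn(t), n) >= target_diversity:
            return t
    return max(candidates)

def toy_sampler(t: float) -> List[str]:
    """Stand-in for real model rollouts: higher temperature -> more distinct paths."""
    n_distinct = max(1, min(8, round(t * 4)))
    distinct = [f"step {i} leads to answer {i}" for i in range(n_distinct)]
    return distinct + [distinct[0]] * (8 - n_distinct)

t0 = pick_initial_temperature(toy_sampler, [0.6, 0.8, 1.0, 1.2, 1.4], target_diversity=0.6)
print(t0)  # 1.2
```

Re-running the same selection with the current model at the start of each training stage is one way to adjust the temperature over time: as the policy becomes more deterministic, a higher temperature is needed to reach the same diversity.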

To address the difficulty of training on long contexts, POLARIS applies a length extrapolation technique. By adjusting the rotary position embedding (RoPE), the model can handle sequences longer than those seen during training. This compensates for the limited amount of long-sequence training and improves the model's performance when generating long outputs.
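The article does not say exactly how POLARIS modifies RoPE, so the sketch below uses the simplest well-known variant, linear position interpolation, purely as an illustration: positions are divided by a scale factor so that a longer sequence maps back onto the range of positions seen in training. More refined schemes such as YaRN rescale different frequency bands differently. The function names and the 4096/8192 lengths are hypothetical.

```python
import math
from typing import List

def rope_inv_freq(head_dim: int, base: float = 10000.0) -> List[float]:
    """Standard RoPE inverse frequencies, one per pair of channels."""
    return [base ** (-2 * i / head_dim) for i in range(head_dim // 2)]

def rope_angles(position: float, inv_freq: List[float], scale: float = 1.0) -> List[float]:
    """Rotation angles for one position. scale > 1 applies linear position
    interpolation, squeezing long positions back into the trained range."""
    return [(position / scale) * f for f in inv_freq]

def apply_rope(x: List[float], angles: List[float]) -> List[float]:
    """Rotate each channel pair (x[2i], x[2i+1]) by angles[i]."""
    out = []
    for i, theta in enumerate(angles):
        a, b = x[2 * i], x[2 * i + 1]
        c, s = math.cos(theta), math.sin(theta)
        out += [a * c - b * s, a * s + b * c]
    return out

# Hypothetical setup: trained on 4096-token contexts, run at 8192 tokens.
trained_len, target_len = 4096, 8192
scale = target_len / trained_len  # 2.0
inv = rope_inv_freq(head_dim=8)
# With interpolation, position 8000 gets the same angles position 4000 had in training.
q = apply_rope([1.0, 0.0, 0.5, 0.5, 0.0, 2.0, 3.0, 1.0], rope_angles(8000, inv, scale))
```

Because the rotation only changes angles, it preserves vector norms; interpolation trades some positional resolution for keeping every angle inside the range the model was trained on.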

POLARIS also uses multi-stage RL training. Early stages use a shorter context window; once the model's performance stabilizes, the context window is lengthened stage by stage. This lets the model adapt gradually to more complex reasoning tasks and improves the stability and effectiveness of training.
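A plateau-triggered schedule is one way to implement "lengthen the context window once performance stabilizes." In the sketch below, the `StageSchedule` class, the stage lengths, and the patience and threshold values are hypothetical; the article does not specify how POLARIS decides that performance has stabilized.

```python
from dataclasses import dataclass
from typing import List

@dataclass
class StageSchedule:
    """Hypothetical multi-stage context schedule: move to the next (longer)
    context window once the mean reward stops improving."""
    context_lengths: List[int]
    patience: int = 3          # evaluations without improvement before advancing
    min_delta: float = 0.005   # gains smaller than this count as a plateau
    stage: int = 0
    best: float = float("-inf")
    stale: int = 0

    @property
    def max_len(self) -> int:
        return self.context_lengths[self.stage]

    def update(self, mean_reward: float) -> int:
        """Record one evaluation and return the context length to use next."""
        if mean_reward > self.best + self.min_delta:
            self.best, self.stale = mean_reward, 0
        else:
            self.stale += 1
        if self.stale >= self.patience and self.stage + 1 < len(self.context_lengths):
            self.stage += 1
            self.best, self.stale = float("-inf"), 0  # restart plateau tracking
        return self.max_len

sched = StageSchedule([8192, 16384, 32768], patience=2)
print([sched.update(r) for r in [0.30, 0.40, 0.401, 0.402]])  # [8192, 8192, 8192, 16384]
```

Resetting the best reward at each stage change avoids comparing rewards across context windows, since a longer window can shift the reward level.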

The detailed training recipe, training data, training code, and experimental models of POLARIS have all been open-sourced. The research team has verified its effectiveness on multiple mainstream reasoning benchmarks, where models of different sizes and from different model families all showed significant performance gains after training with POLARIS.

GitHub Homepage:

https://github.com/ChenxinAn-fdu/POLARIS

Hugging Face Homepage:

https://huggingface.co/POLARIS-Project