Microsoft today open-sourced Phi-4-mini-flash-reasoning, the latest model in its Phi-4 family, announcing it on its official website. Building on the series' strengths of a small parameter count and strong performance, the new model is designed for scenarios constrained by compute, memory, and latency. It can run on a single GPU and is particularly well suited to edge devices such as laptops and tablets.
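As a rough sanity check on the single-GPU claim, the sketch below estimates how much memory the weights alone would take. It assumes that the 3.8B parameter count reported later in this article for Phi-4-mini-Flash also applies to Phi-4-mini-flash-reasoning. The listed precisions are illustrative assumptions (the article does not say which precision is used for deployment), and KV cache, activations, and runtime overhead are not counted.

```python
# Rough weight-memory estimate for a ~3.8B-parameter model.
# Assumption: only the weights are counted; KV cache, activations and
# framework overhead are ignored.

PARAMS = 3.8e9  # parameter count reported for Phi-4-mini-Flash

# Bytes per parameter for a few common storage precisions (illustrative).
BYTES_PER_PARAM = {
    "fp32": 4.0,
    "bf16": 2.0,
    "int8": 1.0,
    "int4": 0.5,
}


def weight_memory_gib(params: float, dtype: str) -> float:
    """Return the memory needed to store the weights, in GiB (2**30 bytes)."""
    return params * BYTES_PER_PARAM[dtype] / 2**30


if __name__ == "__main__":
    for dtype in BYTES_PER_PARAM:
        print(f"{dtype}: {weight_memory_gib(PARAMS, dtype):.1f} GiB")
```

Under these assumptions the bf16 weights come to roughly 7.1 GiB, which fits on a single 16 GB GPU with room left for the KV cache, and 4-bit storage would bring the weights down to about 1.8 GiB.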

Phi-4-mini-flash-reasoning brings a significant gain in inference efficiency. Compared with the previous version, it delivers up to 10 times higher throughput and cuts average latency by a factor of 2 to 3. These gains make it particularly effective for advanced mathematical reasoning and well suited to applications in education and research.

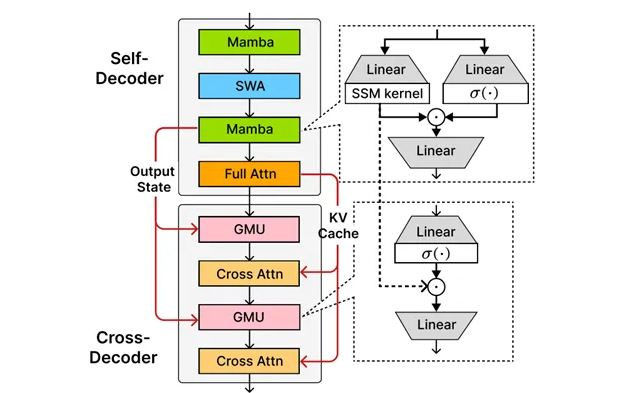

At the core of this release is SambaY, a novel decoder-hybrid-decoder architecture developed jointly by Microsoft and Stanford University. SambaY introduces gated memory units (GMUs), which share memory efficiently across layers. This improves decoding efficiency while keeping prefill time complexity linear, strengthens long-context performance, and removes the need for explicit positional encoding.
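To make the idea of cross-layer memory sharing more concrete, here is a minimal, hypothetical sketch of a gated memory unit in plain Python. It assumes a simplified form in which the current layer's hidden state is projected and passed through a SiLU gate, multiplied element-wise with a memory vector that an earlier layer produced once, and then projected again. The function names, the SiLU choice, and the identity weights in the demo are illustrative assumptions, not Microsoft's implementation.

```python
import math
from typing import List

Vector = List[float]
Matrix = List[List[float]]  # row-major, shape (out_dim, in_dim)


def matvec(w: Matrix, x: Vector) -> Vector:
    """Matrix-vector product w @ x."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]


def silu(z: float) -> float:
    """SiLU activation z * sigmoid(z), written to avoid overflow for large |z|."""
    if z >= 0:
        return z / (1.0 + math.exp(-z))
    e = math.exp(z)
    return z * e / (1.0 + e)


def gated_memory_unit(x: Vector, memory: Vector, w_gate: Matrix, w_out: Matrix) -> Vector:
    """Hypothetical GMU: gate a shared memory vector with the current hidden state.

    x      -- hidden state of the current layer
    memory -- output of an earlier layer, computed once and only read here
    """
    gate = [silu(g) for g in matvec(w_gate, x)]    # gate derived from the current layer
    gated = [m * g for m, g in zip(memory, gate)]  # element-wise gating of the memory
    return matvec(w_out, gated)                    # output projection


def identity(n: int) -> Matrix:
    """n x n identity matrix, used as placeholder weights in the demo."""
    return [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]


if __name__ == "__main__":
    # The memory vector is produced once by an earlier layer ...
    shared_memory = [0.5, -1.0, 2.0]
    # ... and read by two later layers, each with its own hidden state.
    for x in ([1.0, 0.0, -1.0], [0.2, 0.4, 0.6]):
        print(gated_memory_unit(x, shared_memory, identity(3), identity(3)))
```

In this sketch, each layer that reuses the memory pays only for two small projections and an element-wise product rather than recomputing the memory itself. That is the intuition behind the decoding savings, although the real architecture's cost accounting is more involved.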

SambaY's efficiency gains are most visible in long-generation tasks. With a 2K-token prompt and a 32K-token generation, its decoding throughput is 10 times higher than that of the earlier Phi-4-mini-reasoning model. In mathematical reasoning tests, the model also performs strongly on complex problems, producing clear and logically coherent solution steps.
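Why does a 2K-prompt, 32K-generation workload stress decoding in particular? The toy count below is an illustrative cost model, not Microsoft's benchmark methodology. It tallies how many cached positions a single full-attention layer reads while generating, assuming each new token attends to the prompt plus every token generated before it. Prefill work is deliberately left out.

```python
def full_attention_decode_reads(prompt_len: int, gen_len: int) -> int:
    """Cached positions read by one full-attention layer during decoding.

    Toy assumption: generating token t (0-based) reads prompt_len + t
    cached positions, so the total is
        sum_{t=0}^{G-1} (P + t) = G*P + G*(G-1)/2.
    """
    return gen_len * prompt_len + gen_len * (gen_len - 1) // 2


def generated_fraction(prompt_len: int, gen_len: int) -> float:
    """Share of the final sequence that was produced by decoding."""
    return gen_len / (prompt_len + gen_len)


if __name__ == "__main__":
    K = 1024
    # Same total length, opposite split between prompt and generation.
    for prompt, gen in [(2 * K, 32 * K), (32 * K, 2 * K)]:
        reads = full_attention_decode_reads(prompt, gen)
        share = generated_fraction(prompt, gen)
        print(f"prompt={prompt:>6} gen={gen:>6} "
              f"decode reads={reads:>13,} generated share={share:.1%}")
```

For the 2K/32K setting the count is 603,963,392 reads, nearly nine times the figure for the reversed 32K/2K split of the same total length, and generated tokens make up about 94% of the final sequence. Under these assumptions, per-token decoding cost dominates, which is exactly where SambaY's throughput gains are reported.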

Microsoft also evaluated SambaY on long-context retrieval benchmarks such as Phonebook and RULER. With a 32K-token context, SambaY reached 78.13% accuracy on the Phonebook task, well ahead of the other models in the comparison. This result points to SambaY's strength in long-context understanding and generation.
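Phonebook-style tests check whether a model can retrieve one specific fact buried in a long context. The hypothetical generator below builds such an item (a list of names with phone numbers, followed by a question about one name) and scores answers by whether they contain the expected number. The prompt format, the name scheme, and the substring scoring rule are assumptions for illustration; the article does not describe the benchmark's exact format.

```python
import random
from typing import Callable, List, Tuple


def make_phonebook_item(num_entries: int, rng: random.Random) -> Tuple[str, str]:
    """Build one Phonebook-style prompt and its expected answer (hypothetical format)."""
    names = [f"Person{i:05d}" for i in range(num_entries)]
    numbers = [
        f"{rng.randint(200, 999)}-{rng.randint(100, 999)}-{rng.randint(1000, 9999)}"
        for _ in range(num_entries)
    ]
    book = "\n".join(f"{name}: {number}" for name, number in zip(names, numbers))
    target = rng.randrange(num_entries)
    prompt = f"{book}\n\nWhat is the phone number of {names[target]}?"
    return prompt, numbers[target]


def accuracy(model: Callable[[str], str], items: List[Tuple[str, str]]) -> float:
    """Fraction of items whose model output contains the expected number."""
    correct = sum(1 for prompt, expected in items if expected in model(prompt))
    return correct / len(items)


def lookup_model(prompt: str) -> str:
    """Stand-in 'model' that parses the phonebook and answers exactly."""
    book_text, question = prompt.rsplit("\n\n", 1)
    name = question.removeprefix("What is the phone number of ").rstrip("?")
    book = dict(line.split(": ", 1) for line in book_text.splitlines())
    return book[name]


if __name__ == "__main__":
    rng = random.Random(42)
    items = [make_phonebook_item(num_entries=100, rng=rng) for _ in range(20)]
    print("lookup model:", accuracy(lookup_model, items))
    print("empty model: ", accuracy(lambda prompt: "", items))
```

The exact-lookup stand-in scores 1.0 and the empty-answer model scores 0.0; a real evaluation would plug in a language model instead. Filling a 32K-token context would take far more entries than the demo uses, and how many depends on the tokenizer.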

To test how well SambaY scales, Microsoft ran a large-scale pre-training experiment with the 3.8B-parameter Phi-4-mini-Flash model on 5T tokens of data. Training ran into some difficulties, but after techniques such as label smoothing and attention dropout were introduced, the model converged and showed significant gains on knowledge-intensive tasks.
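Label smoothing and attention dropout are both standard regularization techniques. The sketch below shows textbook versions of each in plain Python: cross-entropy against a target distribution that moves a fraction `epsilon` of the probability mass from the true class to a uniform spread over all classes, and inverted dropout applied to one row of attention probabilities. The default `epsilon=0.1` and the dropout rates in the demo are common choices assumed here; the article does not state the values Microsoft used.

```python
import math
import random
from typing import List


def log_softmax(logits: List[float]) -> List[float]:
    """Numerically stable log-softmax."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(z - m) for z in logits))
    return [z - log_sum for z in logits]


def label_smoothed_cross_entropy(logits: List[float], target: int,
                                 epsilon: float = 0.1) -> float:
    """Cross-entropy against a smoothed one-hot target.

    Every class receives epsilon / K; the true class additionally receives
    1 - epsilon. With epsilon = 0 this is ordinary cross-entropy.
    """
    k = len(logits)
    log_probs = log_softmax(logits)
    smoothed = [epsilon / k] * k
    smoothed[target] += 1.0 - epsilon
    return -sum(q * lp for q, lp in zip(smoothed, log_probs))


def attention_dropout(weights: List[float], p: float,
                      rng: random.Random) -> List[float]:
    """Inverted dropout on one row of attention probabilities (training only).

    Each weight is zeroed with probability p; survivors are scaled by
    1 / (1 - p) so the expected value of every entry is unchanged.
    """
    if not 0.0 <= p < 1.0:
        raise ValueError("p must be in [0, 1)")
    keep = 1.0 - p
    return [w / keep if rng.random() < keep else 0.0 for w in weights]


if __name__ == "__main__":
    logits = [4.0, 1.0, 0.5]
    print(label_smoothed_cross_entropy(logits, target=0, epsilon=0.0))
    print(label_smoothed_cross_entropy(logits, target=0, epsilon=0.1))
    print(attention_dropout([0.7, 0.2, 0.1], p=0.1, rng=random.Random(0)))
```

Label smoothing penalizes overconfident predictions (the smoothed loss stays above zero even for a perfectly confident correct answer), while attention dropout randomly removes attention links during training; both are generic ways to regularize a model, shown here only to illustrate the named techniques.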

Hugging Face model page: https://huggingface.co/microsoft/Phi-4-mini-flash-reasoning

NVIDIA API: https://build.nvidia.com/microsoft

Key points:

🌟 Microsoft has launched Phi-4-mini-flash-reasoning, which delivers up to 10 times higher reasoning throughput and is light enough to run on laptops.

🔍 The new SambaY architecture boosts decoding performance through efficient cross-layer memory sharing, making the model well suited to long text generation and mathematical reasoning.

📈 It performs strongly on benchmarks, reaching 78.13% accuracy on the Phonebook task with a 32K-token context, which demonstrates strong long-context understanding.