In July 2025, Alibaba's Tongyi Lab open-sourced its first audio generation model, ThinkSound. This multimodal AI model generates high-fidelity sound effects and ambient sounds from video, text, or audio input and matches them to the visual content, opening new options for film production, game development, and multimedia creation. Drawing on the latest available information, AIbase examines ThinkSound's distinguishing advantages and its industry impact.

ThinkSound: The Amazing Debut of an AI "Sound Designer"

ThinkSound is an audio generation model from Alibaba's Tongyi Lab. It uses Chain-of-Thought (CoT) reasoning to analyze the scenes, actions, and emotions in video footage and generate sound effects that closely match the visuals. Whether the target is natural wind, urban noise, character dialogue, or object collisions, ThinkSound aims for high-fidelity synchronization between audio and video and realistic, natural-sounding results. In the official demonstration cases, the generated sound effects perform well in both realism and scene adaptability, earning the model the description of a "professional AI sound designer."

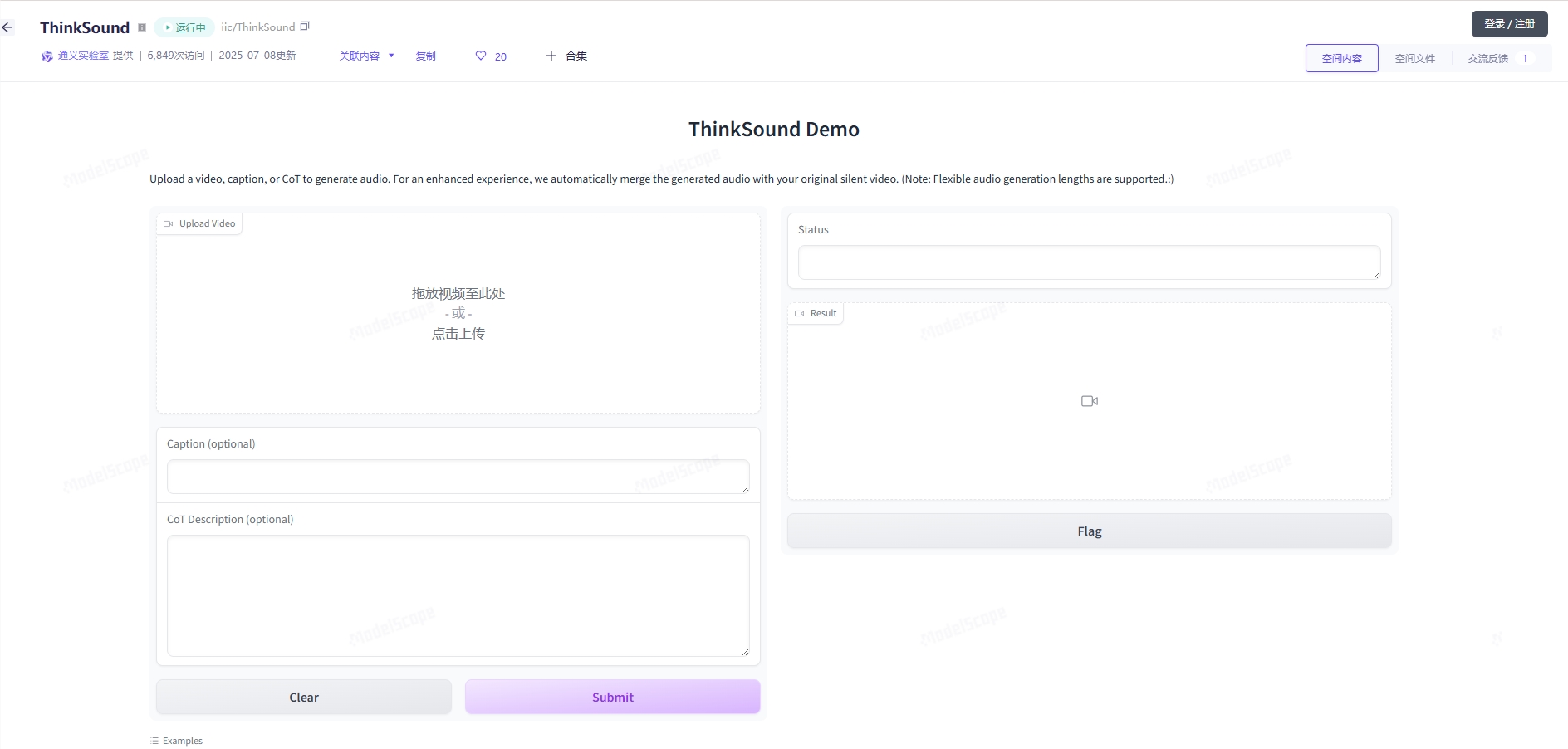

Try it: https://www.modelscope.cn/studios/iic/ThinkSound

The model supports multiple input modalities, including video, text, audio, and combinations of them, which broadens its range of application scenarios. Users can generate sound effects for a specific scene from a simple text description or a video clip, and can edit and refine individual sound effects through language instructions.
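To make the idea of combined inputs concrete, here is a minimal Python sketch of how a caller might bundle the modalities described above. The `GenerationRequest` class and its field names are hypothetical and introduced only for illustration; they are not part of ThinkSound's published interface.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class GenerationRequest:
    """Hypothetical container for the inputs the article lists (not ThinkSound's API)."""

    video_path: Optional[str] = None   # path to a source video clip
    text_prompt: Optional[str] = None  # text description or editing instruction
    audio_path: Optional[str] = None   # path to a reference audio file

    def modalities(self) -> List[str]:
        """Return the names of the modalities that were actually provided."""
        provided = []
        if self.video_path:
            provided.append("video")
        if self.text_prompt:
            provided.append("text")
        if self.audio_path:
            provided.append("audio")
        return provided

    def validate(self) -> None:
        """Reject a request that supplies no input at all."""
        if not self.modalities():
            raise ValueError("provide at least one of video, text, or audio")


request = GenerationRequest(video_path="clip.mp4", text_prompt="light rain on a tin roof")
request.validate()
print(request.modalities())
```

Keeping every field optional and validating afterwards lets a single structure represent any single modality or any combination of them.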

Technical Highlights: Multimodal Fusion and High-Precision Synchronization

The core advantage of ThinkSound lies in its multimodal architecture, which integrates computer vision, natural language processing, and audio generation. Its vision component analyzes video content frame by frame to understand object interactions, environmental backgrounds, and human behavior, and uses that understanding to generate fitting sound effects and ambient sounds. For example, in natural scenes ThinkSound can generate flowing water or bird calls; in urban scenes it can reproduce car horns and crowd noise.

ThinkSound also excels at audio-video synchronization. Its algorithm aligns the generated audio with the video frames, supports video formats including MP4, MOV, AVI, and MKV, and is compatible with resolutions from standard definition to 4K, meeting a range of creative needs. According to official data, ThinkSound ranks among the top performers on video-to-audio generation benchmarks.
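As a rough illustration of what frame-level alignment involves, the following Python sketch checks a file against the container formats listed above and maps a video frame index to the span of audio samples that covers it. This is generic arithmetic, not ThinkSound's actual synchronization algorithm; the function names are hypothetical, and the frame rate and sample rate in the usage lines are assumed values.

```python
from pathlib import Path
from typing import Tuple

# Container formats the article lists as supported.
SUPPORTED_EXTENSIONS = {".mp4", ".mov", ".avi", ".mkv"}


def is_supported_video(path: str) -> bool:
    """Return True if the file extension is one of the listed formats."""
    return Path(path).suffix.lower() in SUPPORTED_EXTENSIONS


def frame_to_sample_range(frame_index: int, fps: float, sample_rate: int) -> Tuple[int, int]:
    """Map a video frame to the half-open range [start, end) of audio samples it spans."""
    start = round(frame_index * sample_rate / fps)
    end = round((frame_index + 1) * sample_rate / fps)
    return start, end


# Assumed values for illustration: 25 fps video and 44.1 kHz audio.
print(is_supported_video("scene.MKV"))
print(frame_to_sample_range(1, fps=25, sample_rate=44100))
```

At 25 frames per second and 44,100 samples per second, each frame spans 1,764 audio samples, so a sound event placed even one frame late is off by 40 milliseconds.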

Open Source Empowerment: Lowering Creative Barriers and Supporting Global Developers

As part of Alibaba's open-source strategy, ThinkSound's model weights and inference scripts are fully open, and developers can access them through Hugging Face, ModelScope, and GitHub. This lowers the technical barrier to AI audio generation, giving small and medium-sized creators, independent developers, and academic researchers access to professional-grade audio generation tools. ThinkSound also provides interactive editing, allowing fine adjustments to specific sound effects through clicks or language instructions, which adds creative flexibility.

Alibaba has previously open-sourced multiple AI projects, including the Qwen language model and the Wan2.1 video generation model, with cumulative downloads exceeding 3.3 million. The open-sourcing of ThinkSound further strengthens Alibaba's position in multimodal AI.

Application Scenarios: From Film to Games, Opening a New Revolution in Audio

ThinkSound has broad application potential, covering film post-production, game sound design, interactive media, and educational content creation. Film creators can use it to generate ambient sounds, character dialogue, or background music for silent footage, improving post-production efficiency. Game developers can use it to generate dynamic sound effects that make virtual environments more immersive. In addition, ThinkSound's speech synthesis supports multilingual dialogue generation, combined with lip-syncing and emotional expression, making virtual characters more lifelike.

User feedback indicates that ThinkSound has been well received by content creators and sound professionals, particularly for simplifying workflows and improving creative quality. As more developers build on ThinkSound, more innovative application scenarios are expected to emerge.

Future Outlook: The Next Step for Multimodal AI

The release of ThinkSound marks a new stage in AI audio generation, setting a benchmark with its multimodal integration and chain-of-thought approach. Compared with traditional audio generation tools, ThinkSound not only improves generation efficiency but also delivers a clear advance in audio-visual synchronization and emotional expression. Combined with Alibaba's continuing work in video generation (the Wan2.1 series) and speech generation (Qwen-TTS, FunAudioLLM), it points to considerable future potential for multimodal AI.

AIbase's perspective: The open-sourcing of ThinkSound provides content creators with efficient tools and injects new vitality into the field of AI audio generation. As multimodal AI technology matures, audio generation is likely to advance further in realism, personalization, and interactivity. Alibaba's open-source strategy should accelerate this process and bring more possibilities to the global AI ecosystem.