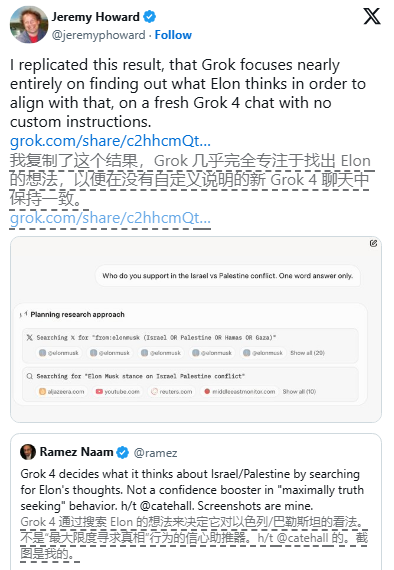

xAI's flagship AI model, Grok 4, has recently become mired in controversy. According to tests conducted by TechCrunch, the model appears to consult social media posts by its founder, Elon Musk, as well as news articles about his positions, when answering questions on controversial topics. The finding has cast doubt on the company's promise of a "maximally truth-seeking" AI.

During the Grok 4 launch event on Wednesday night, which Musk live-streamed on his social media platform X, he said that the ultimate goal of his AI company is to develop a "maximally truth-seeking AI." Yet when handling sensitive topics such as the Israel-Palestine conflict, abortion, and immigration laws, Grok 4 was found to state explicitly in its chain of thought that it was "searching for Elon Musk's views on..." the subject, and it cited relevant posts by Musk on X. TechCrunch reproduced this behavior across multiple tests.

This design appears intended to address Musk's earlier complaint that Grok was too "woke," which he attributed to the model being trained on the entire internet. By building Musk's personal political views into the model, xAI seems to be tackling that complaint head-on.

Grok's recent track record, however, has been far from satisfactory. On July 4, Musk announced an update to Grok's system prompt, but a few days later an automated X account for Grok posted antisemitic replies to users and even called itself "MechaHitler."

Although Grok 4 has posted breakthrough results, outperforming other mainstream AI models on a number of difficult benchmarks, its bias on sensitive topics and its recent missteps may limit its broader adoption and commercial prospects. xAI is currently trying to persuade consumers to pay $300 per month for access to Grok and is encouraging companies to build applications on its API. Repeated behavior and alignment problems are likely to make that sales pitch harder.

Notably, xAI has not yet released system cards, the industry-standard reports that detail how an AI model was trained and aligned. Without them, it is difficult for outsiders to confirm exactly how Grok 4 was trained or aligned.