Mistral AI Launches Mixtral 8x7B: A Revolutionary SMoE Language Model for Machine Learning, Comparable to GPT-3.5

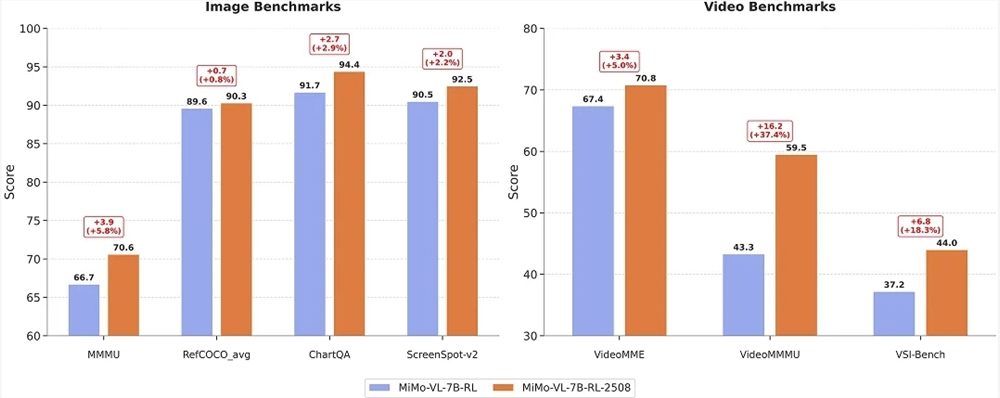

The Xiaomi large model team has announced the open-source release of its latest multimodal large model, Xiaomi MiMo-VL-7B-2508, which comes in two versions: RL and SFT. According to official data, the new model sets new records in four core capabilities: subject reasoning, document understanding, GUI grounding, and video understanding. Its MMMU score passed the 70-point mark for the first time, ChartQA rose to 94.4, ScreenSpot-v2 reached 92.5, and VideoMME improved to 70.8.

PyTorch 2.8 enhances Intel CPU LLM inference with 20% lower latency, adds Intel GPU support, and improves SYCL/ROCm compatibility.

Lava Payments raised $5.8M in seed funding to develop an AI agent payment system that enables seamless transactions via a universal credit wallet. Lerer Hippeau led the round.

This article analyzes the widespread use of purple themes in AI-generated user interfaces, exploring the phenomenon's origins, technical causes, and potential impact on future UI design. The study finds that it stems from the overrepresentation of the Tailwind CSS framework's default color scheme in AI training data, showing how human design decisions can have unexpected long-term effects once they pass through the training of machine learning models.

Cursor offers free GPT-5 access, with quotas for paid users. GPT-5 excels in coding, math, and workflows, surpassing Claude Sonnet 4. A new CLI tool enables AI-powered code generation. Ranked #1 on LMArena, GPT-5 boosts developer productivity.

dots.ocr is a lightweight 1.7B-parameter multilingual document parser with OCR capabilities. It processes single-page PDFs in seconds, supports 100 languages, accurately identifies layout elements, and excels at table and formula parsing (with LaTeX output). It is well suited to digitization, though complex tables and images remain challenging.