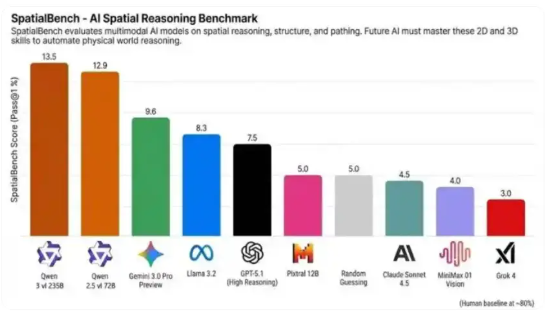

Alibaba's Qwen vision models took the top two positions on SpatialBench, a third-party spatial reasoning benchmark. Qwen3-VL scored 13.5 points and Qwen2.5-VL scored 12.9 points, well ahead of Gemini 3.0 Pro Preview (9.6 points) and GPT-5.1 (7.5 points). All of these scores nonetheless remain far below the human baseline of 80 points.

Features of the Benchmark

SpatialBench focuses on 2D/3D spatial, structural, and path reasoning. It includes complex tasks such as circuit analysis, CAD engineering, and molecular biology, and it has been described as a "litmus test for embodied intelligence."

Model Highlights

- 3D Detection Upgrade: Qwen3-VL adds rotated bounding-box output and depth-estimation heads. These let the model infer object orientation and changes in viewpoint, and they raise AP in occluded scenes by 18%.

- Visual Programming: Given a sketch or a 10-second video, the model generates executable Python + OpenCV code, delivering "what you see is what you get."

- Range of Sizes: Qwen3-VL comes as 2B/4B/8B/32B dense models and as MoE versions such as 30B-A3B and 235B-A22B. Its reasoning (Thinking) edition outperforms Gemini 2.5 Pro by an average of 6.4 points across 32 core capabilities.
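The article does not say how Qwen3-VL encodes its rotated bounding boxes. For illustration only, a rotated box is commonly described by its center, width, height, and rotation angle. The sketch below assumes that hypothetical `(cx, cy, w, h, theta)` convention, with `theta` in radians, and converts such a box into its four corner points. It is not Qwen's output format. OpenCV's `cv2.boxPoints` does a comparable conversion for its own `RotatedRect` type, which uses degrees.

```python
import math


def rotated_box_corners(cx, cy, w, h, theta):
    """Return the four corners of a w x h box centred at (cx, cy), rotated by
    theta radians about its centre. The (cx, cy, w, h, theta) parameterization
    is a hypothetical convention, not Qwen3-VL's documented output."""
    cos_t, sin_t = math.cos(theta), math.sin(theta)
    # Corner offsets from the centre before rotation.
    offsets = [(-w / 2, -h / 2), (w / 2, -h / 2), (w / 2, h / 2), (-w / 2, h / 2)]
    corners = []
    for dx, dy in offsets:
        # Rotate each offset by theta, then translate it back to the centre.
        x = cx + dx * cos_t - dy * sin_t
        y = cy + dx * sin_t + dy * cos_t
        corners.append((x, y))
    return corners


# With theta = 0 the corners are simply the axis-aligned box.
print(rotated_box_corners(0, 0, 4, 2, 0))
```

Rotating the same 4 x 2 box by a quarter turn (`theta = math.pi / 2`) swaps its horizontal and vertical extents. This orientation information is lost in an axis-aligned box.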

Open Source Schedule

Qwen2.5-VL has been fully open-sourced. Qwen3-VL's weights and toolchain are expected to be released in Q2 2025, alongside the launch of the Qwen App, where users can try the model for free.

Implementation Progress

Alibaba Cloud revealed that Qwen3-VL has already entered proof-of-concept trials in logistics robots, AR assembly, smart ports, and other scenarios, with a spatial positioning error of less than 2 cm. In 2026, the company plans to launch an end-to-end "vision-action" model that gives robots real-time visual servoing capabilities.
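Alibaba Cloud has not described how its planned "vision-action" model will perform visual servoing. For readers unfamiliar with the term, the loop below is a simplified, textbook-style illustration and not the Qwen design. A proportional controller repeatedly measures the pixel error between where a tracked point appears and where it should appear, then commands a correction proportional to that error. Full image-based visual servoing also uses an interaction (image Jacobian) matrix to map pixel error to camera motion, and this sketch omits it. The function names, the gain, and the toy assumption that a command moves the feature by exactly that many pixels are all hypothetical.

```python
def servo_step(observed, target, gain=0.5):
    """One proportional visual-servoing step: return a correction (in pixels)
    that moves the observed feature toward the target image position."""
    ex = target[0] - observed[0]
    ey = target[1] - observed[1]
    return gain * ex, gain * ey


def simulate(start, target, gain=0.5, tol=0.5, max_steps=100):
    """Toy closed loop. Hypothetically assumes each command shifts the feature
    by exactly the commanded amount, and stops once both pixel errors are
    within tol. Returns (steps_taken, final_position)."""
    x, y = start
    for step in range(max_steps):
        if abs(target[0] - x) <= tol and abs(target[1] - y) <= tol:
            return step, (x, y)
        vx, vy = servo_step((x, y), target, gain)
        x, y = x + vx, y + vy
    return max_steps, (x, y)


steps, final = simulate((0, 0), (64, 32))
print(steps, final)
```

With a gain of 0.5 the remaining error halves on every iteration. A real controller must balance this convergence speed against sensor noise and actuator limits, which is why "real-time" operation matters for robots.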