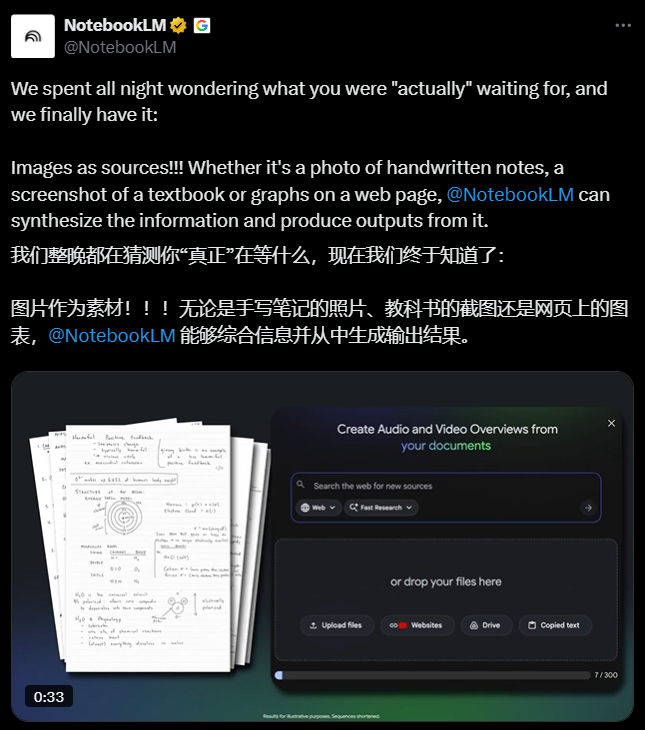

Google has announced that NotebookLM now accepts images as data sources. When users upload photos of blackboard notes, scanned textbook pages, or pictures taken on the street, the system automatically runs OCR and semantic analysis, so users can search the content of those images directly in natural language. The feature is available now, free of charge, on all platforms. Google said that in the coming weeks it will add a local processing option to reduce the need to upload sensitive information to the cloud.

At its core, the new NotebookLM uses a multimodal model that can distinguish handwritten from printed regions, extract table structures, and automatically link the extracted content to a user's existing text, audio, and video notes. Google demonstrated several use cases: after photographing notes on a classroom board, a user asks "How is the formula in the lower left corner derived?" and the system immediately locates the formula and generates a step-by-step explanation; after scanning page 127 of a textbook, a user can query the values in its table cells directly; and uploading a photo of a street coffee shop's menu lets a user pull out the price of a latte.

Google said that within 48 hours of launch, educational accounts had uploaded more than 500,000 pages of images, a 340% increase over the previous period. The company plans to add a real-time camera interface for AR glasses to NotebookLM next year, enabling users to "ask anything you see." For now, image processing draws on the existing free quotas, and Google has not said whether it will introduce a paid acceleration tier.