ByteDance recently announced InfinityStar, a new framework that significantly improves video generation efficiency, cutting the time to generate a 5-second 720p video to just 58 seconds. Beyond speed, its unified architecture supports several visual generation tasks, including image generation, text-to-video generation, and video continuation.

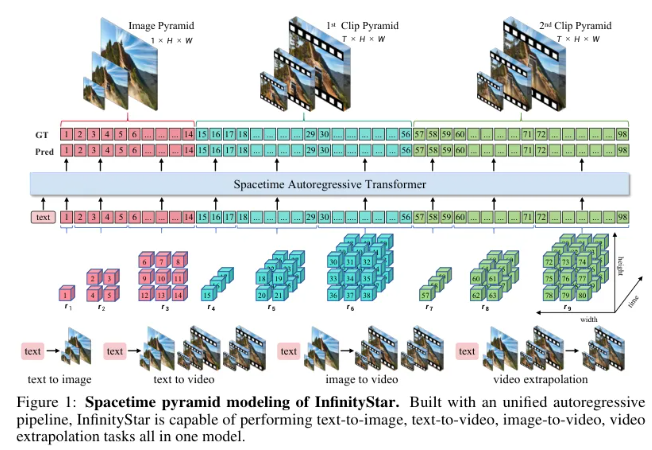

InfinityStar's design starts from the structure of video data itself. Unlike traditional models that treat a video as a single 3D data block, InfinityStar adopts a spatiotemporal pyramid model that explicitly separates spatial scales from the time dimension. This lets the model decouple appearance information from motion information more effectively when processing videos, which improves generation quality.
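The difference between a single 3D block and a pyramid that separates space from time can be made concrete with a small shape calculation. The sketch below is illustrative only: the function names, grid sizes, and downscaling factors are assumptions chosen for the example, not InfinityStar's actual configuration.

```python
from typing import List, Tuple

# (frames, height, width) of a token grid at one pyramid level
Shape = Tuple[int, int, int]


def spatial_only_pyramid(frames: int, height: int, width: int,
                         factors: List[int]) -> List[Shape]:
    """Coarse-to-fine levels where only height and width change.

    The frame count is the same at every level, so the time
    dimension is kept separate from the spatial scales.
    """
    return [(frames, max(1, height // f), max(1, width // f))
            for f in factors]


def joint_3d_pyramid(frames: int, height: int, width: int,
                     factors: List[int]) -> List[Shape]:
    """Baseline for contrast: the video is treated as one 3D block,
    so time is downscaled together with space."""
    return [(max(1, frames // f), max(1, height // f), max(1, width // f))
            for f in factors]


if __name__ == "__main__":
    factors = [8, 4, 2, 1]  # hypothetical coarse-to-fine schedule
    print("spatial only:", spatial_only_pyramid(16, 32, 32, factors))
    print("joint 3D:    ", joint_3d_pyramid(16, 32, 32, factors))
```

In the spatial-only schedule every level spans all 16 frames, so coarse levels still cover the full motion while finer levels add spatial detail. In the joint schedule the coarsest level has only 2 frames, which mixes temporal and spatial coarsening.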

To improve training efficiency, InfinityStar introduces a knowledge inheritance strategy that uses a pre-trained variational autoencoder (VAE) as a foundation. Starting from it, the new model can learn high-quality video features quickly, significantly reducing training time and computational resource consumption.
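The article does not describe how the inheritance is carried out. As a rough illustration of the general idea, the sketch below shows a generic warm start: parameters whose name and size match a pre-trained model are copied, and everything else keeps its fresh initialization. The function name, parameter names, and values are hypothetical, and this should not be read as InfinityStar's actual procedure.

```python
from typing import Dict, List, Tuple

# Toy stand-in for a model checkpoint: parameter name -> flat list of values
Weights = Dict[str, List[float]]


def inherit_weights(pretrained: Weights,
                    fresh: Weights) -> Tuple[Weights, List[str]]:
    """Copy every pretrained parameter whose name and size match.

    Parameters that are missing from the pretrained checkpoint, or
    whose size differs, keep their fresh initialization.
    Returns the merged weights and the names that were inherited.
    """
    merged: Weights = {}
    inherited: List[str] = []
    for name, init in fresh.items():
        source = pretrained.get(name)
        if source is not None and len(source) == len(init):
            merged[name] = list(source)  # inherit from the pretrained VAE
            inherited.append(name)
        else:
            merged[name] = list(init)    # no match: train from scratch
    return merged, inherited


if __name__ == "__main__":
    # Hypothetical parameter names, for illustration only
    pretrained_vae = {"encoder.conv": [0.5, -0.2], "decoder.conv": [0.1, 0.3]}
    new_model = {
        "encoder.conv": [0.0, 0.0],
        "decoder.conv": [0.0, 0.0],
        "quantizer.codebook": [0.0, 0.0, 0.0],  # new component, not in the VAE
    }
    merged, inherited = inherit_weights(pretrained_vae, new_model)
    print("inherited:", inherited)
    print("merged:", merged)
```

Only the components that are new to the model start from scratch, which is why a warm start of this kind tends to shorten training.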

Experiments show that InfinityStar maintains excellent visual quality while achieving extremely high generation speed. The release of this framework marks an important advancement in visual generation technology and lays the foundation for future long video generation and diverse task processing.

GitHub: https://github.com/FoundationVision/InfinityStar

Key Points:

- 🚀 The InfinityStar framework reduces the time to generate a 5-second 720p video to 58 seconds, significantly improving efficiency.

- 🏗️ It uses a spatiotemporal pyramid model to effectively decouple appearance and motion information, improving generation quality.

- 📈 It introduces a knowledge inheritance strategy, using a pre-trained variational autoencoder (VAE) to accelerate learning and reduce computational costs.