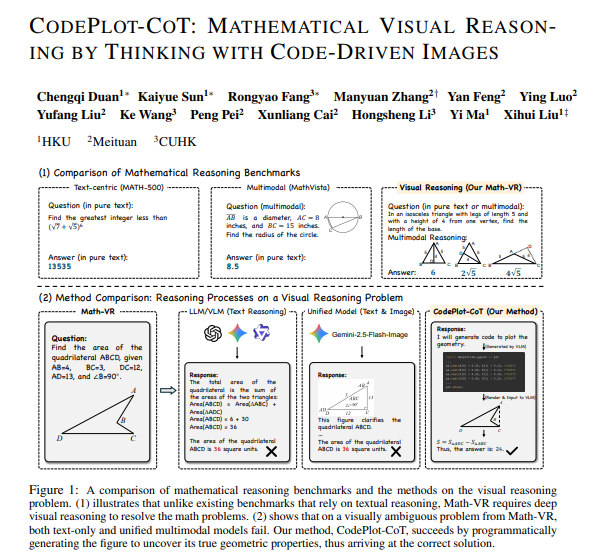

Large language models have long struggled with geometry problems. Models such as GPT-4.1 and Gemini-2.5-Pro perform well at writing and programming, but they make frequent errors on math problems that require drawing auxiliary lines or function graphs.

The root of the problem is that large models are linguistic geniuses, not geometers. They excel at purely text-based reasoning chains and can derive formulas step by step, but they struggle to picture a figure accurately in their minds and reason from it, so they often give incorrect answers.

Recently, a team from the University of Hong Kong and Meituan released a paper titled "CodePlot-CoT: Mathematical Visual Reasoning by Thinking with Code-Driven Images", which offers an innovative solution to this challenge: a way for large models to think while drawing, with highly accurate drawings.

Earlier research explored visual chains of thought, in which models generate or manipulate images directly to aid reasoning. In mathematics, however, this approach has not worked well. Natural images are about pixel-level detail such as texture and lighting, whereas mathematical figures demand exact precision: angles, segment ratios, and point positions must strictly satisfy the geometric constraints. Asking AI to generate such images directly is like asking a freehand painter to produce engineering drawings accurate to the millimeter; the two are entirely different skills. Generative models that work over high-dimensional pixel distributions tend to introduce distortions and cannot guarantee the precision and controllability that mathematics requires.

The paper's core idea is simple: if direct image generation is unreliable, why not let the large model do what it does best, namely write code? On this basis, the team proposed CodePlot-CoT, a code-driven reasoning paradigm.

The process works as follows. First, the large model receives a math problem and begins reasoning. When the reasoning calls for an auxiliary line or a function graph, the model does not generate an image directly; instead, it writes executable plotting code, such as Python Matplotlib code. A Python renderer executes this code and immediately produces an accurate figure. Finally, the rendered image is fed back into the reasoning chain, and the model continues reasoning in text until it reaches the final answer.
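The loop described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the team's implementation: `generate_step`, `Thought`, `PlotCode`, `FinalAnswer`, and `scripted_model` are hypothetical names, and we assume each model call returns exactly one of three things (a text reasoning step, a block of plotting code, or a final answer) and that the renderer turns code into image bytes. The model and renderer are passed in as plain functions so the control flow can be read and run on its own.

```python
from dataclasses import dataclass
from typing import Callable, List, Union


@dataclass
class Thought:
    text: str      # hypothetical: a textual reasoning step


@dataclass
class PlotCode:
    source: str    # hypothetical: executable plotting code, e.g. Matplotlib


@dataclass
class FinalAnswer:
    text: str      # hypothetical: ends the reasoning chain


Step = Union[Thought, PlotCode, FinalAnswer]
Context = List[Union[str, bytes]]  # interleaved text, code, and image bytes


def solve(problem: str,
          generate_step: Callable[[Context], Step],
          render: Callable[[str], bytes],
          max_steps: int = 16) -> str:
    """Interleave model reasoning with code-rendered figures (a sketch)."""
    ctx: Context = [problem]
    for _ in range(max_steps):
        step = generate_step(ctx)
        if isinstance(step, FinalAnswer):
            return step.text
        if isinstance(step, Thought):
            ctx.append(step.text)
        else:
            # The model asked for a figure: keep its code in the chain,
            # run the code in the renderer, and feed the image back in.
            ctx.append(step.source)
            ctx.append(render(step.source))
    raise RuntimeError("no final answer within max_steps")


# Usage with stand-ins: a scripted "model" and a fake renderer.
def scripted_model(ctx: Context) -> Step:
    if not any(isinstance(item, bytes) for item in ctx):
        return PlotCode("plt.plot([0, 1], [0, 1])")  # first, draw
    if len(ctx) == 3:
        return Thought("The line y = x makes 45 degrees with the x-axis.")
    return FinalAnswer("45 degrees")


print(solve("Find the angle between y = x and the x-axis.",
            scripted_model, render=lambda src: b"<png bytes>"))  # 45 degrees
```

In a real system the renderer would execute the model's code (ideally in a sandbox) with Matplotlib's non-interactive Agg backend and return the saved PNG; the fake renderer here only stands in for that step.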

This approach turns hard-to-control image generation into a language-modeling problem, which is exactly what large models are good at. The core structural properties of mathematical figures, such as shape, position, and angle, can be expressed exactly in structured code, which sidesteps interference from pixel-level details.

To train such a model, the team built two key resources. The first is Math-VR, a dataset of 178,000 bilingual math problems. Unlike earlier benchmarks, in which the figures are already given and the model only needs to interpret them, Math-VR requires the model to actively draw in order to think. For example, a problem about an isosceles triangle may involve three possible cases, and the model must draw a separate figure for each one. By subject, geometry makes up an overwhelming 81% of the dataset.
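To make the "three cases, three figures" idea concrete, here is a hypothetical problem of our own, not one taken from Math-VR: given segment AB with |AB| = `ab` and a ray from A at angle θ, find every point C on the ray that makes triangle ABC isosceles. Depending on which vertex is the apex, there are three cases, each with a closed-form solution, and code states them exactly rather than approximately. The function names `isosceles_cases` and `draw_cases` are illustrative assumptions; Matplotlib is imported inside `draw_cases` so the geometry part runs without it.

```python
import math
from typing import Dict, Tuple

Point = Tuple[float, float]


def isosceles_cases(ab: float, theta_deg: float) -> Dict[str, Point]:
    """Points C on the ray from A at angle theta making triangle ABC isosceles.

    Hypothetical setup: A = (0, 0), B = (ab, 0), C = t * (cos θ, sin θ), t > 0.
      apex A (AC = AB): t = ab
      apex C (CA = CB): C lies on x = ab / 2, so t = ab / (2 cos θ)
      apex B (BC = BA): |t u - B|^2 = ab^2 gives t = 2 ab cos θ
    Assumes 0 < θ < 90 degrees so every case yields a point on the ray.
    """
    th = math.radians(theta_deg)
    ux, uy = math.cos(th), math.sin(th)
    ts = {
        "apex A (AC = AB)": ab,
        "apex C (CA = CB)": ab / (2 * ux),
        "apex B (BC = BA)": 2 * ab * ux,
    }
    return {name: (t * ux, t * uy) for name, t in ts.items()}


def draw_cases(ab: float, theta_deg: float, path: str = "isosceles_cases.png") -> None:
    """Draw one panel per case and save the figure to a PNG file."""
    import matplotlib
    matplotlib.use("Agg")  # non-interactive backend: write a file, no window
    import matplotlib.pyplot as plt

    cases = isosceles_cases(ab, theta_deg)
    fig, axes = plt.subplots(1, len(cases), figsize=(4 * len(cases), 4))
    for ax, (name, (cx, cy)) in zip(axes, cases.items()):
        ax.plot([0, ab, cx, 0], [0, 0, cy, 0], "k-")  # closed triangle ABC
        for label, xy in {"A": (0, 0), "B": (ab, 0), "C": (cx, cy)}.items():
            ax.annotate(label, xy, textcoords="offset points", xytext=(4, 4))
        ax.set_title(name)
        ax.set_aspect("equal")  # keep angles and lengths visually faithful
    fig.savefig(path)
    plt.close(fig)


# Check that each case really is isosceles (AB = 4, ray at 45 degrees).
for name, c in isosceles_cases(4.0, 45.0).items():
    ac, bc = math.dist((0, 0), c), math.dist((4.0, 0), c)
    print(f"{name}: AB=4.000, AC={ac:.3f}, BC={bc:.3f}")
```

Calling `draw_cases(4.0, 45.0)` would write the three panels to a PNG. At θ = 60° all three cases collapse into one equilateral triangle, the kind of special case that is easy to miss in a mental picture but falls straight out of the formulas.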

The second is MatplotCode, an image-to-code converter specialized for mathematical figures that translates them into Python plotting code with high fidelity. A dedicated converter is needed because even top commercial models such as Gemini-2.5-Pro and GPT-5 cannot reliably convert complex mathematical figures into accurate plotting code in a zero-shot setting. In the team's experiments, MatplotCode outperforms existing models in both code-generation success rate and image-reconstruction fidelity.

Experimental results confirm the effectiveness of this code-as-reasoning paradigm. On the Math-VR benchmark, CodePlot-CoT improves on its base model by up to 21%. More notably, even much larger closed-source models such as Gemini-2.5-Pro still get about one-third of the questions wrong on this new benchmark. This indicates that simply scaling up model size and lengthening text-based reasoning chains is not enough. To truly solve visual math reasoning problems, controllable, precise, and verifiable code-driven visual reasoning is key.

CodePlot-CoT is not just another advanced model; it also opens up a new direction for multimodal mathematical reasoning. It shows that in fields demanding high precision and rigorous logic, such as scientific computing and engineering design, large models should not try to imitate human brushstrokes. Instead, they should use their programming abilities to build an exact, controllable digital world and then reason and verify within it.

The team has open-sourced all of its datasets, code, and pretrained models, providing valuable resources for the wider AI community. The work marks an important step forward for large models reasoning about geometry, and code-driven visual reasoning gives AI an effective new way to tackle mathematical geometry problems.

Paper link: https://arxiv.org/pdf/2510.11718