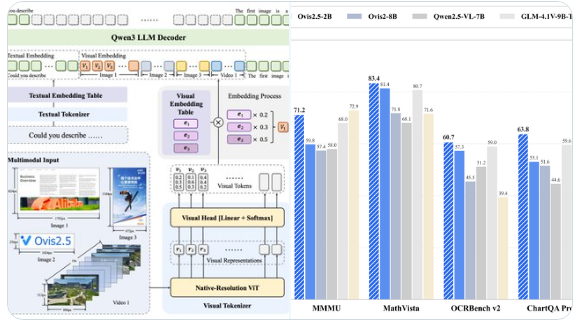

AIDC-AI, the AI team of Alibaba International Digital Trade Group (AIDC), recently released Ovis2.5, a new multimodal large language model available in 9B and 2B parameter versions. Positioned as an economical visual reasoning solution, the model performs strongly for its size.

Key Features of Ovis2.5

1. **Natively Resolution-Aware**: Ovis2.5 uses a NaViT visual encoder that processes images at their native resolution, preserving both fine detail and global structure without the loss introduced by tiling.

2. **Deep Reasoning Capabilities**: The model supports an optional "thinking mode," which may partly build on techniques from Alibaba's Qwen3. Beyond linear chain-of-thought (CoT) reasoning, Ovis2.5 can check and revise its own work, and it supports a configurable thinking budget to improve problem-solving accuracy.

3. **Leading Chart and Document OCR**: At both the 9B and 2B scales, Ovis2.5 achieves leading results in complex chart analysis, document understanding (including tables and forms), and optical character recognition (OCR), making it well suited to real-world applications.

4. **Broad Task Coverage**: The model performs well on image reasoning, video understanding, and visual grounding benchmarks, demonstrating strong general multimodal capabilities.
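
To make the native-resolution point concrete, here is a minimal sketch of the idea and not the Ovis2.5 implementation. It shows how an encoder that keeps an image at its own resolution gets a patch grid that follows the image's shape, whereas a fixed square resize would stretch a wide image. The patch size of 14 pixels and the function names `native_patch_grid` and `square_resize_stretch` are assumptions chosen for illustration; they are not taken from the release.

```python
import math

PATCH = 14  # hypothetical patch size in pixels, not taken from the Ovis2.5 release


def native_patch_grid(width, height, patch=PATCH):
    """Return (cols, rows) of patches when the image keeps its own resolution."""
    return math.ceil(width / patch), math.ceil(height / patch)


def square_resize_stretch(width, height):
    """Aspect-ratio distortion of a fixed square resize (1.0 means none)."""
    return max(width, height) / min(width, height)


if __name__ == "__main__":
    # A square image and a wide chart-like image (sizes are illustrative).
    for w, h in [(448, 448), (1280, 320)]:
        cols, rows = native_patch_grid(w, h)
        print(
            f"{w}x{h}: {cols}x{rows} patches = {cols * rows} visual tokens, "
            f"square-resize stretch {square_resize_stretch(w, h):.1f}x"
        )
```

In this sketch the wide image keeps a wide patch grid, so small text in a chart or table is not squeezed along one axis before the encoder sees it.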

The release of Ovis2.5 reflects AIDC-AI's continued work on multimodal AI. By delivering high performance in a compact model, Ovis2.5 gives developers and enterprises an efficient, easy-to-deploy option, particularly for scenarios that combine visual and textual reasoning. The model is open-sourced on platforms such as GitHub and Hugging Face, which should encourage collaboration within the global AI community.

The release marks another significant step for AIDC-AI's Ovis series and for the development of multimodal large language models more broadly.