Recently, a new AI evaluation benchmark called FormulaOne has attracted widespread attention. The benchmark, introduced by AAI, a research institution focused on superintelligence and advanced AI systems, was used to test a number of top AI models, including GPT-5, Grok 4, and o3 Pro. The results were striking: on the hardest tier of problems, every one of these models scored zero!

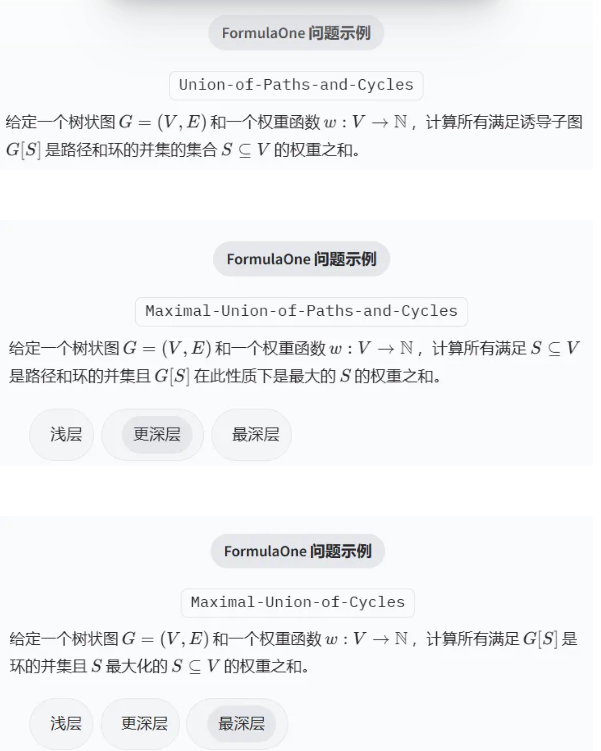

The FormulaOne benchmark includes 220 novel graph-structured dynamic programming problems, with difficulty ranging from moderate to research-level. The problems draw on areas such as topology, geometry, and combinatorics. Although the problem statements look simple, solving them requires long chains of complex reasoning and logical deduction, approaching the level of a doctoral research challenge.

The problems build on an algorithmic meta-theorem due to Courcelle: on "tree-like" graphs (graphs of bounded treewidth), any property expressible in monadic second-order logic can be decided in linear time by dynamic programming. The algorithm relies on a tree decomposition, which groups the graph's vertices into overlapping sets ("bags") arranged in a tree, and then solves the problem bag by bag, working upward from the leaves.
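To make the idea concrete, here is a minimal Python sketch of the classic pattern that Courcelle's theorem generalizes: dynamic programming over a tree decomposition, applied to maximum independent set. This is a textbook example we chose for illustration, not a FormulaOne problem, and the function names and the dict-based representation of bags and tree edges are our own assumptions. Each bag's table has up to 2^(bag size) entries, which is why this kind of algorithm is efficient only when bags stay small.

```python
from itertools import combinations


def subsets(bag):
    """Yield every subset of a bag as a frozenset (2^|bag| of them)."""
    items = sorted(bag)
    for r in range(len(items) + 1):
        for combo in combinations(items, r):
            yield frozenset(combo)


def is_tree_decomposition(vertices, edges, bags, children, root):
    """Check the tree-decomposition conditions for a rooted tree of bags."""
    # Every edge must lie inside at least one bag.
    if not all(any(u in b and v in b for b in bags.values()) for u, v in edges):
        return False
    # For each vertex, the bags containing it must form one connected subtree.
    # In a rooted tree that means exactly one such node is a "top": either the
    # root, or a node whose parent's bag lacks the vertex. Zero tops means the
    # vertex appears in no bag at all, which is also invalid.
    parent = {c: p for p, cs in children.items() for c in cs}
    for v in vertices:
        tops = [t for t, b in bags.items()
                if v in b and (t == root or v not in bags[parent[t]])]
        if len(tops) != 1:
            return False
    return True


def max_independent_set_size(edges, bags, children, root):
    """Bottom-up DP over a tree decomposition for maximum independent set."""
    adj = {}
    for u, v in edges:
        adj.setdefault(u, set()).add(v)
        adj.setdefault(v, set()).add(u)

    def independent(s):
        return all(adj.get(u, set()).isdisjoint(s) for u in s)

    def solve(t):
        # table[S] = size of the largest independent set among the vertices
        # in t's subtree whose intersection with bag t is exactly S.
        bag = bags[t]
        child_tables = [(c, solve(c)) for c in children.get(t, [])]
        table = {}
        for s in subsets(bag):
            if not independent(s):
                continue
            total = len(s)
            for c, ctab in child_tables:
                shared = bags[c] & bag
                # Pick the best child state that agrees with s on the shared
                # vertices; subtract them so they are not counted twice.
                # s & shared is itself independent, so a match always exists.
                total += max(val - len(sc & shared)
                             for sc, val in ctab.items()
                             if sc & shared == s & shared)
            table[s] = total
        return table

    return max(solve(root).values())


# Usage: a 5-cycle 0-1-2-3-4-0 with a width-2 decomposition (a path of 3 bags).
c5_edges = [(0, 1), (1, 2), (2, 3), (3, 4), (4, 0)]
c5_bags = {0: frozenset({0, 1, 2}), 1: frozenset({0, 2, 3}), 2: frozenset({0, 3, 4})}
c5_children = {0: [1], 1: [2]}
assert is_tree_decomposition(range(5), c5_edges, c5_bags, c5_children, root=0)
print(max_independent_set_size(c5_edges, c5_bags, c5_children, root=0))  # 2
```

The validity check matters because the DP is only correct when the decomposition satisfies the connectivity condition: it guarantees that two different child subtrees can only share vertices that also sit in the parent bag, so combining child results never double-counts or misses an edge.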

On shallow-tier problems, these frontier models performed reasonably well, with success rates between 50% and 70%, suggesting they have some grasp of this type of problem. On the deeper, harder tier, however, the picture is far less encouraging: success rates dropped sharply, with Grok 4 and Gemini Pro each solving only about 1% of the problems, and GPT-5 Pro doing slightly better with just four problems solved. On the deepest tier, every model scored zero, a collective failure.

The results have sparked wide discussion in the academic community and raised doubts about the true capabilities of these models. Some have even suggested that human PhD students take the same test for comparison. With AI technology advancing rapidly, the question remains: how far are these models from genuinely "doctoral-level" reasoning?

Leaderboard: https://huggingface.co/spaces/double-ai/FormulaOne-Leaderboard

Key points:

✅ AI models such as GPT-5 scored zero on the hardest tier of the new FormulaOne benchmark, a striking result!

✅ FormulaOne contains 220 challenging graph-based dynamic programming problems designed to test the reasoning ability of AI models.

✅ Most models performed reasonably well on shallow problems but failed on the deeper, harder ones, exposing the limits of current AI reasoning.