As AI technology advances, video generation quality has improved remarkably fast, progressing from blurry clips to highly realistic footage. However, the ability to control and edit generated videos is still limited. NVIDIA and its collaborators address this challenge in recent research with DiffusionRenderer.

DiffusionRenderer goes beyond generating videos: it estimates the 3D properties of the scenes they depict and lets users manipulate them. By combining generation and editing in one framework, it opens up new possibilities for AI-driven content creation. Traditional physically based rendering (PBR) can produce high-fidelity results, but it depends on explicit, accurate scene geometry, materials, and lighting, which are difficult to recover from real-world video. This makes it hard to edit captured footage with PBR. DiffusionRenderer sidesteps this limitation by estimating scene properties directly from video and re-rendering them with neural networks instead of an explicit 3D reconstruction.

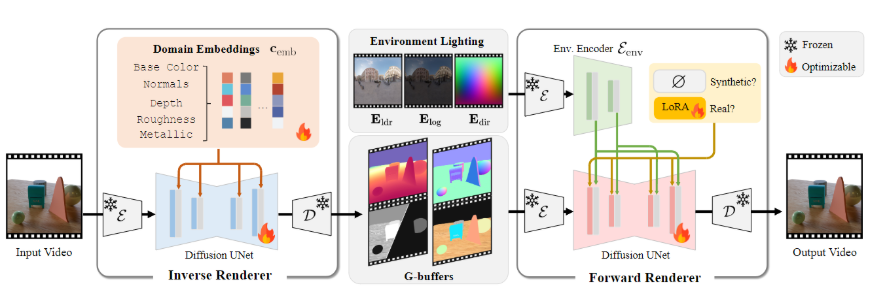

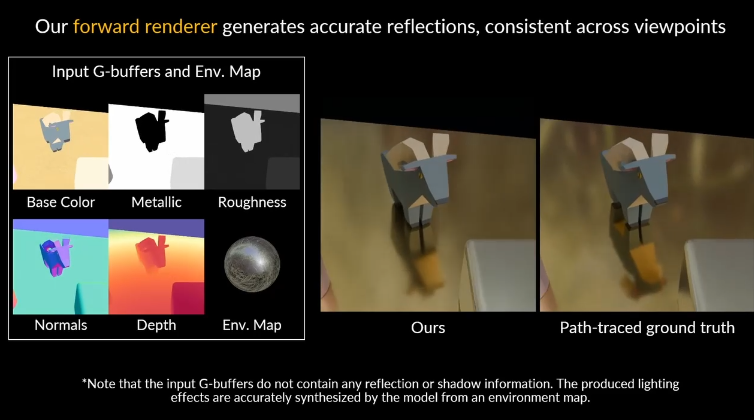

The model uses two neural renderers. The first, a neural inverse renderer, analyzes the input video and estimates the scene's geometry and material properties, storing them as per-pixel data buffers (G-buffers) such as surface normals, depth, and base color. The second, a neural forward renderer, combines these buffers with the desired lighting to synthesize realistic video. Working together, the two renderers allow DiffusionRenderer to adapt well to real-world footage.
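
The benefit of this split is that, once a scene is reduced to per-pixel buffers, new lighting can be applied without touching the original footage. The following minimal sketch illustrates that idea, assuming a classical Lambertian shading rule as a stand-in for the neural forward renderer. It is not NVIDIA's code or API; the `GBufferPixel` layout and the `shade_lambert` and `relight` helpers are hypothetical names chosen for illustration.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec3 = Tuple[float, float, float]


@dataclass
class GBufferPixel:
    """Hypothetical per-pixel attributes an inverse renderer might estimate."""
    albedo: Vec3  # base color, linear RGB in [0, 1]
    normal: Vec3  # unit-length surface normal


def normalize(v: Vec3) -> Vec3:
    """Scale a vector to unit length."""
    length = math.sqrt(sum(c * c for c in v))
    return (v[0] / length, v[1] / length, v[2] / length)


def shade_lambert(pixel: GBufferPixel, light_dir: Vec3, light_rgb: Vec3) -> Vec3:
    """Classical stand-in for a forward renderer: albedo * light * max(0, n . l)."""
    l = normalize(light_dir)
    n_dot_l = max(0.0, sum(n * c for n, c in zip(pixel.normal, l)))
    return (
        pixel.albedo[0] * light_rgb[0] * n_dot_l,
        pixel.albedo[1] * light_rgb[1] * n_dot_l,
        pixel.albedo[2] * light_rgb[2] * n_dot_l,
    )


def relight(gbuffer: List[GBufferPixel], light_dir: Vec3, light_rgb: Vec3) -> List[Vec3]:
    """Re-render every pixel of a flattened G-buffer under a new directional light."""
    return [shade_lambert(p, light_dir, light_rgb) for p in gbuffer]


# Usage: two pixels, one facing the camera (+z) and one facing up (+y).
frame = [
    GBufferPixel(albedo=(0.8, 0.2, 0.2), normal=(0.0, 0.0, 1.0)),
    GBufferPixel(albedo=(0.2, 0.8, 0.2), normal=(0.0, 1.0, 0.0)),
]
lit_from_front = relight(frame, light_dir=(0.0, 0.0, 1.0), light_rgb=(1.0, 1.0, 1.0))
lit_from_above = relight(frame, light_dir=(0.0, 1.0, 0.0), light_rgb=(1.0, 1.0, 1.0))
```

Moving the light changes which surfaces are lit while the buffers stay fixed. A material edit would work the same way: change a pixel's `albedo` and re-render. DiffusionRenderer replaces this hand-written shading rule with a learned video diffusion model, which is intended to capture effects such as shadows and reflections that a simple Lambertian term ignores.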

The research team designed a dedicated data strategy for DiffusionRenderer. They built a large synthetic dataset of 150,000 videos as the foundation for training. They also automatically generated scene-attribute labels for a dataset of 10,510 real-world videos, which helps the model adapt to the characteristics of real footage.

In the research team's comparisons, DiffusionRenderer outperforms competing methods across multiple tasks. It produces more realistic lighting effects in complex scenes and estimates scene material properties accurately during inverse rendering.

The technology has broad practical potential. Given only an input video, users can relight the scene, edit materials, and seamlessly insert new objects, modifying and recreating the scene with little effort. Its release marks a significant advance in video rendering and editing and gives creators and designers greater creative freedom.

- Demo Video: https://youtu.be/jvEdWKaPqkc

- GitHub: https://github.com/nv-tlabs/cosmos1-diffusion-renderer

- Project Page: https://research.nvidia.com/labs/toronto-ai/DiffusionRenderer/

Key Points:

🌟 DiffusionRenderer brings new possibilities to 3D scene creation by combining generation and editing in a single model.

🎥 The model leverages the collaboration between the neural inverse renderer and the neural forward renderer, enhancing the realism and adaptability of video rendering.

🚀 Its practical applications include relighting, material editing, and object insertion, making video creation and editing more accessible to creators.