Mistral AI has partnered with All Hands AI to launch the Devstral 2507 series of large language models for developers. The series includes two new models: Devstral Small 1.1 and Devstral Medium 2507. Both are designed for code reasoning, program synthesis, and agent-based structured task execution, and are aimed at practical work in large software repositories. The release has been optimized for performance and cost, which makes it a good fit for development tools and code automation systems.

Devstral Small 1.1 is an open-source model built on Mistral-Small-3.1, with approximately 24 billion parameters. It supports a 128k-token context window, so it can process multi-file code input and long, complex prompts, which matches how software engineering workflows actually look. This version has been fine-tuned specifically for structured outputs, including XML and function-call formats, making it compatible with agent frameworks such as OpenHands and suitable for tasks such as program navigation, multi-step editing, and code search. Devstral Small 1.1 is released under the Apache 2.0 license, which permits both research and commercial use.
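To make the function-call format concrete, here is a minimal sketch of a chat request body that offers the model one tool, plus a helper that decodes a tool call from a response. It makes several assumptions not stated in the article: the model identifier `devstral-small-2507`, an OpenAI-style `tools` schema with JSON-string arguments, and a hypothetical `search_code` tool invented purely for illustration. It only builds and parses JSON and sends nothing over the network.

```python
import json
from typing import Any, Dict, Tuple

# Assumed model identifier; check the provider's model list before use.
MODEL_ID = "devstral-small-2507"


def build_request(user_prompt: str) -> Dict[str, Any]:
    """Build a chat request body that offers the model one callable tool."""
    # Hypothetical tool: "search_code" is invented for this example.
    search_tool = {
        "type": "function",
        "function": {
            "name": "search_code",
            "description": "Search the repository for a text pattern.",
            "parameters": {
                "type": "object",
                "properties": {
                    "pattern": {"type": "string", "description": "Text or regex to find."},
                    "path": {"type": "string", "description": "Directory to search in."},
                },
                "required": ["pattern"],
            },
        },
    }
    return {
        "model": MODEL_ID,
        "messages": [{"role": "user", "content": user_prompt}],
        "tools": [search_tool],
        "tool_choice": "auto",
    }


def parse_tool_call(tool_call: Dict[str, Any]) -> Tuple[str, Dict[str, Any]]:
    """Return (tool name, decoded arguments) from one tool call.

    Assumes the arguments arrive as a JSON-encoded string.
    """
    fn = tool_call["function"]
    return fn["name"], json.loads(fn["arguments"])


if __name__ == "__main__":
    body = build_request("Where is the retry logic defined?")
    print(json.dumps(body, indent=2))
```

An agent framework would send a body like this, run whichever tool the model requests, and feed the result back as a follow-up message. The exact field names may differ by provider and client version.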

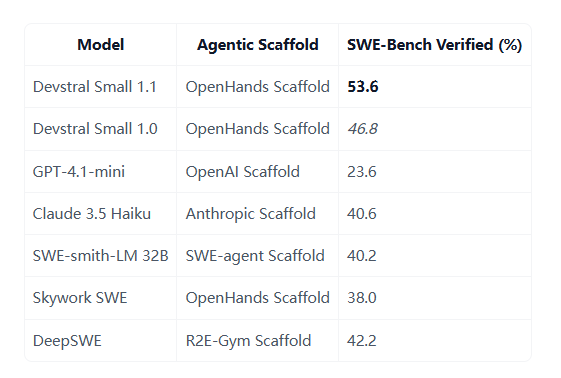

In performance testing, Devstral Small 1.1 scored 53.6% on the SWE-Bench Verified benchmark, which measures whether a model can generate correct patches for real GitHub issues. Although it does not match large commercial models, it strikes a balance between size, inference cost, and reasoning capability, making it suitable for a wide range of coding tasks.

The model is also released in multiple formats, including quantized versions that can run locally on a high-memory GPU (such as an RTX 4090) or on Apple Silicon machines with more than 32 GB of RAM. Mistral additionally offers the model through its inference API, currently priced the same as the Mistral-Small series models.
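A quick back-of-the-envelope calculation shows why quantization matters for local use. The sketch below estimates the memory needed for the weights alone, assuming exactly 24 billion parameters and a uniform bit width per weight. It ignores the KV cache, activations, and runtime overhead, so real requirements will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights only, in gigabytes (10^9 bytes)."""
    return num_params * bits_per_weight / 8 / 1e9


# Assumption: "approximately 24 billion parameters" taken as exactly 24e9.
PARAMS = 24e9

if __name__ == "__main__":
    for bits in (16, 8, 4):
        print(f"{bits}-bit weights: ~{weight_memory_gb(PARAMS, bits):.0f} GB")
```

Under these assumptions the weights take roughly 48 GB at 16 bits, 24 GB at 8 bits, and 12 GB at 4 bits. Since an RTX 4090 has 24 GB of VRAM, only the more aggressive quantization levels leave room for context on a single card.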

Devstral Medium 2507 is not open source and is available only through the Mistral API or enterprise deployment agreements. It scored 61.6% on the SWE-Bench Verified benchmark and showed strong long-context reasoning, surpassing some commercial models such as Gemini 2.5 Pro and GPT-4.1. Its API pricing is higher than that of the Small version, but its stronger reasoning makes it well suited to tasks in large code repositories.
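To put the two scores in perspective, the percentages can be converted into approximate counts of resolved issues. This sketch assumes the standard SWE-Bench Verified set of 500 tasks; the counts are derived from the reported percentages and are not figures published in the article.

```python
# Assumption: SWE-Bench Verified contains 500 human-validated tasks.
BENCHMARK_TASKS = 500


def resolved_count(score_percent: float, total: int = BENCHMARK_TASKS) -> int:
    """Convert a pass rate in percent into an approximate number of resolved tasks."""
    return round(score_percent / 100 * total)


if __name__ == "__main__":
    small = resolved_count(53.6)   # Devstral Small 1.1
    medium = resolved_count(61.6)  # Devstral Medium 2507
    print(f"Small: ~{small} tasks, Medium: ~{medium} tasks, gap: ~{medium - small} tasks")
```

On that basis, the 8-point gap between the two models corresponds to roughly 40 additional issues resolved out of 500.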

Devstral Small is better suited to local development, experimentation, and integration into client-side development tools, while Devstral Medium offers higher accuracy and consistency in structured code editing tasks, making it suitable for high-performance production services. Both models are designed to integrate with code agent frameworks, simplifying automated workflows for test generation, refactoring, and bug fixing.

With this release, Mistral AI's Devstral 2507 series gives developers options that cover a range of software engineering needs, from experimental agent development to production deployment in commercial environments.

Hugging Face: https://huggingface.co/mistralai/Devstral-Small-2507

Key Points:

🌟 The Devstral 2507 series includes the open-source Devstral Small 1.1 and the enterprise-oriented Devstral Medium 2507, both aimed at improving code reasoning and automation.

🚀 Devstral Small 1.1 scored 53.6% on the SWE-Bench Verified benchmark, while Devstral Medium 2507 scored 61.6%, with the latter outperforming some commercial models.

💼 Both models integrate with code agent frameworks and cover application scenarios ranging from local development to enterprise-level services.