Welcome to the "AI Daily" section, your daily guide to the world of artificial intelligence. Each day we round up the latest developments in the AI field with a focus on developers, helping you follow technology trends and innovative AI product applications.

Click to learn more about new AI products: https://app.aibase.com/zh

1. Latency below 250 milliseconds! MiniMax Speech 2.6 released, Fluent LoRA allows one-click replication of any voice, bringing speech synthesis into the real-time interaction era

MiniMax Speech 2.6 was released, pushing speech synthesis into the real-time interaction era with low latency and voice cloning technology.

【AiBase Summary:】

🎙️ With Fluent LoRA technology, it only takes 30 seconds of audio to clone a voice.

⏱️ Achieves end-to-end latency below 250 milliseconds, close to the rhythm of human conversation.

🌐 Supports multiple scenarios such as education, customer service, and smart hardware.

2. Ant Group's Agentar builds a "Financial AI Brain" and is selected as an outstanding international-standard case

The Agentar knowledge engineering KBase case, developed by Ant Group and Ningbo Bank, has been selected as an outstanding case of international-standard financial applications. The solution uses knowledge engineering technology to break down knowledge silos in financial institutions and builds an intelligent decision-making system that significantly improves service efficiency and accuracy. Its strong explainability sets a new benchmark for the intelligent upgrade of the financial industry.

【AiBase Summary:】

🧠 The Agentar knowledge engineering platform realizes full lifecycle management of multi-source heterogeneous data.

💡 The system enhances knowledge quality and AI logical reasoning capabilities through the "planning-retrieval-inference" mechanism.

🔒 Strong explainability ensures safe and compliant application of generative AI in the financial field.

3. Zhiyuan (BAAI) releases Emu3.5 large model: Reconstructing multimodal intelligence with "next state prediction", with embodied manipulation capabilities that impress the industry

Zhiyuan released the Emu3.5 large model, which reconstructs multimodal intelligence through "next state prediction" and shows strong embodied manipulation capabilities, marking a key step for AI from perception and understanding toward intelligent operation.

【AiBase Summary:】

🧠 Emu3.5 introduces an autoregressive "next state prediction" (NSP) framework, achieving breakthroughs in multimodal sequence modeling.

🖼️ Supports text-image collaborative generation, intelligent image editing, and spatiotemporal dynamic reasoning, enhancing cross-modal operational capabilities.

🔄 Breaks information silos, unifies encoding of text, vision, and actions, enabling free switching and collaborative reasoning across modalities.

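4. Cursor 2.0 makes a stunning debut! Self-developed Composer model is 4x faster, 8 AI Agents code in parallel, and developer efficiency gets a "nuclear-level" upgrade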

Cursor 2.0's release marks a paradigm shift from an intelligent completion plugin to a multi-agent collaborative development platform, significantly improving development efficiency and quality through its self-developed Composer model and multi-Agent interface.

【AiBase Summary:】

🧠 The Composer model is specifically designed for agent-based coding, using reinforcement learning and a mixture of experts architecture, increasing response speed by four times.

🤖 Multiple AI Agents work in parallel and handle tasks independently, improving development efficiency on complex projects.

🔄 Full-process automation features integrate code review, testing, and execution, reducing context switching and enhancing developer focus.

5. xAI upgrades Grok Imagine iOS version: New video generation and prompt remixing

xAI announced that the iOS version of its Grok Imagine tool will introduce a video generation feature, allowing users to generate high-definition dynamic videos through text or image prompts and remix prompts directly from content summaries. This feature is optimized based on the Aurora/Grok core model, improving operational smoothness, suitable for short films, advertisements, and creative content.

【AiBase Summary:】

🎥 New video generation feature, supports generating high-definition dynamic videos through text or image prompts.

🔄 The prompt remixing mechanism lowers the barrier to creation and enables rapid creative iteration.

📱 The update lands on iOS first, with Android and web versions to follow, enhancing mobile AI creation capabilities.

6. OpenAI launches new safety model gpt-oss-safeguard, helping the AI field respond flexibly to risks

OpenAI's gpt-oss-safeguard series of models offers greater flexibility and customizability for AI safety: the models classify content according to safety policies set by developers and provide reasoning for each decision. However, they have limitations in processing speed and resource consumption, so they may not perform as well as traditional classifiers in some scenarios.

【AiBase Summary:】

🛡️ OpenAI launched two new safety models, gpt-oss-safeguard-120b and gpt-oss-safeguard-20b, allowing flexible customization of safety policies.

⚙️ The new models classify user messages and conversations against the safety policy supplied as input and provide their reasoning.

📊 Despite these advantages, traditional classifiers may be more effective in some cases, and the new models consume more resources.

Details link: https://huggingface.co/collections/openai/gpt-oss-safeguard
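
As a rough illustration of the policy-driven classification described above, the sketch below builds a chat-style request in which the developer's policy travels as the system message and the content to classify as the user message. The function name `build_safeguard_messages`, the sample policy text, and the message layout are assumptions made for this example, not OpenAI's documented interface; the model card is the place to check the actual prompt format.

```python
from typing import Dict, List


def build_safeguard_messages(policy: str, content: str) -> List[Dict[str, str]]:
    """Pair a developer-written policy with the content to classify.

    Assumption: the policy is sent as the system message and the content
    as the user message; check the model card for the real format.
    """
    if not policy.strip():
        raise ValueError("a non-empty policy is required")
    return [
        {"role": "system", "content": policy.strip()},
        {"role": "user", "content": content},
    ]


# Hypothetical policy, written only for this example.
EXAMPLE_POLICY = """
Label the content as VIOLATING if it shares another person's home address
without consent; otherwise label it NON-VIOLATING. Explain the decision briefly.
"""

messages = build_safeguard_messages(
    EXAMPLE_POLICY, "Here is my neighbour's address: ..."
)
```

Because the policy is plain text supplied at request time, changing the rules would mean editing that string rather than retraining a classifier, which is the flexibility the summary refers to.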

7. TikTok launches new AI editing tool "Smart Split", helping creators easily edit and plan content

TikTok launched three new features at the U.S. Creators Summit, including the AI-driven video editing tool "Smart Split", the content planning tool "AI Outline", and an updated creator revenue-sharing policy, aiming to improve creators' efficiency and monetization capabilities.

【AiBase Summary:】

🎥 TikTok launched the AI editing tool "Smart Split", automatically generating short videos and subtitles.

📝 The new content planning tool "AI Outline" helps creators easily generate video outlines.

💰 Upgraded revenue-sharing policy allows top creators to receive up to 90% of the revenue share.

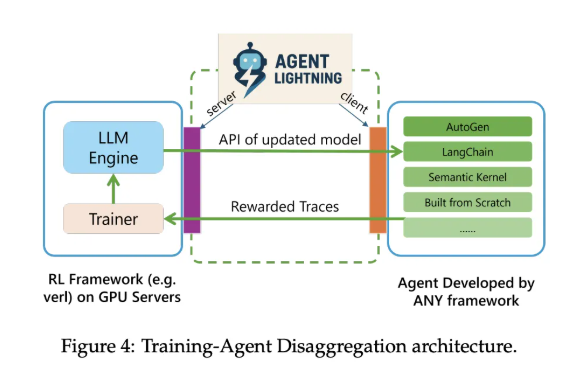

8. Microsoft launches Agent Lightning: A new AI framework to help train large language models with reinforcement learning

Microsoft's Agent Lightning is an open-source framework aimed at optimizing multi-agent systems through reinforcement learning without needing to restructure existing architectures, thus improving the performance of large language models.

【AiBase Summary:】

🧠 Agent Lightning models agents as partially observable Markov decision processes, improving policy performance.

🚀 The framework optimizes multi-agent systems without restructuring them, decoupling training from agent execution.

📈 Experiments show significant performance improvements in tasks such as text-to-SQL, retrieval-augmented generation, and math question answering.

Details link: https://arxiv.org/abs/2508.03680v1
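
To make the decision-process framing above more concrete, here is a minimal sketch of how one agent run could be recorded as a list of transitions for reinforcement learning. The `Transition` class, the `to_transitions` helper, and the choice to give every step the same episode-level reward are illustrative assumptions, not Agent Lightning's actual API or credit-assignment method.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Transition:
    observation: str  # what the LLM saw at this step (prompt or context)
    action: str       # what the LLM produced
    reward: float     # training signal assigned to this step


def to_transitions(calls: List[Tuple[str, str]], final_reward: float) -> List[Transition]:
    """Turn one agent run into per-call transitions.

    Simplifying assumption: every call in the run receives the same
    episode-level reward.
    """
    return [Transition(obs, act, final_reward) for obs, act in calls]


# Hypothetical text-to-SQL run with two LLM calls.
run = [
    ("Question: how many users signed up in May?",
     "SELECT COUNT(*) FROM users WHERE month = 5"),
    ("Query result: 42", "42 users signed up in May."),
]
transitions = to_transitions(run, final_reward=1.0)
```

Recording runs in this shape keeps the agent's own code unchanged: a trainer would only need the logged (observation, action, reward) records, which is one way to read the "training decoupling" claim.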