The Tongyi Qianwen team has officially open-sourced its latest image editing model, Qwen-Image-Edit, the series' next major release in image generation and editing after Qwen-Image. A foundation image editing model built on a 20B-parameter multimodal diffusion transformer (MMDiT), Qwen-Image-Edit performs strongly in precise text editing and in both semantic and appearance editing, with industry-leading results in Chinese text rendering.

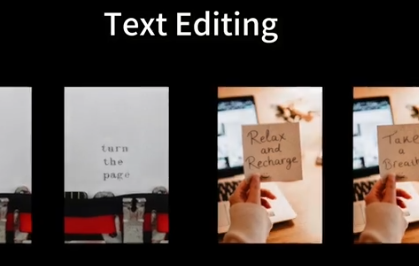

Breakthrough Text Editing: Accurate Bilingual Rendering

Qwen-Image-Edit inherits the core advantages of Qwen-Image and further upgrades its text rendering capabilities. In both English and Chinese, it delivers high-fidelity text editing: text within an image can be added, deleted, or modified directly while the original font, size, and style are preserved. In Chinese scenarios in particular, the model handles multi-line layouts, paragraph-level text generation, and complex layouts such as calligraphy couplets, with a single-character rendering accuracy of 97.29%, well ahead of other top models such as Seedream 3.0 (53.48%) and GPT Image 1 (68.37%).

For example, Qwen-Image-Edit can replace "Hope" on a poster with "Qwen" or correct an erroneous character in a calligraphy work while maintaining the overall visual consistency of the image. This precise text editing capability makes it highly promising for advertising design, brand promotion, and content creation.

Dual Encoding Mechanism: Balancing Semantics and Appearance

The core technical innovation of Qwen-Image-Edit lies in its dual encoding mechanism. During editing, the input image is encoded along two paths at once: the Qwen2.5-VL model extracts high-level features describing the scene and object relationships, while a variational autoencoder (VAE) encoder produces a reconstruction encoding that retains low-level visual details such as textures and colors. This mechanism lets the model understand the semantic intent of complex editing instructions while maintaining visual fidelity.

For instance, in semantic editing, Qwen-Image-Edit can adjust a person's posture in an image to "bending down to hold a dog's paw" while keeping the person's identity and the background consistent; in appearance editing, it can accurately add elements (such as signs with realistic reflections) or remove fine details (such as hair strands) while leaving other areas unchanged. This "semantic + appearance" dual control makes it particularly well suited to scenarios such as IP creation, style transfer, and novel view synthesis.
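
The two encoding paths described above can be pictured with a toy sketch. This is not the model's implementation: `semantic_encode` and `appearance_encode` are hypothetical stand-ins for Qwen2.5-VL and the VAE encoder, and the "image" is just a small grid of numbers. The point is only that the same input feeds two encoders, one coarse and global, one detail-preserving, and that both outputs accompany the instruction as conditioning.

```python
from typing import Dict, List

Image = List[List[float]]  # toy grayscale image; height and width assumed even


def semantic_encode(image: Image) -> Dict[str, float]:
    """Hypothetical stand-in for Qwen2.5-VL: a coarse, global summary."""
    pixels = [p for row in image for p in row]
    return {
        "mean": sum(pixels) / len(pixels),
        "min": min(pixels),
        "max": max(pixels),
    }


def appearance_encode(image: Image) -> List[List[float]]:
    """Hypothetical stand-in for the VAE encoder: 2x2 average pooling
    that keeps spatially local detail at reduced resolution."""
    latent = []
    for r in range(0, len(image) - 1, 2):
        row = []
        for c in range(0, len(image[0]) - 1, 2):
            block = (image[r][c] + image[r][c + 1]
                     + image[r + 1][c] + image[r + 1][c + 1])
            row.append(block / 4.0)
        latent.append(row)
    return latent


def build_conditioning(image: Image, instruction: str) -> dict:
    """Encode the same input along both paths and bundle the results."""
    return {
        "instruction": instruction,
        "semantic": semantic_encode(image),      # what the scene contains
        "appearance": appearance_encode(image),  # how the pixels look
    }
```

In this toy version, a semantic edit would lean mostly on the `semantic` entry, while an appearance edit that must leave other regions untouched would lean on the `appearance` latent.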

Multi-task Training: Leading Consistency in Editing

Through an enhanced multi-task training paradigm, Qwen-Image-Edit supports tasks including text-to-image (T2I), image-to-image (I2I), and text-guided image editing (TI2I). The model achieves state-of-the-art (SOTA) performance on image editing benchmarks such as GEdit, ImgEdit, and GSO, with comprehensive scores of 7.56 (English) and 7.52 (Chinese), surpassing competitors such as GPT Image 1 and FLUX.1 Kontext.

Notably, the "chain editing" capability of Qwen-Image-Edit stands out. For example, in a calligraphy correction scenario, the model can correct erroneous characters iteratively over multiple rounds, with each round building on the previous result, while keeping the overall style consistent. This ability significantly improves creative efficiency and lowers the barrier to professional visual content creation.
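
Chain editing as described above amounts to feeding each round's output back in as the next round's input. A minimal sketch of that loop, assuming a generic `edit_fn(image, instruction)` callable; `chain_edit` and `fake_edit` are hypothetical names used only for illustration, and `fake_edit` is a string-based stand-in for a real model call:

```python
from typing import Callable, List, Sequence, TypeVar

ImageT = TypeVar("ImageT")


def chain_edit(
    image: ImageT,
    instructions: Sequence[str],
    edit_fn: Callable[[ImageT, str], ImageT],
) -> List[ImageT]:
    """Apply instructions one round at a time.

    Each round edits the result of the previous round, never the
    original, so corrections accumulate. The full history is returned
    so that any intermediate result can be inspected or reused.
    """
    history = [image]
    for instruction in instructions:
        history.append(edit_fn(history[-1], instruction))
    return history


def fake_edit(image: str, instruction: str) -> str:
    """Stand-in editor: records the instruction instead of editing pixels."""
    return f"{image} -> [{instruction}]"


if __name__ == "__main__":
    rounds = chain_edit(
        "scroll",
        ["fix character 1", "fix character 2"],
        fake_edit,
    )
    for step, result in enumerate(rounds):
        print(step, result)
```

Keeping the history is a design choice: if one round degrades the image, the user can resume from an earlier step rather than starting over.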

Open Source Empowerment: Promoting the Global AI Creation Ecosystem

Qwen-Image-Edit is fully open-sourced under the Apache 2.0 license, allowing users to freely access the model weights through platforms such as Hugging Face and ModelScope, or experience it online via the "Image Editing" feature in Qwen Chat. Alibaba also provides native support in ComfyUI and has released detailed technical reports and quick start guides to help developers integrate it quickly.
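
For developers pulling the weights from Hugging Face, a rough sketch of a single edit with the `diffusers` library might look like the following. It assumes a recent `diffusers` release that includes `QwenImageEditPipeline`, the `Qwen/Qwen-Image-Edit` model ID, and a CUDA GPU with enough memory; the helper names, file paths, and the guidance and step values are illustrative assumptions, not recommendations from the team.

```python
from typing import Any, Dict


def build_edit_inputs(image: Any, prompt: str, steps: int = 50) -> Dict[str, Any]:
    """Collect keyword arguments for the pipeline call.

    The numeric values are assumed defaults for illustration only.
    """
    return {
        "image": image,
        "prompt": prompt,
        "negative_prompt": " ",
        "true_cfg_scale": 4.0,
        "num_inference_steps": steps,
    }


def edit_image(input_path: str, prompt: str, output_path: str, seed: int = 0) -> None:
    """Load the pipeline, apply one text-guided edit, and save the result."""
    # Heavy dependencies are imported here so that this module can be
    # imported on machines without torch or diffusers installed.
    import torch
    from PIL import Image
    from diffusers import QwenImageEditPipeline

    pipeline = QwenImageEditPipeline.from_pretrained(
        "Qwen/Qwen-Image-Edit", torch_dtype=torch.bfloat16
    )
    pipeline.to("cuda")

    image = Image.open(input_path).convert("RGB")
    inputs = build_edit_inputs(image, prompt)
    inputs["generator"] = torch.manual_seed(seed)  # reproducible sampling

    with torch.inference_mode():
        result = pipeline(**inputs)
    result.images[0].save(output_path)
```

A call such as `edit_image("poster.png", 'Replace the word "Hope" with "Qwen"', "poster_edited.png")` would then run one edit; the file names here are placeholders.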

On social media, developers have responded enthusiastically to the release of Qwen-Image-Edit, describing it as "raising the level of Chinese rendering and image editing to commercial standards," with some users even stating that its performance "matches or even exceeds GPT-4o and FLUX.1." The model also works with various LoRA models (such as MajicBeauty LoRA), further expanding its use in highly realistic image generation.

Application Scenarios: From Creative Design to Commercial Implementation

The versatility of Qwen-Image-Edit makes it suitable for a range of scenarios, including but not limited to:

- Poster and Advertising Design: Generate visually striking promotional posters, supporting complex text formatting and style transfer.

- IP Content Creation: Generate MBTI-themed emoticons based on brand mascots (such as Qwen's capybara) while maintaining character consistency.

- Education and Training: Quickly generate high-quality illustrations and charts to enhance the visual appeal of course content.

- Gaming and Film: Support character design, background generation, and novel view synthesis, streamlining asset development.

User feedback indicates that Qwen-Image-Edit's intuitive operation and high-quality output make it an ideal tool for non-professional designers. For example, a content creator said, "Qwen-Image-Edit allows me to complete marketing visual design in minutes, with accurate text rendering and effects comparable to professional software."

As the latest achievement from the Tongyi Qianwen team, Qwen-Image-Edit sets a new benchmark in AI image generation and editing with its strong text editing capabilities, dual encoding mechanism, and open-source availability. Its lead in Chinese text rendering and its balanced handling of semantic and appearance editing both support its standing as an industry-leading model.

GitHub: https://github.com/QwenLM/Qwen-Image